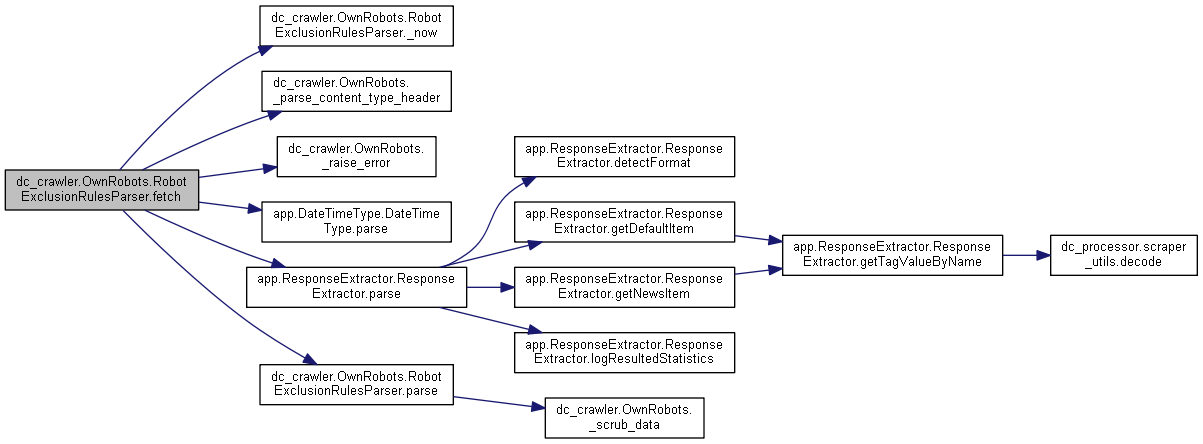

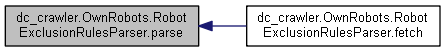

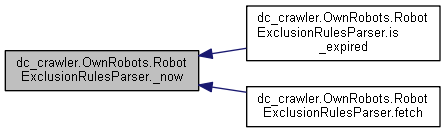

    def fetch(self, url, timeout=None):
        """Attempts to fetch the URL requested which should refer to a
        robots.txt file, e.g. http://example.com/robots.txt.
        """
        # ...
        encoding = "iso-8859-1"
        # ...
        expires_header = None
        content_type_header = None
        self._response_code = 0
        self._source_url = url
        # ...
            req = urllib_request.Request(url, None, {'User-Agent': self.user_agent})
        # ...
            req = urllib_request.Request(url)
        # ...
                f = urllib_request.urlopen(req, timeout=timeout)
            # ...
                f = urllib_request.urlopen(req)
            # ...
            content = f.read(MAX_FILESIZE)
            # ...
            expires_header = f.info().get("expires")
            content_type_header = f.info().get("Content-Type")
            # ...
            if hasattr(f, "code"):
                self._response_code = f.code
            # ...
                self._response_code = 200
            # ...
        except urllib_error.URLError:
            # ...
            error_instance = sys.exc_info()
            if len(error_instance) > 1:
                error_instance = error_instance[1]
            if hasattr(error_instance, "code"):
                self._response_code = error_instance.code
        # ...
        self.expiration_date = None
        if self._response_code >= 200 and self._response_code < 300:
            # ...
                self.expiration_date = email_utils.parsedate_tz(expires_header)
                # ...
                if self.expiration_date:
                    # ...
                    if self.expiration_date[9] == None:
                        self.expiration_date = self.expiration_date[:9] + (0,)
                    # ...
                    self.expiration_date = email_utils.mktime_tz(self.expiration_date)
                    if self.use_local_time:
                        # ...
                        self.expiration_date = time.mktime(time.gmtime(self.expiration_date))
        # ...
        if not self.expiration_date: self.expiration_date = self._now() + SEVEN_DAYS
        # ...
        if (self._response_code >= 200) and (self._response_code < 300):
            # ...
                encoding = "iso-8859-1"
        elif self._response_code in (401, 403):
            # ...
            content = "User-agent: *\nDisallow: /\n"
        elif self._response_code == 404:
        # ...
            _raise_error(urllib_error.URLError, self._response_code)
        # ...
        if ((PY_MAJOR_VERSION == 2) and isinstance(content, str)) or \
           ((PY_MAJOR_VERSION > 2) and (not isinstance(content, str))):
            # ...
                content = content.decode(encoding)
            # ...
                "Robots.txt contents are not in the encoding expected (%s)." % encoding)
            except (LookupError, ValueError):
                # ...
                _raise_error(UnicodeError, "I don't understand the encoding \"%s\"." % encoding)

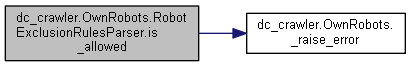

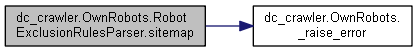
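
The expiry handling in `fetch` can be sketched in isolation. What follows is a minimal sketch, not the library's code: `expiration_timestamp` is a hypothetical helper, the value of `SEVEN_DAYS` is an assumption (seven days in seconds), and the local-time conversion behind `use_local_time` is left out.

```python
import time
from email import utils as email_utils

# Assumption: seven days expressed in seconds, matching the name SEVEN_DAYS
# that the listing uses for its fallback.
SEVEN_DAYS = 60 * 60 * 24 * 7


def expiration_timestamp(expires_header, now=None):
    """Return a UTC epoch timestamp derived from an Expires header value.

    Hypothetical helper: falls back to now + SEVEN_DAYS when the header is
    missing or cannot be parsed.
    """
    if now is None:
        now = time.time()

    # parsedate_tz() returns a 10-tuple, or None if the value is unparseable.
    parsed = email_utils.parsedate_tz(expires_header) if expires_header else None
    if parsed:
        # Element 9 is the timezone offset in seconds. Treat a missing
        # offset as UTC, mirroring the check in the listing.
        if parsed[9] is None:
            parsed = parsed[:9] + (0,)
        return email_utils.mktime_tz(parsed)

    return now + SEVEN_DAYS


# Example call with a well-formed header value.
stamp = expiration_timestamp("Thu, 01 Jan 2015 00:00:00 GMT")
```

Passing `now` explicitly keeps the fallback deterministic, which is convenient in tests; `fetch` itself appears to rely on `self._now()` for the same value.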

def _raise_error(error, message)

def _parse_content_type_header(header)
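
Only the signature of `_parse_content_type_header` appears here, so its body is not known from this page. As a rough illustration of what a helper with this purpose might do, the sketch below splits a `Content-Type` value into a media type and a charset. The name `split_content_type` and the returned pair are assumptions made for the example, not the library's API.

```python
def split_content_type(header):
    """Split a Content-Type header into (media_type, encoding).

    Hypothetical helper: both values are lowercased, and either may be an
    empty string when the header does not supply it.
    """
    if not header:
        return "", ""

    # A typical value looks like: text/plain; charset=UTF-8
    parts = [part.strip() for part in header.split(";")]
    media_type = parts[0].lower()

    encoding = ""
    for param in parts[1:]:
        name, sep, value = param.partition("=")
        if sep and name.strip().lower() == "charset":
            # Drop optional quotes around the charset value.
            encoding = value.strip().strip("\"'").lower()

    return media_type, encoding


# Example call with a typical header value.
media_type, encoding = split_content_type("text/plain; charset=UTF-8")
```

When the returned encoding is empty, a caller could fall back to `"iso-8859-1"`, the default that `fetch` assigns to `encoding`.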