- `def __init__(self, headers=None, isCacheUsing=False, robotsFileDir=None)`
- `def initFiends(self, headers=None, isCacheUsing=False, robotsFileDir=None)`
- `def loadRobots(self, url, siteId=None, additionHeaders=None, proxyName=None)`
- `def checkUrlByRobots(self, url, siteId=None, headers=None)`

Definition at line 21 of file RobotsParser.py.

◆ __init__()

`def dc_crawler.RobotsParser.RobotsParser.__init__(self, headers=None, isCacheUsing=False, robotsFileDir=None)`

Definition at line 30 of file RobotsParser.py.

```python
def __init__(self, headers=None, isCacheUsing=False, robotsFileDir=None):
    self.localParser = None
    self.robotsFileDir = None
    self.cacheElement = None
    self.cacheElementKeys = None
    self.localCrawlerDataStorage = None
    self.initFiends(headers, isCacheUsing, robotsFileDir)
```


◆ checkUrlByRobots()

`def dc_crawler.RobotsParser.RobotsParser.checkUrlByRobots(self, url, siteId=None, headers=None)`

Definition at line 105 of file RobotsParser.py.

```python
def checkUrlByRobots(self, url, siteId=None, headers=None):
    # (source lines 106-107 are not shown in this listing)
    if self.localParser is not None:
        if headers is not None and self.USER_AGENT_HEADER_NAME in headers and \
                headers[self.USER_AGENT_HEADER_NAME] is not None:
            self.headers = [headers[self.USER_AGENT_HEADER_NAME]]
            self.headers.append("*")

        for userAgent in self.headers:
            retUserAgent = userAgent
            isAllowed = self.localParser.is_allowed(userAgent, url)
            # (source lines 117-118 are not shown in this listing)
            if not isAllowed and self.USER_AGENT_HEADER_NAME in self.headers:
                retUserAgent = self.headers[self.USER_AGENT_HEADER_NAME]

        if self.localCrawlerDataStorage is not None and siteId is not None and \
                self.cacheElement is not None and self.cacheElementKeys is not None:
            host = Utils.UrlParser.getDomain(url)
            self.cacheElement[self.cacheElementKeys[0]][self.cacheElementKeys[1]] += 1
            self.localCrawlerDataStorage.saveElement(self.robotsFileDir, host, siteId, self.cacheElement)
    return isAllowed, retUserAgent
```
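A minimal sketch of the same contract, returning an (allowed, user-agent) pair, built on the standard library's `urllib.robotparser` rather than `OwnRobots.RobotExclusionRulesParser` (a swapped-in parser, not the one this class uses). Unlike the listing, it does not loop over an `[agent, "*"]` list, because `RobotFileParser.can_fetch` already falls back to the `User-agent: *` group when no other group matches. `make_parser` and `check_url_by_robots` are hypothetical names.

```python
from urllib.robotparser import RobotFileParser

USER_AGENT_HEADER_NAME = "User-Agent"

ROBOTS_TXT = """\
User-agent: BadBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def make_parser(text):
    # Parse robots.txt text that is already in memory (no network access).
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


def check_url_by_robots(parser, url, headers=None):
    """Return (is_allowed, user_agent), the same tuple shape as checkUrlByRobots."""
    # Use the caller's User-Agent header when present, otherwise the wildcard agent.
    agent = "*"
    if headers is not None and headers.get(USER_AGENT_HEADER_NAME) is not None:
        agent = headers[USER_AGENT_HEADER_NAME]
    # can_fetch applies the first matching User-agent group, else the "*" group.
    return parser.can_fetch(agent, url), agent


parser = make_parser(ROBOTS_TXT)
print(check_url_by_robots(parser, "https://example.com/page", {"User-Agent": "BadBot"}))
# (False, 'BadBot')
```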

◆ initFiends()

`def dc_crawler.RobotsParser.RobotsParser.initFiends(self, headers=None, isCacheUsing=False, robotsFileDir=None)`

Definition at line 45 of file RobotsParser.py.

```python
def initFiends(self, headers=None, isCacheUsing=False, robotsFileDir=None):
    self.headers = headers
    self.robotsFileDir = robotsFileDir
    if isCacheUsing:  # source line 51 is missing from the listing; condition inferred
        self.localCrawlerDataStorage = LFSDataStorage()
    else:
        self.localCrawlerDataStorage = None
```
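A minimal sketch of the on/off cache pattern, assuming (as the listing's inferred `if isCacheUsing:` does) that the flag alone decides whether a storage object exists. `HypotheticalStorage`, `save_element` and `CacheToggleSketch` are illustrative stand-ins, not the `LFSDataStorage` API.

```python
class HypotheticalStorage:
    """Hypothetical in-memory stand-in for LFSDataStorage (not its real API)."""

    def __init__(self):
        self.saved = {}

    def save_element(self, directory, host, site_id, element):
        # Key by the same three values saveElement() receives in checkUrlByRobots.
        self.saved[(directory, host, site_id)] = element


class CacheToggleSketch:
    def __init__(self, headers=None, is_cache_using=False, robots_file_dir=None):
        self.headers = headers
        self.robots_file_dir = robots_file_dir
        # A storage object exists only when caching is enabled (assumed condition).
        self.storage = HypotheticalStorage() if is_cache_using else None

    def maybe_save(self, host, site_id, element):
        # Cache steps are guarded by "is not None" checks, so a disabled cache is skipped.
        if self.storage is None or site_id is None:
            return False
        self.storage.save_element(self.robots_file_dir, host, site_id, element)
        return True


off = CacheToggleSketch(is_cache_using=False)
print(off.maybe_save("example.com", 7, {"hits": 1}))  # False
on = CacheToggleSketch(is_cache_using=True, robots_file_dir="/tmp/robots")
print(on.maybe_save("example.com", 7, {"hits": 1}))   # True
```

Keeping the disabled state as `None` rather than a no-op object mirrors the listings, where every cache access is preceded by an explicit `is not None` check.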

◆ loadRobots()

`def dc_crawler.RobotsParser.RobotsParser.loadRobots(self, url, siteId=None, additionHeaders=None, proxyName=None)`

Definition at line 64 of file RobotsParser.py.

```python
def loadRobots(self, url, siteId=None, additionHeaders=None, proxyName=None):
    if additionHeaders is None:
        ...  # (source lines 66-67 are not shown in this listing)
    if self.localCrawlerDataStorage is not None and self.robotsFileDir is not None and siteId is not None:
        host = Utils.UrlParser.getDomain(url)
        self.cacheElement = self.localCrawlerDataStorage.loadElement(self.robotsFileDir, host, siteId)
        if self.cacheElement is not None:
            self.cacheElementKeys = None
            cek = self.localCrawlerDataStorage.fetchLowFreqHeaders(
                fileStorageElements=self.cacheElement,
                # (source line 75, the rest of this call, is not shown in this listing)
            )
            if len(cek) > 0 and cek[0][0] == "robots.txt":
                self.cacheElementKeys = cek[0]
                contentBuf = self.cacheElementKeys[1]
    if contentBuf is None:
        robotsUrl = self.ROBOTS_PATTERN.sub(r'\1/robots.txt', url)
        logger.info(">>> robotsUrl: " + robotsUrl)
        try:
            if proxyName is not None and proxyName:
                response = requests.get(robotsUrl, headers=additionHeaders, allow_redirects=True,
                                        proxies={"http": "http://" + proxyName})
            else:
                response = requests.get(robotsUrl, headers=additionHeaders, allow_redirects=True)
            if response is not None and response.status_code == self.HTTP_OK_CODE:
                contentBuf = response.content
            else:
                logger.info(">>> robots.txt loading error, response is None or status_code not 200")
        except Exception as excp:
            logger.info(">>> robots.txt loading error = " + str(excp))

    if contentBuf is not None:
        self.localParser = OwnRobots.RobotExclusionRulesParser()
        self.localParser.parse(contentBuf)
    return not self.localParser is None
```
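A minimal, offline-testable sketch of the same cache-first, then-network flow. It swaps in the standard library's `urllib.robotparser` for `OwnRobots.RobotExclusionRulesParser`. The `fetch` callable (returning a status code and body bytes) and the `cache` dict are hypothetical stand-ins for `requests.get` and `LFSDataStorage`.

```python
import re
from urllib.robotparser import RobotFileParser

ROBOTS_PATTERN = re.compile(r'(https?://[^/]+).*', re.I)
HTTP_OK_CODE = 200


def load_robots(url, fetch, cache=None):
    """Return a RobotFileParser for url's site, or None if no robots.txt was loaded.

    fetch(robots_url) -> (status_code, body_bytes) is a hypothetical hook standing
    in for requests.get; cache is a hypothetical dict keyed by the robots.txt URL.
    """
    robots_url = ROBOTS_PATTERN.sub(r'\1/robots.txt', url)
    # 1. Try the cache first.
    content = cache.get(robots_url) if cache is not None else None
    # 2. Fall back to the network; an exception or non-200 status leaves content unset.
    if content is None:
        try:
            status, body = fetch(robots_url)
            if status == HTTP_OK_CODE:
                content = body
        except Exception:
            content = None
    # 3. Parse only if something was loaded.
    if content is None:
        return None
    parser = RobotFileParser()
    parser.parse(content.decode("utf-8", errors="replace").splitlines())
    return parser


def fake_fetch(robots_url):
    return 200, b"User-agent: *\nDisallow: /private/\n"


parser = load_robots("https://example.com/a/b", fake_fetch)
print(parser.can_fetch("*", "https://example.com/private/x"))  # False
print(parser.can_fetch("*", "https://example.com/a"))          # True
```

Injecting `fetch` keeps the flow testable without a network; in this sketch a cache hit means `fetch` is never called.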

◆ cacheElement

`dc_crawler.RobotsParser.RobotsParser.cacheElement`

◆ cacheElementKeys

`dc_crawler.RobotsParser.RobotsParser.cacheElementKeys`

◆ headers

`dc_crawler.RobotsParser.RobotsParser.headers`

◆ HTTP_OK_CODE

`int dc_crawler.RobotsParser.RobotsParser.HTTP_OK_CODE = 200` (static)

◆ localCrawlerDataStorage

`dc_crawler.RobotsParser.RobotsParser.localCrawlerDataStorage`

◆ localParser

`dc_crawler.RobotsParser.RobotsParser.localParser`

◆ ROBOTS_PATTERN

`dc_crawler.RobotsParser.RobotsParser.ROBOTS_PATTERN = re.compile(r'(https?://[^/]+).*', re.I)` (static)
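`loadRobots` derives the robots.txt location by substituting this pattern into the page URL. A small standalone check of that substitution; `robots_url` is a hypothetical helper name, while the pattern and the `r'\1/robots.txt'` replacement are copied from the `loadRobots` listing.

```python
import re

ROBOTS_PATTERN = re.compile(r'(https?://[^/]+).*', re.I)


def robots_url(url):
    # Keep scheme + host (+ port) from group 1 and drop the rest of the URL.
    return ROBOTS_PATTERN.sub(r'\1/robots.txt', url)


print(robots_url("https://example.com/a/b?x=1"))   # https://example.com/robots.txt
print(robots_url("HTTP://Example.com:8080/page"))  # HTTP://Example.com:8080/robots.txt
print(robots_url("ftp://example.com/file"))        # ftp://example.com/file
```

Because the pattern is not anchored with `^` and `re.sub` searches the whole string, a non-http(s) URL is returned unchanged, but an `http(s)://` URL embedded later in a scheme-less string would be rewritten.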

◆ robotsFileDir

`dc_crawler.RobotsParser.RobotsParser.robotsFileDir`

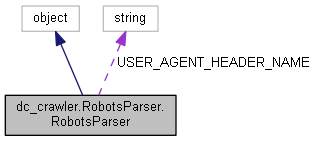

◆ USER_AGENT_HEADER_NAME

`string dc_crawler.RobotsParser.RobotsParser.USER_AGENT_HEADER_NAME = "User-Agent"` (static)

The documentation for this class was generated from the following file:

- RobotsParser.py