Functions

- `def process(input_data)`
- `def getContent(url)`

Variables

- `filename`
- `filemode`
- `logger = logging.getLogger("search_engine")`
- `output = process(input_url)`

Detailed Description

HCE project, Python bindings, Distributed Tasks Manager application. Event objects definitions.

- @package: dc
- @file prepairer.py
- @author Oleksii <developers.hce@gmail.com>
- @link: http://hierarchical-cluster-engine.com/
- @copyright: Copyright © 2013-2014 IOIX Ukraine
- @license: http://hierarchical-cluster-engine.com/license/
- @since: 0.1

Function Documentation

◆ getContent()

`def search_engine_parser.getContent(url)`

Definition at line 62 of file search_engine_parser.py.

Source excerpt (lines 63-65 of search_engine_parser.py):

```python
# wget -S --no-check-certificate -U "Mozilla/5.0 (Windows; U; Windows NT 5.1; de; rv:1.9.2.3) Gecko/20100401 Firefox/3.6.3" "https://www.google.com/search?q=mac+os"
# -qO- writes the downloaded page to stdout; -U sets the User-Agent header.
cmd = "wget -qO- -S --no-check-certificate -U 'Mozilla/5.0 (Windows; U; Windows NT 5.1; de; rv:1.9.2.3) Gecko/20100401 Firefox/3.6.3' '" + url + "'"
# cmd = "wget -qO- -S --no-check-certificate -U 'Mozilla/5.0 (Windows; U; Windows NT 5.1; de; rv:1.9.2.3) Gecko/20100401 Firefox/3.6.3' 'https://www.google.com/search?q=mac+os'"
```
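The excerpt builds one shell string and wraps `url` in single quotes, so a URL that itself contains a `'` would break out of the quoting. The excerpt does not show how `cmd` is executed; assuming it is handed to a shell, a safer sketch passes the same wget flags as an argument list through `subprocess.run`, which involves no shell parsing. The names `build_wget_argv`, `get_content`, `USER_AGENT` and the `runner` parameter are hypothetical and not part of the module, and the sketch assumes `wget` is on `PATH`.

```python
import subprocess
from typing import Callable, List

# User-Agent string copied from the original command.
USER_AGENT = ("Mozilla/5.0 (Windows; U; Windows NT 5.1; de; rv:1.9.2.3) "
              "Gecko/20100401 Firefox/3.6.3")


def build_wget_argv(url: str) -> List[str]:
    """Hypothetical helper: the original wget flags as an argv list.

    Because no shell is involved, the URL needs no quoting at all.
    """
    return ["wget", "-qO-", "-S", "--no-check-certificate", "-U", USER_AGENT, url]


def get_content(url: str, runner: Callable = subprocess.run) -> str:
    """Hypothetical stand-in for getContent(): run wget and return the page body.

    The body arrives on stdout (-O-); anything wget logs goes to stderr and is
    captured but ignored. check=True raises CalledProcessError on a non-zero exit.
    """
    result = runner(build_wget_argv(url), capture_output=True,
                    encoding="utf-8", errors="replace", check=True)
    return result.stdout
```

For example, `get_content("https://www.google.com/search?q=mac+os")` returns the page as a string (network access required). The `runner` parameter only exists so the command can be inspected without spawning wget.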

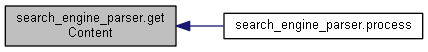

Here is the caller graph for this function:

◆ process()

`def search_engine_parser.process(input_data)`

Definition at line 38 of file search_engine_parser.py.

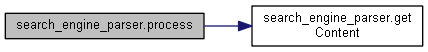

Here is the call graph for this function:

Variable Documentation

◆ filemode

`search_engine_parser.filemode`

Definition at line 33 of file search_engine_parser.py.

◆ filename

`search_engine_parser.filename`

Definition at line 33 of file search_engine_parser.py.

◆ logger

`search_engine_parser.logger = logging.getLogger("search_engine")`

Definition at line 34 of file search_engine_parser.py.
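`filename` and `filemode` are both reported as defined on line 33, directly above `logger` on line 34. That pattern suggests they may be keyword arguments to a single module-level call, plausibly `logging.basicConfig(filename=..., filemode=...)`, rather than standalone variables; this is an inference from the line numbers only, and the page does not confirm it. A minimal sketch of such a setup, where `configure_logging` and the log path are hypothetical:

```python
import logging


def configure_logging(filename: str, filemode: str = "a") -> logging.Logger:
    """Hypothetical sketch: route log records to `filename`, return the module logger.

    filemode "w" truncates the file on each run; "a" (the logging default) appends.
    force=True (Python 3.8+) replaces any handlers already attached to the root logger.
    """
    logging.basicConfig(filename=filename, filemode=filemode,
                        level=logging.DEBUG, force=True)
    # Same logger name as the module-level `logger` documented above.
    return logging.getLogger("search_engine")
```

Usage: `logger = configure_logging("search_engine.log", "w")`. Records logged through `logger` propagate to the root logger's file handler.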

◆ output

`search_engine_parser.output = process(input_url)`

Definition at line 86 of file search_engine_parser.py.