Definition at line 30 of file CrawlingOptimiser.py.

◆ __init__()

| def dc_co.CrawlingOptimiser.CrawlingOptimiser.__init__(self) |

Definition at line 41 of file CrawlingOptimiser.py.

43 foundation.CementApp.__init__(self)

44 self.exit_code = CONSTS.EXIT_SUCCESS

46 self.message_queue = []

49 self.recrawl_dict = {}

50 self.site_features = {}

51 self.local_wrapper = None

52 self.remote_wrapper = None

53 self.remote_host = None

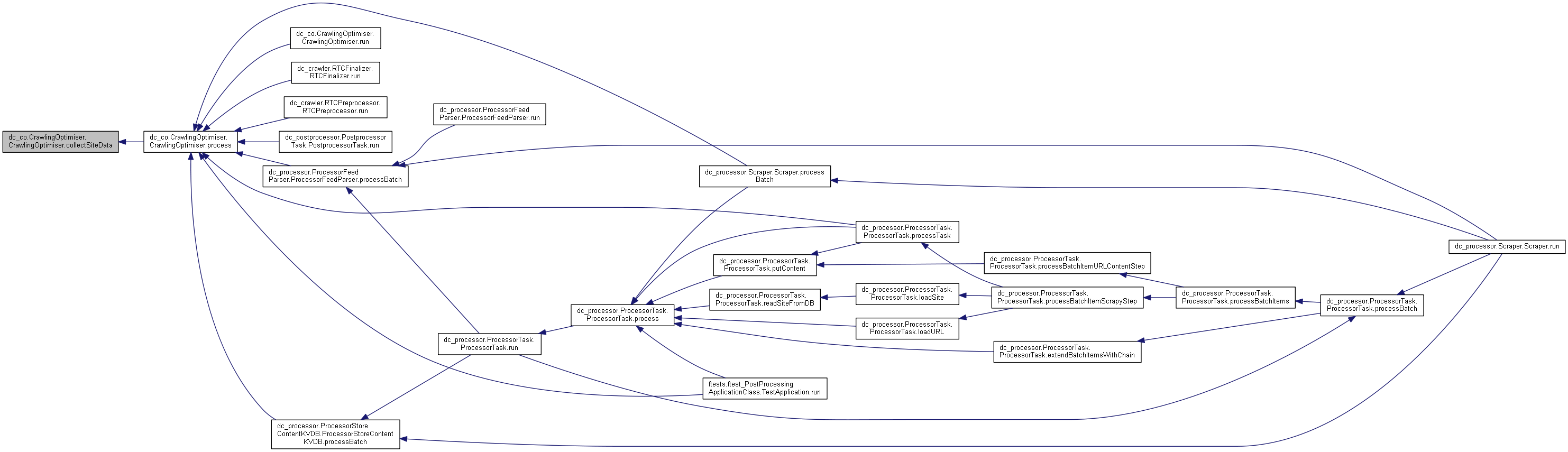

◆ collectSiteData()

| def dc_co.CrawlingOptimiser.CrawlingOptimiser.collectSiteData(self) |

Definition at line 172 of file CrawlingOptimiser.py.

172 def collectSiteData(self):

174 if self.site_id is not None:

177 query = CONSTS.SQL_QUERY_NEW_URLS % (self.site_id, self.site_id, self.site_id)

178 response = self.remote_wrapper.customRequest(query, CONSTS.DB_URLS)

179 if response is not None:

180 self.logger.info("response: %s" % str(response))

181 site_data_dict["Contents"] = response[0][0]

182 site_data_dict["LastAdded"] = response[0][1]

183 site_data_dict["minPDate"] = response[0][2]

184 site_data_dict["maxPDate"] = response[0][3]

187 query = CONSTS.SQL_QUERY_RECRAWL_PERIOD_START % (self.site_id)

188 response = self.remote_wrapper.customRequest(query, CONSTS.DB_URLS)

189 if response is not None:

190 self.logger.info("response: %s" % str(response))

191 site_data_dict["RecrawlStart"] = response[0][0]

194 query = CONSTS.SQL_QUERY_RECRAWL_PERIOD_END % (self.site_id)

195 response = self.remote_wrapper.customRequest(query, CONSTS.DB_URLS)

196 if response is not None:

197 self.logger.info("response: %s" % str(response))

198 site_data_dict["RecrawlEnd"] = response[0][0]

200 except Exception, err:

201 self.logger.error(CONSTS.MSG_ERROR_COLLECT_SITE_DATA + ' ' + str(err))

202 self.logger.info("site_data_dict: %s" % str(site_data_dict))

203 return site_data_dict

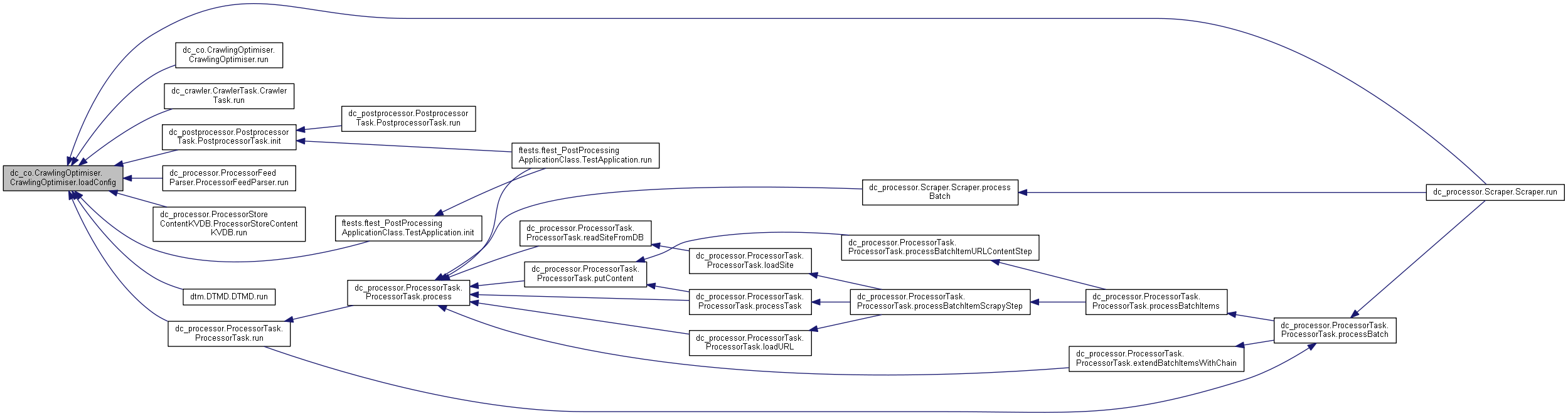
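The row-to-dictionary mapping in `collectSiteData()` can be looked at in isolation. The following is a minimal Python 3 sketch, not the project's code: `row_to_site_data` and `SITE_DATA_KEYS` are hypothetical names, and the response is assumed to be a sequence of rows whose first row holds the four columns in the order the listing indexes them. Unlike the listing, the sketch returns an empty dictionary for an empty result instead of relying on the surrounding `except`.

```python
# Column order assumed from the listing: response[0][0] .. response[0][3].
SITE_DATA_KEYS = ("Contents", "LastAdded", "minPDate", "maxPDate")


def row_to_site_data(response, keys=SITE_DATA_KEYS):
    """Map the first row of a query response onto named keys.

    Returns an empty dict when the response is None or has no rows,
    so the caller never indexes into a missing result.
    """
    if not response:
        return {}
    first_row = response[0]
    return dict(zip(keys, first_row))


# Hypothetical response shaped like a tuple of row tuples.
sample_response = ((12, "2014-05-01 10:00:00", "2014-04-01", "2014-04-30"),)
site_data = row_to_site_data(sample_response)
empty = row_to_site_data(None)
```

Naming the columns once in a tuple keeps the key order and the query's column order visible side by side.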

◆ loadConfig()

| def dc_co.CrawlingOptimiser.CrawlingOptimiser.loadConfig(self) |

Definition at line 89 of file CrawlingOptimiser.py.

91 self.config = ConfigParser.ConfigParser()

92 self.config.optionxform = str

96 self.config.read(self.pargs.config)

97 self.message_queue.append(CONSTS.MSG_INFO_LOAD_CONFIG_FILE + str(self.pargs.config))

99 self.config.read(CONSTS.DEFAULT_CFG_FILE)

100 self.message_queue.append(CONSTS.MSG_INFO_LOAD_DEFAULT_CONFIG_FILE + CONSTS.DEFAULT_CFG_FILE)

104 self.site_id = self.pargs.site

105 self.message_queue.append(CONSTS.MSG_INFO_LOAD_SITE_ID + str(self.pargs.site))

107 self.site_id = CONSTS.SITE_ALL

108 self.message_queue.append(CONSTS.MSG_INFO_LOAD_DEFAULT_SITE_ID + str(CONSTS.SITE_ALL))

110 except Exception, err:

111 print CONSTS.MSG_ERROR_LOAD_CONFIG, err.message

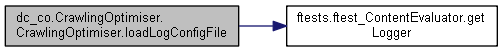

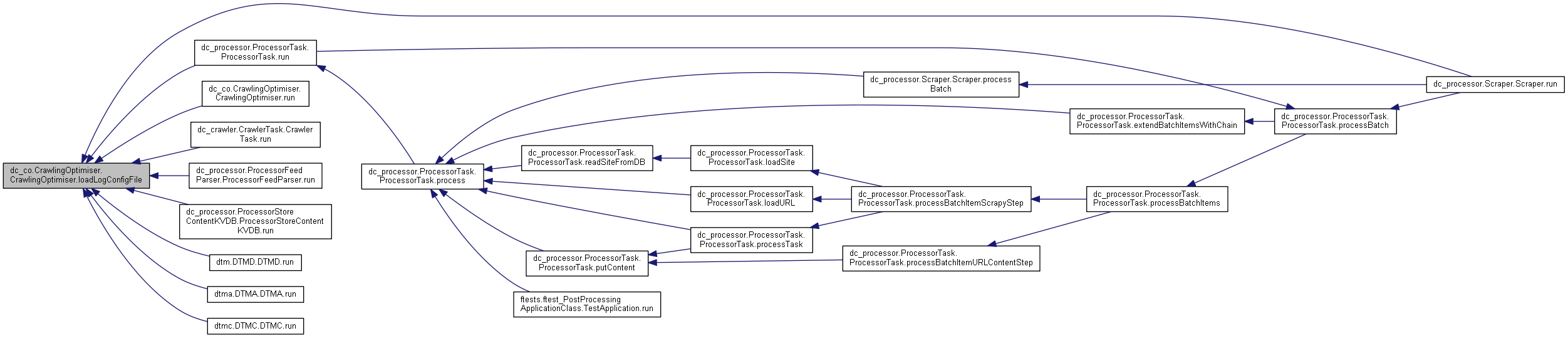
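Line 92 of the listing assigns `str` to `optionxform`, which stops the parser from lowercasing option names. A minimal sketch of that effect follows, assuming Python 3's `configparser` (the listing uses the Python 2 `ConfigParser` module); the ini content and the helper `option_names` are hypothetical.

```python
import configparser

# Hypothetical ini content with a mixed-case option name.
SAMPLE_INI = """\
[Application]
LogFile = example_log.ini
"""


def option_names(text, preserve_case):
    """Parse ini text and return the option names of [Application]."""
    parser = configparser.ConfigParser()
    if preserve_case:
        # Same override as in the listing: keep option names as written.
        parser.optionxform = str
    parser.read_string(text)
    return parser.options("Application")


lowercased = option_names(SAMPLE_INI, preserve_case=False)
preserved = option_names(SAMPLE_INI, preserve_case=True)
```

Without the override, a later lookup would have to use the lowercased name; with it, the names in the file and in the code match exactly.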

◆ loadLogConfigFile()

| def dc_co.CrawlingOptimiser.CrawlingOptimiser.loadLogConfigFile(self) |

Definition at line 118 of file CrawlingOptimiser.py.

121 log_conf_file = self.config.get("Application", "log")

122 logging.config.fileConfig(log_conf_file)

123 self.logger = Utils.MPLogger().getLogger()

124 except Exception, err:

125 print CONSTS.MSG_ERROR_LOAD_LOG_CONFIG_FILE, err.message

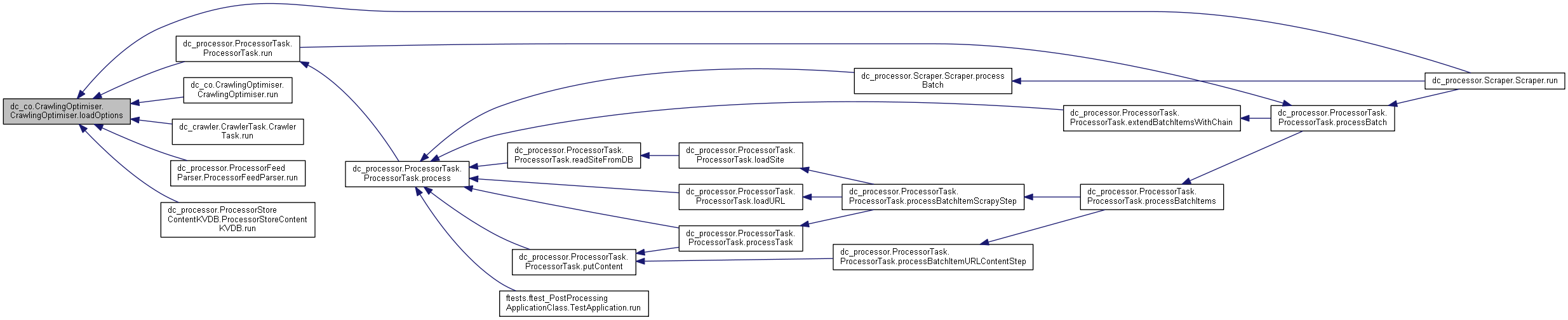
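`loadLogConfigFile()` hands an ini file to `logging.config.fileConfig`. The sketch below shows what such a file can look like and how it is applied. It is an illustration under assumptions: the ini content is hypothetical, `configure_logging` is not a project function, and the standard `logging.getLogger()` stands in for the project-specific `Utils.MPLogger().getLogger()`.

```python
import logging
import logging.config
import os
import tempfile

# Hypothetical logging ini in the format logging.config.fileConfig expects.
LOG_INI = """\
[loggers]
keys=root

[handlers]
keys=console

[formatters]
keys=plain

[logger_root]
level=INFO
handlers=console

[handler_console]
class=StreamHandler
level=INFO
formatter=plain
args=(sys.stderr,)

[formatter_plain]
format=%(asctime)s %(levelname)s %(message)s
"""


def configure_logging(log_conf_file):
    """Apply a fileConfig-style ini file and return the root logger."""
    logging.config.fileConfig(log_conf_file)
    return logging.getLogger()


# Write the sample ini to a temporary file so the sketch is self-contained.
with tempfile.NamedTemporaryFile("w", suffix=".ini", delete=False) as handle:
    handle.write(LOG_INI)
    ini_path = handle.name

try:
    logger = configure_logging(ini_path)
    logger.info("logging configured from %s", ini_path)
finally:
    os.unlink(ini_path)
```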

◆ loadOptions()

| def dc_co.CrawlingOptimiser.CrawlingOptimiser.loadOptions(self) |

Definition at line 132 of file CrawlingOptimiser.py.

135 remote_db_task_ini = self.config.get(self.__class__.__name__, "db-task_ini_remote")

136 remote_cfgParser = ConfigParser.ConfigParser()

137 remote_cfgParser.read(remote_db_task_ini)

138 self.remote_wrapper = DBTasksWrapper(remote_cfgParser)

139 self.remote_host = remote_cfgParser.get("TasksManager", "db_host")

141 local_db_task_ini = self.config.get(self.__class__.__name__, "db-task_ini_local")

142 local_cfgParser = ConfigParser.ConfigParser()

143 local_cfgParser.read(local_db_task_ini)

144 self.local_wrapper = DBTasksWrapper(local_cfgParser)

145 except Exception, err:

146 self.logger.error(CONSTS.MSG_ERROR_LOAD_LOG_CONFIG_FILE)

147 self.logger.error(str(err.message))

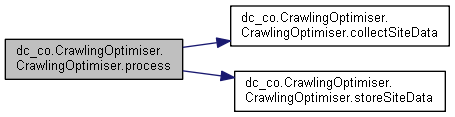

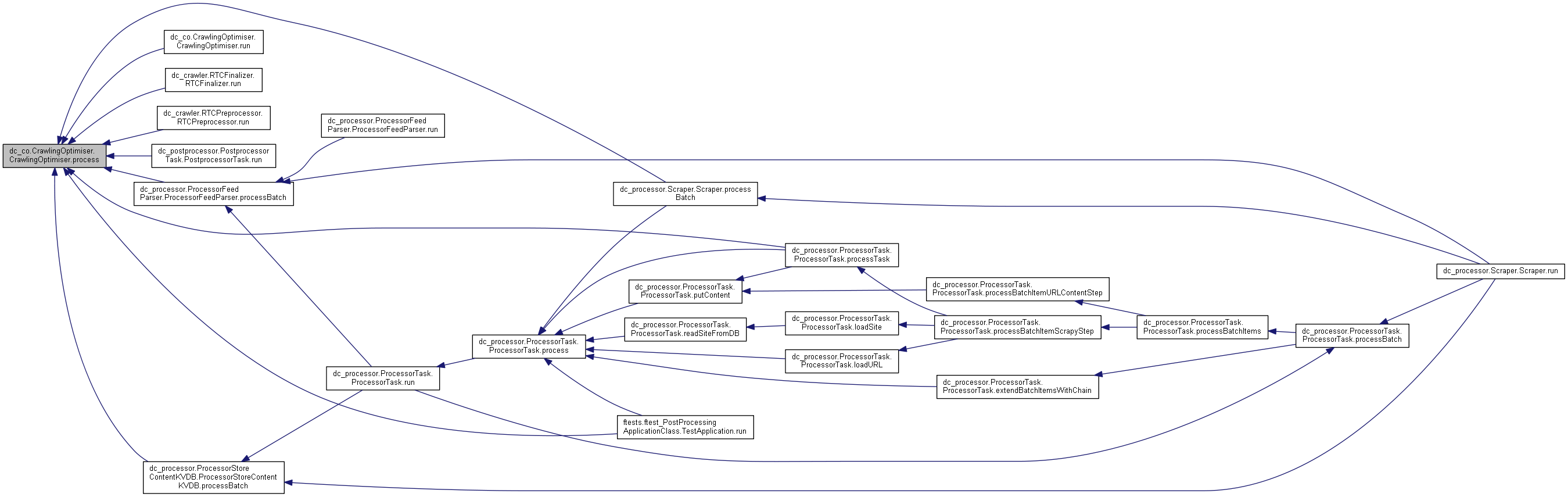
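`loadOptions()` resolves its database settings in two steps: the main configuration names a second ini file, and that file is parsed for the `TasksManager` section. A minimal Python 3 sketch of the two-step lookup follows. The file names, the host value, and the helper `load_remote_host` are hypothetical, and the project-specific `DBTasksWrapper` is left out.

```python
import configparser
import os
import tempfile


def load_remote_host(main_path, section):
    """Follow the ini path stored in the main config and read db_host."""
    # interpolation=None keeps '%' in file paths from being interpreted.
    main_cfg = configparser.ConfigParser(interpolation=None)
    main_cfg.read(main_path)
    remote_ini = main_cfg.get(section, "db-task_ini_remote")

    remote_cfg = configparser.ConfigParser(interpolation=None)
    remote_cfg.read(remote_ini)
    return remote_cfg.get("TasksManager", "db_host")


with tempfile.TemporaryDirectory() as workdir:
    # Hypothetical per-database ini file.
    db_ini_path = os.path.join(workdir, "db-task_remote.ini")
    with open(db_ini_path, "w") as handle:
        handle.write("[TasksManager]\ndb_host = db.example.invalid\n")

    # Hypothetical main config; the section is named after the class.
    main_ini_path = os.path.join(workdir, "main.ini")
    with open(main_ini_path, "w") as handle:
        handle.write("[CrawlingOptimiser]\n")
        handle.write("db-task_ini_remote = %s\n" % db_ini_path)

    remote_host = load_remote_host(main_ini_path, "CrawlingOptimiser")
```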

◆ process()

| def dc_co.CrawlingOptimiser.CrawlingOptimiser.process(self) |

Definition at line 154 of file CrawlingOptimiser.py.

156 for msg in self.message_queue:

157 self.logger.info(msg)

159 if self.site_id is not None:

162 self.recrawl_dict[self.site_id] = self.collectSiteData()

163 self.logger.info("self.recrawl_dict: %s" % str(self.recrawl_dict))

166 except Exception, err:

167 self.logger.error(CONSTS.MSG_ERROR_PROCESS_GENERAL + ' ' + str(err))

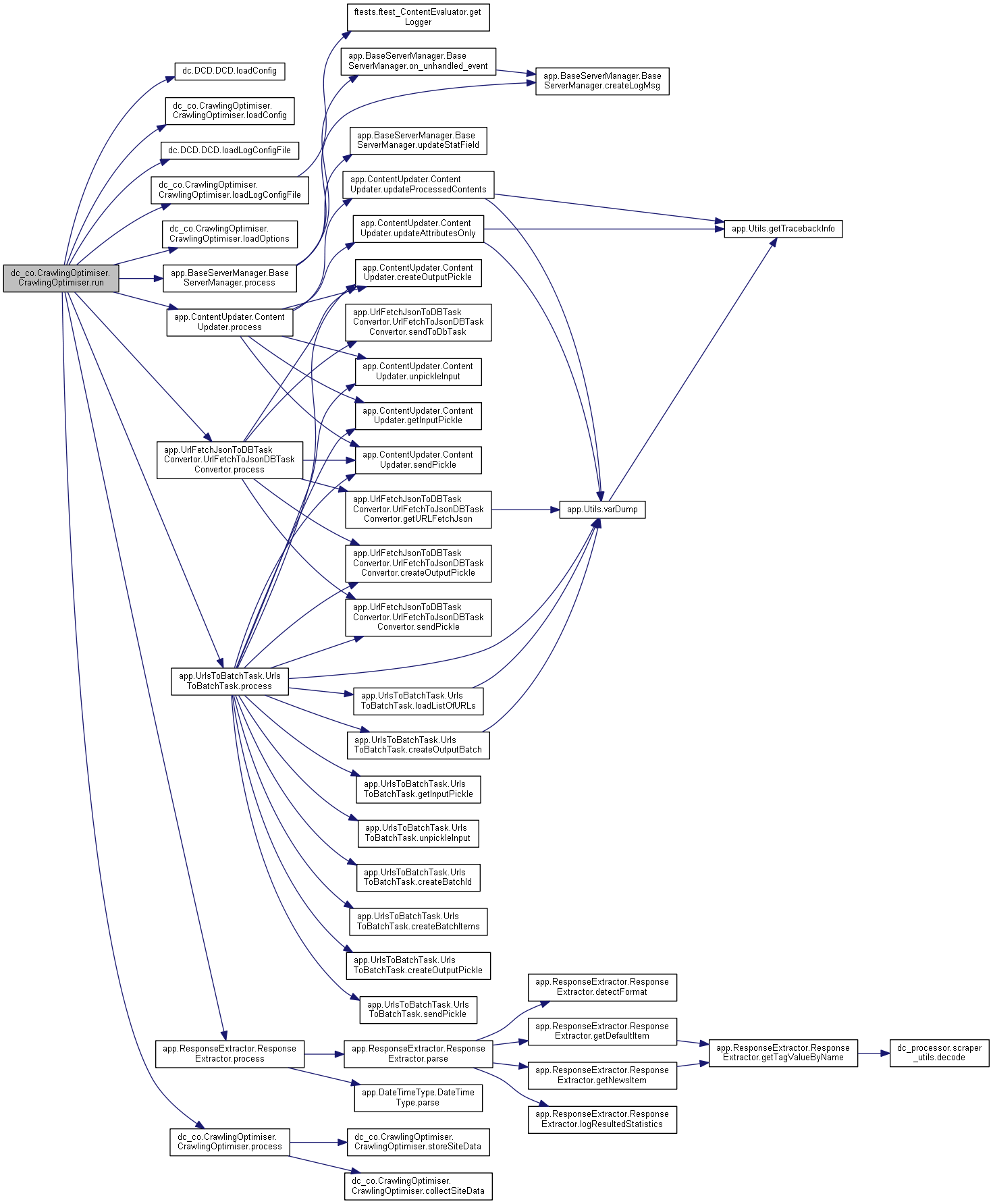
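`process()` starts by replaying `message_queue`, which `loadConfig()` fills with informational messages. Presumably the queue exists because those messages are produced before a logger is available. The pattern can be sketched as follows in Python 3; `ListHandler`, the logger name, and the message texts are hypothetical.

```python
import logging


class ListHandler(logging.Handler):
    """Collect formatted records in a list so the replay is observable."""

    def __init__(self):
        super().__init__()
        self.lines = []

    def emit(self, record):
        self.lines.append(self.format(record))


# Messages produced before any logger exists are only queued.
message_queue = []
message_queue.append("Config file loaded: example.ini")
message_queue.append("Site id loaded: example_site")

# Later, once logging is configured, the queue is replayed in order.
handler = ListHandler()
logger = logging.getLogger("crawling_optimiser_sketch")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False  # keep the sketch's output out of the root logger

for msg in message_queue:
    logger.info(msg)
```

Replaying in list order preserves the sequence in which the configuration steps ran, even though the log timestamps reflect the replay time.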

◆ run()

| def dc_co.CrawlingOptimiser.CrawlingOptimiser.run(self) |

Definition at line 66 of file CrawlingOptimiser.py.

68 foundation.CementApp.run(self)

74 self.loadLogConfigFile()

83 self.logger.info(APP_CONSTS.LOGGER_DELIMITER_LINE)

◆ setup()

| def dc_co.CrawlingOptimiser.CrawlingOptimiser.setup(self) |

Definition at line 57 of file CrawlingOptimiser.py.

59 foundation.CementApp.setup(self)

60 self.args.add_argument('-c', '--config', action='store', metavar='config_file', help='config ini-file')

61 self.args.add_argument('-s', '--site', action='store', metavar='site alias', help='site alias')

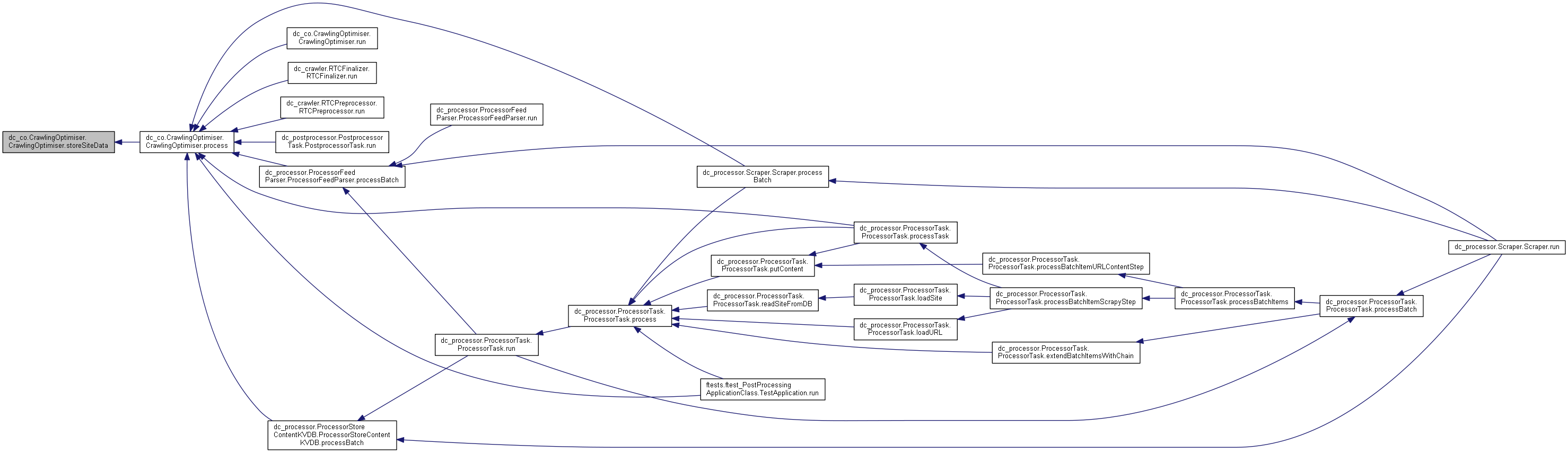
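The two options registered in `setup()` behave like ordinary `argparse` options. The sketch below reproduces them on a plain `argparse.ArgumentParser`, on the assumption that it behaves like the application's `self.args` handler for these calls; the program name and the argument values are hypothetical.

```python
import argparse

# A plain parser stands in for the application's self.args (assumption).
parser = argparse.ArgumentParser(prog="crawling-optimiser-sketch")
parser.add_argument('-c', '--config', action='store',
                    metavar='config_file', help='config ini-file')
parser.add_argument('-s', '--site', action='store',
                    metavar='site alias', help='site alias')

# Both options given on the command line.
pargs = parser.parse_args(['-c', 'example.ini', '-s', 'example_site'])

# Neither option given: both attributes default to None.
no_args = parser.parse_args([])
```

The `None` defaults match the fallbacks in `loadConfig()`, which reads `CONSTS.DEFAULT_CFG_FILE` and sets the site to `CONSTS.SITE_ALL` on its alternative path.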

◆ storeSiteData()

| def dc_co.CrawlingOptimiser.CrawlingOptimiser.storeSiteData(self) |

Definition at line 208 of file CrawlingOptimiser.py.

208 def storeSiteData(self):

212 query = CONSTS.SQL_QUERY_NEW_SITE_TABLE % (self.site_id)

213 response = self.local_wrapper.customRequest(query, CONSTS.DB_CO)

214 if response is not None:

215 self.logger.info("response: %s" % str(response))

218 query = CONSTS.SQL_QUERY_INSERT_SITE_DATA % \

221 self.recrawl_dict[self.site_id]["Contents"], \

222 self.recrawl_dict[self.site_id]["RecrawlStart"], \

223 self.recrawl_dict[self.site_id]["RecrawlEnd"], \

224 self.recrawl_dict[self.site_id]["minPDate"], \

225 self.recrawl_dict[self.site_id]["maxPDate"], \

226 self.recrawl_dict[self.site_id]["LastAdded"], \

227 self.recrawl_dict[self.site_id]["Contents"], \

228 self.recrawl_dict[self.site_id]["LastAdded"], \

229 self.recrawl_dict[self.site_id]["minPDate"], \

230 self.recrawl_dict[self.site_id]["maxPDate"])

231 response = self.local_wrapper.customRequest(query, CONSTS.DB_CO)

232 if response is not None:

233 self.logger.info("response: %s" % str(response))

235 self.logger.info("Zero contents.")

236 except Exception, err:

237 self.logger.error(CONSTS.MSG_ERROR_STORE_SITE_DATA + ' ' + str(err))
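The query in `storeSiteData()` is built with positional `%` formatting, so `Contents`, `LastAdded`, `minPDate` and `maxPDate` each have to be passed twice. One possible alternative is mapping-based `%` formatting, where each value is named once. This is only a sketch: `INSERT_TEMPLATE` is a hypothetical stand-in (the real `CONSTS.SQL_QUERY_INSERT_SITE_DATA` is not shown in the listing), and `build_insert_query` is not a project function.

```python
# Hypothetical template; the real query text is not part of the listing.
INSERT_TEMPLATE = (
    "INSERT INTO `%(site_id)s` (Contents, LastAdded) "
    "VALUES (%(Contents)s, '%(LastAdded)s') "
    "ON DUPLICATE KEY UPDATE "
    "Contents = %(Contents)s, LastAdded = '%(LastAdded)s'"
)


def build_insert_query(site_id, site_data, template=INSERT_TEMPLATE):
    """Fill a named-placeholder template from one site's data dict."""
    values = dict(site_data)  # copy so the caller's dict is not modified
    values["site_id"] = site_id
    # Keys the template does not mention are simply ignored.
    return template % values


recrawl_dict = {
    "example_site": {
        "Contents": 12,
        "LastAdded": "2014-05-01 10:00:00",
        "minPDate": "2014-04-01",
        "maxPDate": "2014-04-30",
    }
}
query = build_insert_query("example_site", recrawl_dict["example_site"])
```

With named placeholders a value that appears several times in the statement is still supplied once, which removes the risk of the argument tuple drifting out of order.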

◆ config

| dc_co.CrawlingOptimiser.CrawlingOptimiser.config |

◆ exit_code

| dc_co.CrawlingOptimiser.CrawlingOptimiser.exit_code |

◆ local_wrapper

| dc_co.CrawlingOptimiser.CrawlingOptimiser.local_wrapper |

◆ logger

| dc_co.CrawlingOptimiser.CrawlingOptimiser.logger |

◆ message_queue

| dc_co.CrawlingOptimiser.CrawlingOptimiser.message_queue |

◆ recrawl_dict

| dc_co.CrawlingOptimiser.CrawlingOptimiser.recrawl_dict |

◆ remote_host

| dc_co.CrawlingOptimiser.CrawlingOptimiser.remote_host |

◆ remote_wrapper

| dc_co.CrawlingOptimiser.CrawlingOptimiser.remote_wrapper |

◆ site_features

| dc_co.CrawlingOptimiser.CrawlingOptimiser.site_features |

◆ site_id

| dc_co.CrawlingOptimiser.CrawlingOptimiser.site_id |

The documentation for this class was generated from the following file: