Classes

| class | Meta |

| class | ScraperCustomJson |

Functions

| def | __init__ (self, usageModel=APP_CONSTS.APP_USAGE_MODEL_PROCESS, configFile=None, logger=None, inputData=None) |

| def | setup (self) |

| def | run (self) |

| def | loadConfig (self) |

| def | loadLogConfigFile (self) |

| def | loadOptions (self) |

| def | loadScraperProperties (self) |

| def | processBatch (self) |

| def | loadExtractors (self) |

| def | createModule (self, module_name) |

| def | getNextBestExtractor (self) |

| def | resourceExtraction (self, jsonElem) |

| def | formatOutpuElement (self, elem, localOutputFormat) |

| def | formatOutputData (self, response, localOutputFormat) |

| def | jsonParserExtractor (self, jsonElem) |

| def | getProcessedContent (self, result) |

| def | fillScraperResponse (self, jsonElem) |

| def | generateEmptyResponse (self) |

| def | jsonParserProcess (self) |

| def | getExitCode (self) |

Variables

| int | ERROR_OK = 0 |

| int | EXIT_SUCCESS = 0 |

| int | EXIT_FAILURE = 1 |

| string | MSG_ERROR_LOAD_EXTRACTORS = "Error load extractors " |

| string | ENV_SCRAPER_STORE_PATH = "ENV_SCRAPER_STORE_PATH" |

| list | TAGS_DATETIME_NEWS_NAMES = [CONSTS.TAG_PUB_DATE, CONSTS.TAG_DC_DATE] |

| string | MSG_ERROR_WRONG_CONFIG_FILE_NAME = "Config file name is wrong" |

| list | TAGS_DATETIME_TEMPLATE_TYPES = [CONSTS.TAG_TYPE_DATETIME] |

| string | OPTION_SECTION_DATETIME_TEMPLATE_TYPES = 'tags_datetime_template_types' |

| exitCode | |

| usageModel | |

| configFile | |

| logger | |

| input_data | |

| properties | |

| extractor | |

| extractors | |

| itr | |

| pubdate | |

| timezone | |

| errorMask | |

| scraperPropFileName | |

| algorithm_name | |

| scraperResponses | |

| tagsCount | |

| tagsMask | |

| processedContent | |

| outputFormat | |

| metrics | |

| altTagsMask | |

| urlHost | |

| output_data | |

| dbWrapper | |

| datetimeTemplateTypes | |

| useCurrentYear | |

| config | |

Function Documentation

◆ __init__()

| def dc_processor.ScraperCustomJson.__init__ | ( | self, usageModel = APP_CONSTS.APP_USAGE_MODEL_PROCESS, configFile = None, logger = None, inputData = None | ) |

Definition at line 85 of file ScraperCustomJson.py.

◆ createModule()

| def dc_processor.ScraperCustomJson.createModule | ( | self, module_name | ) |

Definition at line 374 of file ScraperCustomJson.py.
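The reference does not say how `createModule` resolves `module_name`. A common way to turn a module name into a module object at runtime is `importlib.import_module`; the sketch below assumes that approach and uses a hypothetical free function `create_module`, not the actual method body.

```python
import importlib
import types


def create_module(module_name: str) -> types.ModuleType:
    """Hypothetical stand-in for createModule: import a module by dotted name.

    importlib raises ModuleNotFoundError (a subclass of ImportError)
    when module_name cannot be resolved.
    """
    return importlib.import_module(module_name)


if __name__ == "__main__":
    json_module = create_module("json")
    print(json_module.dumps({"ok": True}))  # prints {"ok": true}
```

Letting the `ImportError` propagate keeps the caller in charge of deciding whether a missing module is fatal.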

◆ fillScraperResponse()

| def dc_processor.ScraperCustomJson.fillScraperResponse | ( | self, jsonElem | ) |

Definition at line 566 of file ScraperCustomJson.py.

◆ formatOutpuElement()

| def dc_processor.ScraperCustomJson.formatOutpuElement | ( | self, elem, localOutputFormat | ) |

Definition at line 433 of file ScraperCustomJson.py.

◆ formatOutputData()

| def dc_processor.ScraperCustomJson.formatOutputData | ( | self, response, localOutputFormat | ) |

Definition at line 454 of file ScraperCustomJson.py.

◆ generateEmptyResponse()

| def dc_processor.ScraperCustomJson.generateEmptyResponse | ( | self | ) |

Definition at line 578 of file ScraperCustomJson.py.

◆ getExitCode()

| def dc_processor.ScraperCustomJson.getExitCode | ( | self | ) |

Definition at line 618 of file ScraperCustomJson.py.
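The class documents `EXIT_SUCCESS = 0` and `EXIT_FAILURE = 1`, and `getExitCode()` returns the `exitCode` attribute. The sketch below uses a hypothetical `FakeScraper` stand-in, because the real constructor depends on `APP_CONSTS` and other project modules; the rule that a processing failure switches the code to `EXIT_FAILURE` is an assumption for illustration.

```python
EXIT_SUCCESS = 0  # documented value of ScraperCustomJson.EXIT_SUCCESS
EXIT_FAILURE = 1  # documented value of ScraperCustomJson.EXIT_FAILURE


class FakeScraper:
    """Hypothetical stand-in that mimics only the exit-code bookkeeping."""

    def __init__(self, fail: bool = False) -> None:
        self.exitCode = EXIT_SUCCESS
        self._fail = fail

    def run(self) -> None:
        # Assumption: any processing failure switches exitCode to EXIT_FAILURE.
        if self._fail:
            self.exitCode = EXIT_FAILURE

    def getExitCode(self) -> int:
        return self.exitCode


def main(fail: bool = False) -> int:
    """Run the stand-in and return the code a script would pass to sys.exit()."""
    app = FakeScraper(fail=fail)
    app.run()
    return app.getExitCode()
```

A command-line entry point would end with `sys.exit(main())`, so the calling shell sees 0 on success and 1 on failure.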

◆ getNextBestExtractor()

| def dc_processor.ScraperCustomJson.getNextBestExtractor | ( | self | ) |

Definition at line 385 of file ScraperCustomJson.py.
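The attribute list pairs `extractors` with an `itr` attribute, which suggests, but does not confirm, that `getNextBestExtractor` walks an ordered collection of extractors. A minimal sketch under that assumption, with a hypothetical `ExtractorPicker` class, plain-string extractors, and a best-first ordering that the reference does not document:

```python
from typing import Iterator, List, Optional


class ExtractorPicker:
    """Hypothetical sketch: hand out extractors one at a time, best first."""

    def __init__(self, extractors: List[str]) -> None:
        # Assumption: the list is already sorted from best to worst.
        self.extractors = extractors
        self.itr: Iterator[str] = iter(self.extractors)

    def getNextBestExtractor(self) -> Optional[str]:
        # next() with a default returns None instead of raising StopIteration.
        return next(self.itr, None)


if __name__ == "__main__":
    picker = ExtractorPicker(["extractorA", "extractorB"])
    while (name := picker.getNextBestExtractor()) is not None:
        print(name)
```

Returning `None` at exhaustion lets a caller fall back to the next candidate in a simple loop and stop cleanly when none remain.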

◆ getProcessedContent()

| def dc_processor.ScraperCustomJson.getProcessedContent | ( | self, result | ) |

Definition at line 548 of file ScraperCustomJson.py.

◆ jsonParserExtractor()

| def dc_processor.ScraperCustomJson.jsonParserExtractor | ( | self, jsonElem | ) |

Definition at line 467 of file ScraperCustomJson.py.

◆ jsonParserProcess()

| def dc_processor.ScraperCustomJson.jsonParserProcess | ( | self | ) |

Definition at line 588 of file ScraperCustomJson.py.

◆ loadConfig()

| def dc_processor.ScraperCustomJson.loadConfig | ( | self | ) |

Definition at line 157 of file ScraperCustomJson.py.
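The `config` attribute is defined at line 159, inside `loadConfig` (line 157), and the class carries `MSG_ERROR_WRONG_CONFIG_FILE_NAME`. A plausible shape, assuming an INI-style file parsed with the standard `configparser` module; the helper name `load_config` and the choice of `ValueError` are hypothetical, and the real method's checks are not documented here.

```python
import configparser
import os

MSG_ERROR_WRONG_CONFIG_FILE_NAME = "Config file name is wrong"  # documented constant


def load_config(config_file):
    """Hypothetical sketch: validate the path, then parse it as an INI file."""
    if not isinstance(config_file, str) or not os.path.isfile(config_file):
        raise ValueError(MSG_ERROR_WRONG_CONFIG_FILE_NAME)
    config = configparser.ConfigParser()
    # read() silently skips files it cannot open, so the isfile() check above matters.
    config.read(config_file)
    return config
```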

◆ loadExtractors()

| def dc_processor.ScraperCustomJson.loadExtractors | ( | self | ) |

Definition at line 338 of file ScraperCustomJson.py.

◆ loadLogConfigFile()

| def dc_processor.ScraperCustomJson.loadLogConfigFile | ( | self | ) |

Definition at line 175 of file ScraperCustomJson.py.
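The reference does not show where `loadLogConfigFile` finds its logging setup. If it follows the standard-library convention, it would pass an INI-format file to `logging.config.fileConfig` and then fetch a logger; the helper name, the path argument, and the logger name below are hypothetical.

```python
import logging
import logging.config


def load_log_config_file(log_config_path: str, logger_name: str = "") -> logging.Logger:
    """Hypothetical sketch: configure logging from an INI file and return a logger."""
    # disable_existing_loggers=False keeps loggers created before this call working.
    logging.config.fileConfig(log_config_path, disable_existing_loggers=False)
    # An empty name returns the root logger.
    return logging.getLogger(logger_name)
```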

◆ loadOptions()

| def dc_processor.ScraperCustomJson.loadOptions | ( | self | ) |

Definition at line 189 of file ScraperCustomJson.py.

◆ loadScraperProperties()

| def dc_processor.ScraperCustomJson.loadScraperProperties | ( | self | ) |

Definition at line 223 of file ScraperCustomJson.py.

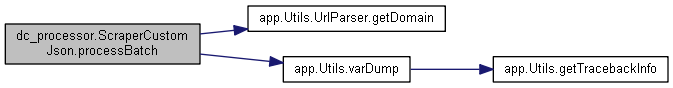

◆ processBatch()

| def dc_processor.ScraperCustomJson.processBatch | ( | self | ) |

Definition at line 235 of file ScraperCustomJson.py.

◆ resourceExtraction()

| def dc_processor.ScraperCustomJson.resourceExtraction | ( | self, jsonElem | ) |

Definition at line 394 of file ScraperCustomJson.py.

◆ run()

| def dc_processor.ScraperCustomJson.run | ( | self | ) |

◆ setup()

| def dc_processor.ScraperCustomJson.setup | ( | self | ) |

Definition at line 122 of file ScraperCustomJson.py.

Variable Documentation

◆ algorithm_name

| dc_processor.ScraperCustomJson.algorithm_name |

Definition at line 104 of file ScraperCustomJson.py.

◆ altTagsMask

| dc_processor.ScraperCustomJson.altTagsMask |

Definition at line 112 of file ScraperCustomJson.py.

◆ config

| dc_processor.ScraperCustomJson.config |

Definition at line 159 of file ScraperCustomJson.py.

◆ configFile

| dc_processor.ScraperCustomJson.configFile |

Definition at line 93 of file ScraperCustomJson.py.

◆ datetimeTemplateTypes

| dc_processor.ScraperCustomJson.datetimeTemplateTypes |

Definition at line 117 of file ScraperCustomJson.py.

◆ dbWrapper

| dc_processor.ScraperCustomJson.dbWrapper |

Definition at line 116 of file ScraperCustomJson.py.

◆ ENV_SCRAPER_STORE_PATH

| string dc_processor.ScraperCustomJson.ENV_SCRAPER_STORE_PATH = "ENV_SCRAPER_STORE_PATH" |

Definition at line 65 of file ScraperCustomJson.py.
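`ENV_SCRAPER_STORE_PATH` holds the name of an environment variable, not a path. The sketch below shows how such a constant is usually consumed through `os.environ`; the helper `scraper_store_path` and its `default` fallback are hypothetical and not documented for this class.

```python
import os
from typing import Mapping, Optional

ENV_SCRAPER_STORE_PATH = "ENV_SCRAPER_STORE_PATH"  # documented constant


def scraper_store_path(environ: Mapping[str, str] = os.environ,
                       default: Optional[str] = None) -> Optional[str]:
    """Hypothetical helper: read the store path named by ENV_SCRAPER_STORE_PATH."""
    return environ.get(ENV_SCRAPER_STORE_PATH, default)
```

Accepting the mapping as a parameter makes the lookup testable without touching the real process environment.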

◆ ERROR_OK

| int dc_processor.ScraperCustomJson.ERROR_OK = 0 |

Definition at line 57 of file ScraperCustomJson.py.

◆ errorMask

| dc_processor.ScraperCustomJson.errorMask |

Definition at line 102 of file ScraperCustomJson.py.

◆ EXIT_FAILURE

| int dc_processor.ScraperCustomJson.EXIT_FAILURE = 1 |

Definition at line 61 of file ScraperCustomJson.py.

◆ EXIT_SUCCESS

| int dc_processor.ScraperCustomJson.EXIT_SUCCESS = 0 |

Definition at line 60 of file ScraperCustomJson.py.

◆ exitCode

| dc_processor.ScraperCustomJson.exitCode |

Definition at line 91 of file ScraperCustomJson.py.

◆ extractor

| dc_processor.ScraperCustomJson.extractor |

Definition at line 97 of file ScraperCustomJson.py.

◆ extractors

| dc_processor.ScraperCustomJson.extractors |

Definition at line 98 of file ScraperCustomJson.py.

◆ input_data

| dc_processor.ScraperCustomJson.input_data |

Definition at line 95 of file ScraperCustomJson.py.

◆ itr

| dc_processor.ScraperCustomJson.itr |

Definition at line 99 of file ScraperCustomJson.py.

◆ logger

| dc_processor.ScraperCustomJson.logger |

Definition at line 94 of file ScraperCustomJson.py.

◆ metrics

| dc_processor.ScraperCustomJson.metrics |

Definition at line 111 of file ScraperCustomJson.py.

◆ MSG_ERROR_LOAD_EXTRACTORS

| string dc_processor.ScraperCustomJson.MSG_ERROR_LOAD_EXTRACTORS = "Error load extractors " |

Definition at line 63 of file ScraperCustomJson.py.

◆ MSG_ERROR_WRONG_CONFIG_FILE_NAME

| string dc_processor.ScraperCustomJson.MSG_ERROR_WRONG_CONFIG_FILE_NAME = "Config file name is wrong" |

Definition at line 72 of file ScraperCustomJson.py.

◆ OPTION_SECTION_DATETIME_TEMPLATE_TYPES

| string dc_processor.ScraperCustomJson.OPTION_SECTION_DATETIME_TEMPLATE_TYPES = 'tags_datetime_template_types' |

Definition at line 75 of file ScraperCustomJson.py.
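`OPTION_SECTION_DATETIME_TEMPLATE_TYPES` names a setting that presumably overrides the default `TAGS_DATETIME_TEMPLATE_TYPES` and populates `datetimeTemplateTypes`. A sketch assuming the setting is a comma-separated option in a `configparser` section; the section name `Application` and the placeholder default `["datetime"]` (standing in for `CONSTS.TAG_TYPE_DATETIME`, whose value is not shown here) are hypothetical.

```python
import configparser
from typing import List

OPTION_SECTION_DATETIME_TEMPLATE_TYPES = "tags_datetime_template_types"  # documented
# Hypothetical placeholder for [CONSTS.TAG_TYPE_DATETIME].
DEFAULT_DATETIME_TEMPLATE_TYPES = ["datetime"]


def datetime_template_types(config: configparser.ConfigParser,
                            section: str = "Application") -> List[str]:
    """Hypothetical sketch: read a comma-separated list, or fall back to the default."""
    # fallback=None also covers a missing section, not just a missing option.
    raw = config.get(section, OPTION_SECTION_DATETIME_TEMPLATE_TYPES, fallback=None)
    if not raw:
        return list(DEFAULT_DATETIME_TEMPLATE_TYPES)
    return [item.strip() for item in raw.split(",") if item.strip()]
```

Returning a copy of the default list prevents callers from mutating the module-level fallback by accident.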

◆ output_data

| dc_processor.ScraperCustomJson.output_data |

Definition at line 115 of file ScraperCustomJson.py.

◆ outputFormat

| dc_processor.ScraperCustomJson.outputFormat |

Definition at line 110 of file ScraperCustomJson.py.

◆ processedContent

| dc_processor.ScraperCustomJson.processedContent |

Definition at line 109 of file ScraperCustomJson.py.

◆ properties

| dc_processor.ScraperCustomJson.properties |

Definition at line 96 of file ScraperCustomJson.py.

◆ pubdate

| dc_processor.ScraperCustomJson.pubdate |

Definition at line 100 of file ScraperCustomJson.py.

◆ scraperPropFileName

| dc_processor.ScraperCustomJson.scraperPropFileName |

Definition at line 103 of file ScraperCustomJson.py.

◆ scraperResponses

| dc_processor.ScraperCustomJson.scraperResponses |

Definition at line 105 of file ScraperCustomJson.py.

◆ TAGS_DATETIME_NEWS_NAMES

| list dc_processor.ScraperCustomJson.TAGS_DATETIME_NEWS_NAMES = [CONSTS.TAG_PUB_DATE, CONSTS.TAG_DC_DATE] |

Definition at line 67 of file ScraperCustomJson.py.

◆ TAGS_DATETIME_TEMPLATE_TYPES

| list dc_processor.ScraperCustomJson.TAGS_DATETIME_TEMPLATE_TYPES = [CONSTS.TAG_TYPE_DATETIME] |

Definition at line 74 of file ScraperCustomJson.py.

◆ tagsCount

| dc_processor.ScraperCustomJson.tagsCount |

Definition at line 106 of file ScraperCustomJson.py.

◆ tagsMask

| dc_processor.ScraperCustomJson.tagsMask |

Definition at line 107 of file ScraperCustomJson.py.

◆ timezone

| dc_processor.ScraperCustomJson.timezone |

Definition at line 101 of file ScraperCustomJson.py.

◆ urlHost

| dc_processor.ScraperCustomJson.urlHost |

Definition at line 114 of file ScraperCustomJson.py.

◆ usageModel

| dc_processor.ScraperCustomJson.usageModel |

Definition at line 92 of file ScraperCustomJson.py.

◆ useCurrentYear

| dc_processor.ScraperCustomJson.useCurrentYear |

Definition at line 118 of file ScraperCustomJson.py.