def getProxy(siteProperties, siteId, url, dbProxyWrapper=None):
    # Default proxy name derived from the site properties.
    proxyName = HTTPProxyResolver.__getDefaultProxyName(siteProperties)

    userProxyJsonWrapper = HTTPProxyResolver.__getUserProxyJsonWrapper(siteProperties)
    if userProxyJsonWrapper is not None:

        fileName = HTTPProxyResolver.__makFileName(userProxyJsonWrapper.getFilePath(), siteId)
        logger.debug("Usage file name: %s", str(fileName))

        # Load the stored proxy data from the usage file.
        jsonData = HTTPProxyResolver.__readJsonFile(fileName)
        proxyJsonWrapper = ProxyJsonWrapper(jsonData)
        logger.debug("Read json from index file: %s", varDump(proxyJsonWrapper.getData()))

        # Merge in the proxies listed in the site property.
        proxyList = userProxyJsonWrapper.getProxyList()
        logger.debug("Extract proxies list from site property: %s", varDump(proxyList))
        proxyJsonWrapper.addProxyList(proxyList)

        # Merge in the enabled proxies from the database when it is the configured source.
        if (dbProxyWrapper is not None
                and userProxyJsonWrapper.getSource() == UserProxyJsonWrapper.SOURCE_DATABASE):
            enaibledProxiesList = dbProxyWrapper.getEnaibledProxies(siteId)
            logger.debug("Extract enabled proxies list from DB: %s", varDump(enaibledProxiesList))
            proxyJsonWrapper.addProxyList(enaibledProxiesList)

        # Fail if the merged proxies list is empty.
        fullProxiesList = proxyJsonWrapper.getProxyList()
        logger.debug("Full proxies list: %s", varDump(fullProxiesList))
        if len(fullProxiesList) == 0:
            raise ProxyException(message=HTTPProxyResolver.ERROR_MSG_EMPTY_PROXIES_LIST,
                                 statusUpdate=userProxyJsonWrapper.getStatusUpdateEmptyProxyList())

        # Keep only the enabled proxies and fail if none remain.
        proxyList = proxyJsonWrapper.getProxyList(ProxyJsonWrapper.PROXY_STATE_ENABLED)
        logger.debug("Only enabled proxies: %s", varDump(proxyList))
        if len(proxyList) == 0:
            raise ProxyException(message=HTTPProxyResolver.ERROR_MSG_NOT_EXIST_ANY_VALID_PROXY,
                                 statusUpdate=userProxyJsonWrapper.getStatusUpdateNoAvailableProxy())

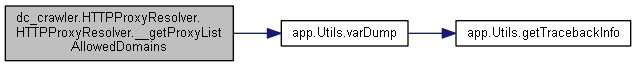

        # Keep only the proxies allowed for the domain of the requested URL.
        proxyList = HTTPProxyResolver.__getProxyListAllowedDomains(proxyList, url)
        logger.debug("Only allowed domains: %s", varDump(proxyList))

        proxyList = HTTPProxyResolver.__getProxyListAllowedLimits(proxyList)
        logger.debug("Only allowed limits: %s", varDump(proxyList))

        # Choose one proxy from the remaining candidates with the default usage algorithm.
        proxyName = HTTPProxyResolver.__usageAlgorithm(
            proxyList, algorithmType=HTTPProxyResolver.DEFAULT_USAGE_ALGORITM)
        logger.debug("Result proxy name: %s", varDump(proxyName))

        if proxyName is not None:
            # Record one more use of the selected proxy.
            proxyJsonWrapper.addFrequency(proxyName)

        # Write the JSON data back to the usage file.
        HTTPProxyResolver.__saveJsonFile(fileName, jsonData)
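
The selection flow in `getProxy` (reject an empty list, keep enabled proxies, filter by domain, filter by limits, pick one, count the use) can be tried out in isolation. The following is a minimal, self-contained sketch and not the actual `HTTPProxyResolver` code: the record layout (`state`, `domains`, `limit`, `frequency`), the names `select_proxy` and `ProxyError`, and the least-used selection rule are all assumptions made for illustration.

```python
from urllib.parse import urlparse


class ProxyError(Exception):
    """Hypothetical stand-in for ProxyException."""


def select_proxy(proxies, url):
    """Pick a proxy name for url from a dict of hypothetical proxy records.

    proxies maps a proxy name to a dict with assumed keys:
    'state' (1 = enabled), 'domains' (list of hosts, '*' = any host),
    'limit' (maximum uses, None = unlimited), 'frequency' (uses so far).
    """
    if not proxies:
        raise ProxyError("Empty proxies list")

    # Keep only enabled proxies.
    enabled = {n: p for n, p in proxies.items() if p.get("state") == 1}
    if not enabled:
        raise ProxyError("No valid proxy exists")

    # Keep proxies whose allowed domains cover the host of the URL.
    host = urlparse(url).hostname or ""
    by_domain = {
        n: p for n, p in enabled.items()
        if "*" in p.get("domains", ["*"]) or host in p.get("domains", [])
    }

    # Keep proxies that have not exhausted their usage limit.
    by_limit = {
        n: p for n, p in by_domain.items()
        if p.get("limit") is None or p.get("frequency", 0) < p["limit"]
    }
    if not by_limit:
        return None

    # Assumed usage algorithm: least-used proxy first, ties broken by name.
    name = min(by_limit, key=lambda n: (by_limit[n].get("frequency", 0), n))

    # Count this use, mirroring the addFrequency step.
    proxies[name]["frequency"] = proxies[name].get("frequency", 0) + 1
    return name
```

Returning `None` when every candidate is filtered out matches the `if proxyName is not None` guard in the listing; whether the real usage algorithm behaves this way is not confirmed by the source.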

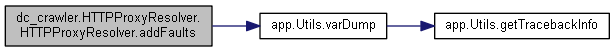

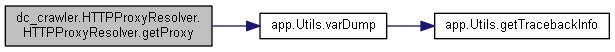

def varDump(obj, stringify=True, strTypeMaxLen=256, strTypeCutSuffix='...', stringifyType=1, ignoreErrors=False, objectsHash=None, depth=0, indent=2, ensure_ascii=False, maxDepth=10)