def __init__(self, siteProperties, dbWrapper, siteId, url=None):
    self.dbWrapper = dbWrapper
    self.source = self.SOURCE_PROPERTY
    self.proxyTuple = None
    self.proxyStruct = None
    self.internalIndexes = {}
    self.domain = UrlParser.getDomain(url) if url is not None else None
    self.indexFileName = None
    self.statusUpdateEmptyProxyList = None
    self.statusUpdateNoAvailableProxy = None
    self.statusUpdateTriesLimit = None
    self.rawContentCheckPatterns = None
    self.rawContentCheckRotate = self.RAW_CONTENT_CHECK_ROTATE_DEFAULT
    self.rawContentCheckFaults = self.RAW_CONTENT_CHECK_FAULTS_DEFAULT

    try:
        if siteProperties is not None:
            if "HTTP_PROXY_HOST" in siteProperties and "HTTP_PROXY_PORT" in siteProperties:
                self.proxyTuple = (siteProperties["HTTP_PROXY_HOST"], siteProperties["HTTP_PROXY_PORT"])
            elif "USER_PROXY" in siteProperties:
                try:
                    proxyJson = json.loads(siteProperties["USER_PROXY"])
                except Exception as excp:
                    ExceptionLog.handler(logger, excp, ">>> Bad json in USER_PROXY property: " +
                                         str(siteProperties["USER_PROXY"]))
                    # assumed fallback so the "is not None" checks below can run
                    proxyJson = None

92 if proxyJson

is not None and "source" in proxyJson:

93 self.source = int(proxyJson[

"source"])

95 if self.source == self.SOURCE_PROPERTY:

96 if "proxies" in proxyJson:

97 self.proxyStruct = proxyJson[

"proxies"]

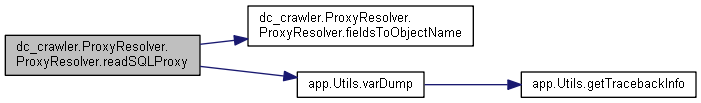

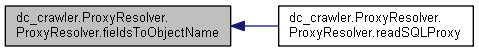

98 elif self.source == self.SOURCE_SQL:

99 self.proxyStruct = self.readSQLProxy(dbWrapper, siteId)

101 logger.debug(

'>>> self.proxyStruct: ' + str(self.proxyStruct))

                if (proxyJson is not None and "status_update_empty_proxy_list" in proxyJson and
                        self.STATUS_UPDATE_MIN_ALLOWED_VALUE
                        <= int(proxyJson["status_update_empty_proxy_list"])
                        <= self.STATUS_UPDATE_MAX_ALLOWED_VALUE):
                    self.statusUpdateEmptyProxyList = int(proxyJson["status_update_empty_proxy_list"])

                if (proxyJson is not None and "status_update_no_available_proxy" in proxyJson and
                        self.STATUS_UPDATE_MIN_ALLOWED_VALUE
                        <= int(proxyJson["status_update_no_available_proxy"])
                        <= self.STATUS_UPDATE_MAX_ALLOWED_VALUE):
                    self.statusUpdateNoAvailableProxy = int(proxyJson["status_update_no_available_proxy"])

                if (proxyJson is not None and "status_update_tries_limit" in proxyJson and
                        self.STATUS_UPDATE_MIN_ALLOWED_VALUE
                        <= int(proxyJson["status_update_tries_limit"])
                        <= self.STATUS_UPDATE_MAX_ALLOWED_VALUE):
                    self.statusUpdateTriesLimit = int(proxyJson["status_update_tries_limit"])

                if proxyJson is not None and "raw_content_check" in proxyJson:
                    rawContentCheck = proxyJson["raw_content_check"]
                    if "patterns" in rawContentCheck:
                        self.rawContentCheckPatterns = rawContentCheck["patterns"]
                        if "rotate" in rawContentCheck:
                            self.rawContentCheckRotate = int(rawContentCheck["rotate"])
                        if "faults" in rawContentCheck:
                            self.rawContentCheckFaults = int(rawContentCheck["faults"])
                    else:
                        logger.error("Mandatory parameter 'patterns' for 'raw_content_check' not found")

                if self.proxyTuple is None and proxyJson is not None:
                    if "file_path" in proxyJson:
                        self.indexFileName = proxyJson["file_path"]
                        if siteId is not None and not (self.indexFileName.find(self.JSON_SUFF) != -1 and
                                                       self.indexFileName.find(self.JSON_SUFF) ==
                                                       len(self.indexFileName) - len(self.JSON_SUFF)):
                            if self.indexFileName[-1] != '/':
                                self.indexFileName += '/'
                            self.indexFileName += siteId
                            self.indexFileName += self.JSON_SUFF
                        self.internalIndexes = self.readIndexFile(self.indexFileName)

                    if self.proxyStruct is not None:
                        for key in self.proxyStruct.keys():
                            if key in self.internalIndexes:
                                self.proxyStruct[key].update(self.internalIndexes[key])
    except Exception as err:
        ExceptionLog.handler(logger, err, ">>> ProxyResolver exception", (),
                             {ExceptionLog.LEVEL_NAME_ERROR: ExceptionLog.LEVEL_VALUE_DEBUG})
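
The constructor above repeats the same range check for three `status_update_*` keys and builds the index file path with two `find()` calls. As a minimal sketch only, the two patterns could be factored into small helpers. The names `bounded_int` and `index_file_path`, the bounds passed in, and the `".json"` default for the suffix are assumptions for illustration; they do not appear in the listing, which uses `STATUS_UPDATE_MIN_ALLOWED_VALUE`, `STATUS_UPDATE_MAX_ALLOWED_VALUE` and `JSON_SUFF` without showing their values.

```python
def bounded_int(config, key, low, high):
    """Return int(config[key]) when the key exists and low <= value <= high, else None.

    Hypothetical helper: mirrors the three status_update_* checks in the listing.
    """
    if not config or key not in config:
        return None
    try:
        value = int(config[key])
    except (TypeError, ValueError):
        # Non-numeric values are treated like out-of-range ones (an assumption).
        return None
    return value if low <= value <= high else None


def index_file_path(file_path, site_id, suffix=".json"):
    """Treat file_path as a directory unless it already ends with suffix.

    Hypothetical helper; the ".json" default stands in for JSON_SUFF,
    whose actual value is not shown in the listing.
    """
    if site_id is None or file_path.endswith(suffix):
        return file_path
    if not file_path.endswith("/"):
        file_path += "/"
    return file_path + str(site_id) + suffix
```

With helpers like these, each status assignment would reduce to a single call such as `bounded_int(proxyJson, "status_update_tries_limit", low, high)`. Note that `str.endswith` differs from the `find()`-based test when the suffix occurs more than once in the path, and it also tolerates an empty path, so this is a behavioral change rather than a pure refactoring.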

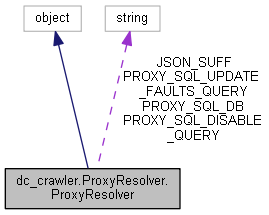
