Public Member Functions

| def | __init__ (self, dbWrapper=None, siteId=None, usageAlgorithm=DEFAULT_USAGE_ALGORITHM, redirectCodes=None) |

| def | request (self, url, method, timeout, headers, allowRedirects, proxySetting, auth, data, maxRedirects, filters) |

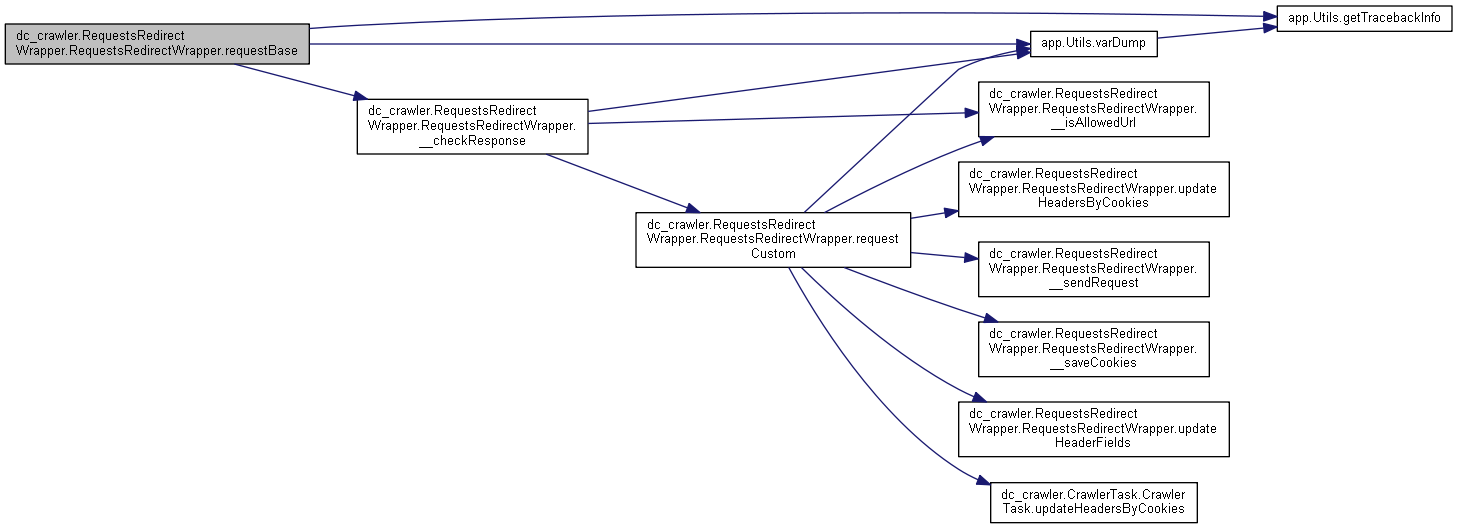

| def | requestBase (self, url, method, timeout, headers, allowRedirects, proxySetting, auth, data, maxRedirects, filters) |

| def | requestCustom (self, url, method, timeout, headers, allowRedirects, proxySetting, auth, data, maxRedirects, filters) |

Static Public Member Functions

| def | updateHeaderFields (headers) |

| def | updateHeadersByCookies (headers, url, cookieResolver, stage=HTTPCookieResolver.STAGE_DEFAULT) |

| def | checkRedirectMax (handler, args, kwargs) |

| def | checkRedirect (r, args, kwargs) |

Public Attributes

| dbWrapper | |

| siteId | |

| usageAlgorithm | |

| redirectCodes | |

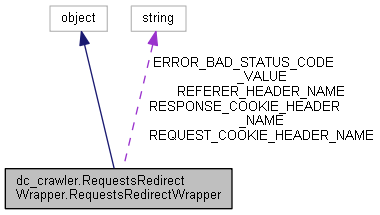

Static Public Attributes

| string | REQUEST_COOKIE_HEADER_NAME = 'Cookie' |

| string | RESPONSE_COOKIE_HEADER_NAME = 'set-cookie' |

| string | REFERER_HEADER_NAME = 'Referer' |

| int | USAGE_ALGORITHM_BASE = 0 |

| int | USAGE_ALGORITHM_CUSTOM = 1 |

| int | DEFAULT_USAGE_ALGORITHM = USAGE_ALGORITHM_BASE |

| string | ERROR_BAD_STATUS_CODE_VALUE = "Not allowed status code '%s'. Allowed list: %s" |

Private Member Functions

| def | __checkResponse (self, res, filters) |

| def | __sendRequest (self, url, method, timeout, headers, proxySetting, auth, data, maxRedirects) |

| def | __isAllowedUrl (self, url, inputFilters=None) |

| def | __saveCookies (self, url, res, cookieResolver) |

Detailed Description

Definition at line 32 of file RequestsRedirectWrapper.py.

Constructor & Destructor Documentation

◆ __init__()

def dc_crawler.RequestsRedirectWrapper.RequestsRedirectWrapper.__init__(self, dbWrapper=None, siteId=None, usageAlgorithm=DEFAULT_USAGE_ALGORITHM, redirectCodes=None)

Definition at line 47 of file RequestsRedirectWrapper.py.

Member Function Documentation

◆ __checkResponse()

def dc_crawler.RequestsRedirectWrapper.RequestsRedirectWrapper.__checkResponse(self, res, filters) [private]

Definition at line 166 of file RequestsRedirectWrapper.py.

◆ __isAllowedUrl()

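def dc_crawler.RequestsRedirectWrapper.RequestsRedirectWrapper.__isAllowedUrl(self, url, inputFilters=None) [private]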

Definition at line 312 of file RequestsRedirectWrapper.py.

◆ __saveCookies()

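def dc_crawler.RequestsRedirectWrapper.RequestsRedirectWrapper.__saveCookies(self, url, res, cookieResolver) [private]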

Definition at line 374 of file RequestsRedirectWrapper.py.

◆ __sendRequest()

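def dc_crawler.RequestsRedirectWrapper.RequestsRedirectWrapper.__sendRequest(self, url, method, timeout, headers, proxySetting, auth, data, maxRedirects) [private]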

Definition at line 254 of file RequestsRedirectWrapper.py.

◆ checkRedirect()

def dc_crawler.RequestsRedirectWrapper.RequestsRedirectWrapper.checkRedirect(r, args, kwargs) [static]

Definition at line 426 of file RequestsRedirectWrapper.py.

◆ checkRedirectMax()

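def dc_crawler.RequestsRedirectWrapper.RequestsRedirectWrapper.checkRedirectMax(handler, args, kwargs) [static]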

Definition at line 411 of file RequestsRedirectWrapper.py.

◆ request()

def dc_crawler.RequestsRedirectWrapper.RequestsRedirectWrapper.request(self, url, method, timeout, headers, allowRedirects, proxySetting, auth, data, maxRedirects, filters)

Definition at line 66 of file RequestsRedirectWrapper.py.
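The signature of request() matches requestBase() and requestCustom(), and the class exposes a usageAlgorithm attribute with the values USAGE_ALGORITHM_BASE and USAGE_ALGORITHM_CUSTOM. This suggests that request() may select between the two implementations. The following is a minimal sketch of such a dispatch under that assumption. The class name RedirectWrapperSketch and the stub return values are hypothetical, and the real method body in RequestsRedirectWrapper.py may differ.

```python
class RedirectWrapperSketch(object):
    """Hypothetical stand-in mirroring the documented constructor and constants."""

    USAGE_ALGORITHM_BASE = 0
    USAGE_ALGORITHM_CUSTOM = 1
    DEFAULT_USAGE_ALGORITHM = USAGE_ALGORITHM_BASE

    def __init__(self, dbWrapper=None, siteId=None,
                 usageAlgorithm=DEFAULT_USAGE_ALGORITHM, redirectCodes=None):
        # The four documented public attributes.
        self.dbWrapper = dbWrapper
        self.siteId = siteId
        self.usageAlgorithm = usageAlgorithm
        self.redirectCodes = redirectCodes

    def request(self, url, method, timeout, headers, allowRedirects,
                proxySetting, auth, data, maxRedirects, filters):
        # ASSUMPTION: dispatch on usageAlgorithm; not confirmed by the reference.
        if self.usageAlgorithm == self.USAGE_ALGORITHM_CUSTOM:
            handler = self.requestCustom
        else:
            handler = self.requestBase
        return handler(url, method, timeout, headers, allowRedirects,
                       proxySetting, auth, data, maxRedirects, filters)

    def requestBase(self, url, method, timeout, headers, allowRedirects,
                    proxySetting, auth, data, maxRedirects, filters):
        # Stub: the real method performs the HTTP request.
        return ("base", url)

    def requestCustom(self, url, method, timeout, headers, allowRedirects,
                      proxySetting, auth, data, maxRedirects, filters):
        # Stub: the real method performs the HTTP request.
        return ("custom", url)


wrapper = RedirectWrapperSketch(
    usageAlgorithm=RedirectWrapperSketch.USAGE_ALGORITHM_CUSTOM)
result = wrapper.request("http://example.com/", "get", 10, {}, True,
                         None, None, None, 5, None)
print(result)
```

All ten request arguments are positional and have no defaults in the documented signature, so callers must supply every one of them.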

◆ requestBase()

def dc_crawler.RequestsRedirectWrapper.RequestsRedirectWrapper.requestBase(self, url, method, timeout, headers, allowRedirects, proxySetting, auth, data, maxRedirects, filters)

Definition at line 106 of file RequestsRedirectWrapper.py.

◆ requestCustom()

def dc_crawler.RequestsRedirectWrapper.RequestsRedirectWrapper.requestCustom(self, url, method, timeout, headers, allowRedirects, proxySetting, auth, data, maxRedirects, filters)

Definition at line 197 of file RequestsRedirectWrapper.py.

◆ updateHeaderFields()

def dc_crawler.RequestsRedirectWrapper.RequestsRedirectWrapper.updateHeaderFields(headers) [static]

Definition at line 295 of file RequestsRedirectWrapper.py.

◆ updateHeadersByCookies()

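def dc_crawler.RequestsRedirectWrapper.RequestsRedirectWrapper.updateHeadersByCookies(headers, url, cookieResolver, stage=HTTPCookieResolver.STAGE_DEFAULT) [static]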

Definition at line 391 of file RequestsRedirectWrapper.py.

Member Data Documentation

◆ dbWrapper

dc_crawler.RequestsRedirectWrapper.RequestsRedirectWrapper.dbWrapper

Definition at line 49 of file RequestsRedirectWrapper.py.

◆ DEFAULT_USAGE_ALGORITHM

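int dc_crawler.RequestsRedirectWrapper.RequestsRedirectWrapper.DEFAULT_USAGE_ALGORITHM = USAGE_ALGORITHM_BASE [static]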

Definition at line 41 of file RequestsRedirectWrapper.py.

◆ ERROR_BAD_STATUS_CODE_VALUE

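string dc_crawler.RequestsRedirectWrapper.RequestsRedirectWrapper.ERROR_BAD_STATUS_CODE_VALUE = "Not allowed status code '%s'. Allowed list: %s" [static]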

Definition at line 44 of file RequestsRedirectWrapper.py.
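ERROR_BAD_STATUS_CODE_VALUE is a printf-style template with two %s placeholders. The sketch below shows how such a template can be filled with the `%` operator. The helper name bad_status_message and the sample values are hypothetical, and the reference does not show where or how the class itself formats the message.

```python
# Template copied from the documented static attribute.
ERROR_BAD_STATUS_CODE_VALUE = "Not allowed status code '%s'. Allowed list: %s"


def bad_status_message(status_code, allowed_codes):
    """Fill both placeholders: the rejected code, then the allowed list."""
    return ERROR_BAD_STATUS_CODE_VALUE % (str(status_code), str(allowed_codes))


message = bad_status_message(404, [200, 301, 302])
print(message)  # Not allowed status code '404'. Allowed list: [200, 301, 302]
```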

◆ redirectCodes

dc_crawler.RequestsRedirectWrapper.RequestsRedirectWrapper.redirectCodes

Definition at line 52 of file RequestsRedirectWrapper.py.

◆ REFERER_HEADER_NAME

string dc_crawler.RequestsRedirectWrapper.RequestsRedirectWrapper.REFERER_HEADER_NAME = 'Referer' [static]

Definition at line 36 of file RequestsRedirectWrapper.py.

◆ REQUEST_COOKIE_HEADER_NAME

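string dc_crawler.RequestsRedirectWrapper.RequestsRedirectWrapper.REQUEST_COOKIE_HEADER_NAME = 'Cookie' [static]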

Definition at line 34 of file RequestsRedirectWrapper.py.

◆ RESPONSE_COOKIE_HEADER_NAME

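string dc_crawler.RequestsRedirectWrapper.RequestsRedirectWrapper.RESPONSE_COOKIE_HEADER_NAME = 'set-cookie' [static]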

Definition at line 35 of file RequestsRedirectWrapper.py.
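In HTTP, a server sets cookies through the response header named by RESPONSE_COOKIE_HEADER_NAME, and the client returns them through the request header named by REQUEST_COOKIE_HEADER_NAME. The sketch below illustrates that round trip with the standard library only. The function carry_cookies is hypothetical and is not part of this class. The documented methods __saveCookies() and updateHeadersByCookies() rely on a cookieResolver object whose behavior is not described here.

```python
from http.cookies import SimpleCookie

# Header names copied from the documented static attributes.
REQUEST_COOKIE_HEADER_NAME = 'Cookie'
RESPONSE_COOKIE_HEADER_NAME = 'set-cookie'


def carry_cookies(response_headers, request_headers):
    """Return a copy of request_headers with cookies taken from the response.

    Hypothetical helper: attributes such as Path are dropped and only
    name=value pairs are sent back.
    """
    raw = response_headers.get(RESPONSE_COOKIE_HEADER_NAME)
    updated = dict(request_headers)
    if not raw:
        return updated
    jar = SimpleCookie()
    jar.load(raw)
    pairs = "; ".join("%s=%s" % (name, morsel.value)
                      for name, morsel in jar.items())
    updated[REQUEST_COOKIE_HEADER_NAME] = pairs
    return updated


next_headers = carry_cookies({'set-cookie': 'sid=abc123; Path=/'},
                             {'Referer': 'http://example.com/'})
print(next_headers)
```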

◆ siteId

dc_crawler.RequestsRedirectWrapper.RequestsRedirectWrapper.siteId

Definition at line 50 of file RequestsRedirectWrapper.py.

◆ USAGE_ALGORITHM_BASE

int dc_crawler.RequestsRedirectWrapper.RequestsRedirectWrapper.USAGE_ALGORITHM_BASE = 0 [static]

Definition at line 39 of file RequestsRedirectWrapper.py.

◆ USAGE_ALGORITHM_CUSTOM

int dc_crawler.RequestsRedirectWrapper.RequestsRedirectWrapper.USAGE_ALGORITHM_CUSTOM = 1 [static]

Definition at line 40 of file RequestsRedirectWrapper.py.

◆ usageAlgorithm

dc_crawler.RequestsRedirectWrapper.RequestsRedirectWrapper.usageAlgorithm

Definition at line 51 of file RequestsRedirectWrapper.py.

The documentation for this class was generated from the following file:

- sources/hce/dc_crawler/RequestsRedirectWrapper.py