48 def __init__(self, config, templ=None, domain=None, processorProperties=None):

49 BaseExtractor.__init__(self, config, templ, domain, processorProperties)

50 logger.debug(

"Properties: %s",

varDump(self.properties))

53 self.rankReading(self.__class__.__name__)

56 if processorProperties

is not None and CONSTS.TAG_CLOSE_VOID_PROP_NAME

in processorProperties

and \

57 processorProperties[CONSTS.TAG_CLOSE_VOID_PROP_NAME]

is not None:

58 self.closeVoid = int(processorProperties[CONSTS.TAG_CLOSE_VOID_PROP_NAME])

60 self.keepAttributes =

None 61 if processorProperties

is not None and CONSTS.TAG_KEEP_ATTRIBUTES_PROP_NAME

in processorProperties

and \

62 processorProperties[CONSTS.TAG_KEEP_ATTRIBUTES_PROP_NAME]

is not None:

63 self.keepAttributes = {}

64 for key

in processorProperties[CONSTS.TAG_KEEP_ATTRIBUTES_PROP_NAME]:

65 self.keepAttributes[key.lower()] = processorProperties[CONSTS.TAG_KEEP_ATTRIBUTES_PROP_NAME][key]

67 if processorProperties

is not None and CONSTS.TAG_MARKUP_PROP_NAME

in processorProperties

and \

68 processorProperties[CONSTS.TAG_MARKUP_PROP_NAME]

is not None:

69 self.innerTextTagReplacers = {}

70 for key

in processorProperties[CONSTS.TAG_MARKUP_PROP_NAME]:

71 self.innerTextTagReplacers[key.lower()] = processorProperties[CONSTS.TAG_MARKUP_PROP_NAME][key]

73 self.innerTextTagReplacers =

None 75 self.name = self.SELF_NAME

76 self.data[

"extractor"] = self.SELF_NAME

83 if processorProperties

is not None and "SCRAPER_SCRAPY_PRECONFIGURED" in processorProperties:

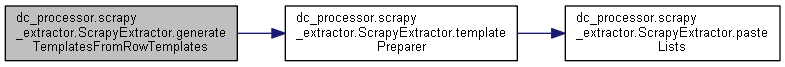

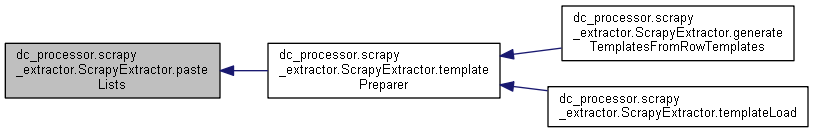

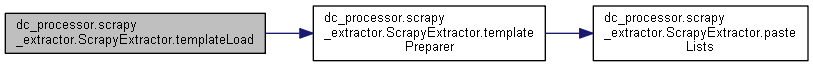

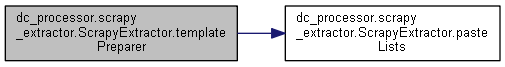

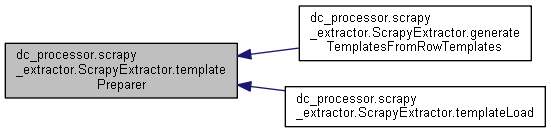

84 self.templates = self.generateTemplatesFromRowTemplates(json.loads(processorProperties\

85 [

"SCRAPER_SCRAPY_PRECONFIGURED"]), domain)

87 self.templates = [{self.SELF_NAME +

"_default": self.templateLoad(config, templ, domain)}]

90 defaultConfigTemplate = config.get(

"Application",

"default_template",

None)

91 except ConfigParser.NoOptionError:

92 defaultConfigTemplate =

None 93 if defaultConfigTemplate

is not None:

94 logger.debug(

">>> Extend Templates with config default template")

95 tempTemplates = self.generateTemplatesFromRowTemplates(json.loads(defaultConfigTemplate), domain)

96 if len(tempTemplates) > 0:

98 for templeteElemConfig

in tempTemplates:

99 for templeteElemProperty

in self.templates:

100 for templeteKeyProperty

in templeteElemProperty:

101 if templeteKeyProperty

in templeteElemConfig:

102 templeteElemConfig =

None 104 if templeteElemConfig

is None:

106 if templeteElemConfig

is not None:

107 newTemplates.append(templeteElemConfig)

108 self.templates = self.templates + newTemplates

109 self.blockedByXpathTags = []

110 logger.debug(

"!!! INIT Template Domain: '%s'", str(domain))

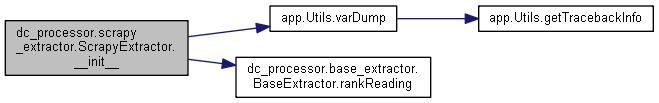

def varDump(obj, stringify=True, strTypeMaxLen=256, strTypeCutSuffix='...', stringifyType=1, ignoreErrors=False, objectsHash=None, depth=0, indent=2, ensure_ascii=False, maxDepth=10)

def __init__(self)

constructor

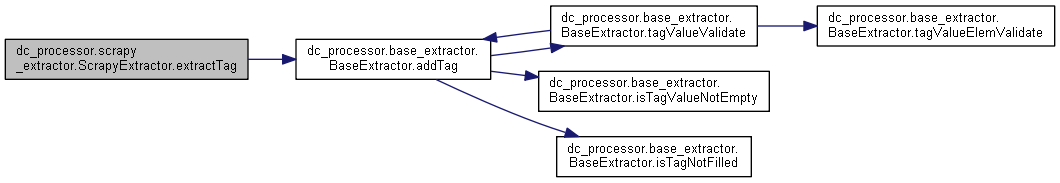

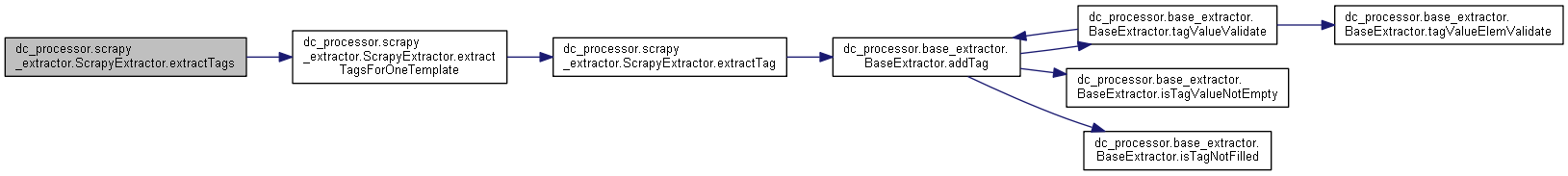

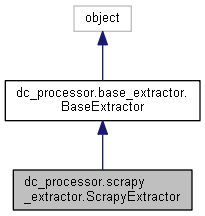

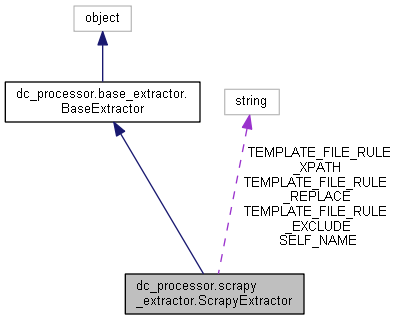

Public Member Functions inherited from dc_processor.base_extractor.BaseExtractor

Public Member Functions inherited from dc_processor.base_extractor.BaseExtractor Public Attributes inherited from dc_processor.base_extractor.BaseExtractor

Public Attributes inherited from dc_processor.base_extractor.BaseExtractor Static Public Attributes inherited from dc_processor.base_extractor.BaseExtractor

Static Public Attributes inherited from dc_processor.base_extractor.BaseExtractor