dc_crawler.Fetcher.RequestsFetcher Class Reference

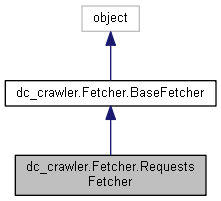

Inheritance diagram for dc_crawler.Fetcher.RequestsFetcher:

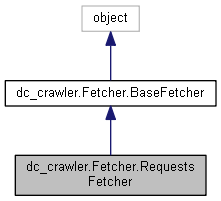

Collaboration diagram for dc_crawler.Fetcher.RequestsFetcher:

Public Member Functions

| def | __init__ (self, dbWrapper=None, siteId=None) |

| def | open (self, url, method='get', headers=None, timeout=100, allow_redirects=True, proxies=None, auth=None, data=None, log=None, allowed_content_types=None, max_resource_size=None, max_redirects=CONSTS.MAX_HTTP_REDIRECTS_LIMIT, filters=None, executable_path=None, depth=None, macro=None) |

| def | fixWrongXMLHeader (self, contentStr) |

Public Member Functions inherited from dc_crawler.Fetcher.BaseFetcher

| def | __init__ (self) |

| def | open (self, url, method='get', headers=None, timeout=100, allow_redirects=True, proxies=None, auth=None, data=None, log=None, allowed_content_types=None, max_resource_size=None, max_redirects=CONSTS.MAX_HTTP_REDIRECTS_LIMIT, filters=None, executable_path=None, depth=None, macro=None) |

| def | should_have_meta_res (self) |

| def | getDomainNameFromURL (self, url, default='') |

Public Attributes

| dbWrapper | |

| siteId | |

Public Attributes inherited from dc_crawler.Fetcher.BaseFetcher

| connectionTimeout | |

| logger | |

Additional Inherited Members

Static Public Member Functions inherited from dc_crawler.Fetcher.BaseFetcher

| def | init (dbWrapper=None, siteId=None) |

| def | get_fetcher (typ, dbWrapper=None, siteId=None) |

Static Public Attributes inherited from dc_crawler.Fetcher.BaseFetcher

| fetchers = None | |

| int | TYP_NORMAL = 1 |

| int | TYP_DYNAMIC = 2 |

| int | TYP_URLLIB = 5 |

| int | TYP_CONTENT = 6 |

| int | TYP_AUTO = 7 |

| float | CONNECTION_TIMEOUT = 1.0 |
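The static members listed above suggest a registry-style factory: `init` fills the class-level `fetchers` attribute and `get_fetcher` looks up an instance by one of the `TYP_*` constants. The following is a minimal sketch of that pattern, not the actual Fetcher.py code. The `*Sketch` class names, the dictionary registry, the mapping of `TYP_NORMAL` to a requests-style fetcher, and the `ValueError` raised for an unregistered type are all assumptions made for illustration.

```python
class BaseFetcherSketch(object):
    """Hypothetical stand-in for BaseFetcher; only the member names and
    constant values are taken from the reference page."""

    # Values copied from the static attribute list.
    TYP_NORMAL = 1
    TYP_DYNAMIC = 2
    TYP_URLLIB = 5
    TYP_CONTENT = 6
    TYP_AUTO = 7
    CONNECTION_TIMEOUT = 1.0

    # Starts as None, as in the reference; filled by init().
    fetchers = None

    @staticmethod
    def init(dbWrapper=None, siteId=None):
        # ASSUMPTION: the registry maps a type constant to a fetcher instance,
        # and TYP_NORMAL corresponds to the requests-based fetcher.
        BaseFetcherSketch.fetchers = {
            BaseFetcherSketch.TYP_NORMAL: RequestsFetcherSketch(dbWrapper, siteId),
        }

    @staticmethod
    def get_fetcher(typ, dbWrapper=None, siteId=None):
        # Build the registry on first use, then look the type up.
        if BaseFetcherSketch.fetchers is None:
            BaseFetcherSketch.init(dbWrapper, siteId)
        try:
            return BaseFetcherSketch.fetchers[typ]
        except KeyError:
            # ASSUMPTION: the real error handling is not documented.
            raise ValueError("no fetcher registered for type %r" % (typ,))


class RequestsFetcherSketch(BaseFetcherSketch):
    """Hypothetical stand-in for RequestsFetcher."""

    def __init__(self, dbWrapper=None, siteId=None):
        # The reference lists dbWrapper and siteId as public attributes.
        self.dbWrapper = dbWrapper
        self.siteId = siteId
```

Under these assumptions, `BaseFetcherSketch.get_fetcher(BaseFetcherSketch.TYP_NORMAL, siteId="site-1")` returns the same cached instance on every call, so per-site arguments only take effect on the first call.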

Detailed Description

Definition at line 165 of file Fetcher.py.

Constructor & Destructor Documentation

◆ __init__()

def dc_crawler.Fetcher.RequestsFetcher.__init__ (self, dbWrapper=None, siteId=None)

Definition at line 167 of file Fetcher.py.

Member Function Documentation

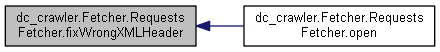

◆ fixWrongXMLHeader()

def dc_crawler.Fetcher.RequestsFetcher.fixWrongXMLHeader (self, contentStr)

◆ open()

def dc_crawler.Fetcher.RequestsFetcher.open (self, url, method='get', headers=None, timeout=100, allow_redirects=True, proxies=None, auth=None, data=None, log=None, allowed_content_types=None, max_resource_size=None, max_redirects=CONSTS.MAX_HTTP_REDIRECTS_LIMIT, filters=None, executable_path=None, depth=None, macro=None)

Definition at line 201 of file Fetcher.py.

295 if (impl_res.encoding is None) or ((encoding is None) and (impl_res.encoding not in ct and "xml" not in ct)):
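The condition quoted from line 295 of Fetcher.py can be read as a standalone predicate. The sketch below reproduces only the boolean logic of that line. The function and parameter names are hypothetical, `ct` is assumed to be the Content-Type header value as a string, and what the original code does when the condition holds is not shown in this reference.

```python
def needs_encoding_fallback(response_encoding, forced_encoding, ct):
    """Mirror of the boolean test at line 295 (names are hypothetical).

    response_encoding -- stands in for impl_res.encoding
    forced_encoding   -- stands in for the local variable `encoding`
    ct                -- ASSUMED to be the Content-Type header string
    """
    # First operand: the response carries no encoding at all.
    if response_encoding is None:
        return True
    # Second operand: no encoding was supplied, the response encoding does
    # not appear in the content type, and the content type is not XML.
    return forced_encoding is None and (
        response_encoding not in ct and "xml" not in ct
    )
```

For example, `needs_encoding_fallback("ISO-8859-1", None, "text/html")` is true because the encoding name does not occur in the header, while `needs_encoding_fallback("utf-8", None, "text/html; charset=utf-8")` is false.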

329 except (requests.exceptions.Timeout, requests.exceptions.ReadTimeout, requests.exceptions.ConnectTimeout), err:
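The `except (...), err:` form quoted from line 329 is Python 2 syntax; Python 3 accepts only `except (...) as err:`. The sketch below shows the Python 3 spelling of the same clause. To stay runnable without the `requests` package it uses local stand-in exception classes, and the `describe_failure` helper is hypothetical. In `requests` itself, `ConnectTimeout` additionally inherits from `ConnectionError`, which the stand-ins omit.

```python
class Timeout(Exception):
    """Stand-in for requests.exceptions.Timeout."""


class ReadTimeout(Timeout):
    """Stand-in for requests.exceptions.ReadTimeout."""


class ConnectTimeout(Timeout):
    """Stand-in for requests.exceptions.ConnectTimeout."""


def describe_failure(exc):
    """Raise `exc` and report which handler caught it (hypothetical helper)."""
    try:
        raise exc
    # Python 3 form of the clause at line 329: `as err` replaces `, err`.
    except (Timeout, ReadTimeout, ConnectTimeout) as err:
        return "timeout: %s" % type(err).__name__
    except Exception as err:
        return "other: %s" % type(err).__name__
```

Because `ReadTimeout` and `ConnectTimeout` are subclasses of `Timeout`, listing `Timeout` alone would catch all three; the tuple is kept here only to match the quoted line.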

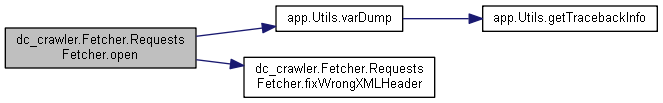

def varDump(obj, stringify=True, strTypeMaxLen=256, strTypeCutSuffix='...', stringifyType=1, ignoreErrors=False, objectsHash=None, depth=0, indent=2, ensure_ascii=False, maxDepth=10)

Definition: Utils.py:410

Here is the call graph for this function:

Member Data Documentation

◆ dbWrapper

| dc_crawler.Fetcher.RequestsFetcher.dbWrapper |

Definition at line 170 of file Fetcher.py.

◆ siteId

| dc_crawler.Fetcher.RequestsFetcher.siteId |

Definition at line 171 of file Fetcher.py.

The documentation for this class was generated from the following file:

- sources/hce/dc_crawler/Fetcher.py