def openT(self, url, headers, timeout, proxies, executable_path, macro):
  startTime = time.time()
  # ...
  if self.inlineURLMacroDelimiter in url:
    t = url.split(self.inlineURLMacroDelimiter)
    # ...

  try:
    from selenium import webdriver
    import selenium.webdriver.support.ui
  except Exception as err:
    msg = 'Selenium module import error: ' + str(err)
    if self.logger is not None:
      self.logger.error(msg)
    raise SeleniumFetcherException(msg)

  if self.logger is not None:
    from selenium.webdriver.remote.remote_connection import LOGGER as seleniumLogger
    seleniumLogger.setLevel(self.logger.getEffectiveLevel())
    selenium_logger = logging.getLogger('selenium.webdriver.remote.remote_connection')
    selenium_logger.setLevel(self.logger.getEffectiveLevel())

  driver_name = "chromedriver"
  page_source_macro = None
  content_type_macro = None
  result_type_macro = self.MACRO_RESULT_TYPE_DEFAULT
  fatalErrors = [self.ERROR_FATAL, self.ERROR_GENERAL, self.ERROR_NAME_NOT_RESOLVED, self.ERROR_TOO_MANY_REDIRECTS,
                 self.ERROR_PROXY_CONNECTION_FAILED, self.ERROR_CONNECTION_TIMED_OUT, self.ERROR_CONFLICT,
                 self.ERROR_TUNNEL_CONNECTION_FAILED, self.ERROR_EMPTY_RESPONSE, self.ERROR_SERVICE_UNAVAILABLE]
  # ...

  envVars = {"DISPLAY": "", "LANG": "en_US.UTF-8"}
  for varName in envVars:
    v = os.getenv(varName, "")
    if varName == "DISPLAY":
      if v == "":
        raise SeleniumFetcherException("Environment variable 'DISPLAY' is not set!")
    else:
      if v != envVars[varName]:
        raise SeleniumFetcherException("Environment variable '" + varName + "' value expected:'" +
                                       envVars[varName] + "', got from os: '" + v + "'; all env: " +
                                       ...)  # rest of the message is not shown in the listing

  # ...
  try:
    chrome_option = self.getOptions(webdriver, headers, proxies, url)
    if executable_path is None:
      # Pointer size in bits (32 or 64) selects the matching driver binary.
      path = exec_path + driver_name + str(ctypes.sizeof(ctypes.c_voidp) * 8)
    else:
      path = executable_path
    if self.logger is not None:
      self.logger.debug("Chrome driver executable path: %s, options: %s", str(path), str(chrome_option.arguments))
    from selenium.webdriver.common.desired_capabilities import DesiredCapabilities
    # Copy so that the shared class-level CHROME capabilities dict is not mutated.
    d = DesiredCapabilities.CHROME.copy()
    d['loggingPrefs'] = {'browser': 'ALL'}
    self.driver = webdriver.Chrome(executable_path=path, chrome_options=chrome_option, desired_capabilities=d)
    self.driverPid = self.driver.service.process.pid
    if self.logger is not None:
      self.logger.debug("Driver pid: " + str(self.driverPid))

  except Exception as err:
    error_msg = 'Driver initialization error: ' + str(err)
    error_code = self.ERROR_FATAL
  except:
    error_msg = 'General driver initialization error!'
    error_code = self.ERROR_GENERAL
  # ...
    if self.logger is not None:
      self.logger.error('Fatal error: ' + error_msg)
    raise SeleniumFetcherException(error_msg)

  # ...
  try:
    # ...
    try:
      if self.logger is not None:
        self.logger.debug("Chrome driver get url: `%s`", str(url))
      # ...
      log_types = self.driver.log_types
      if 'browser' in log_types:
        log_list = self.driver.get_log('browser')
        if self.logger is not None:
          self.logger.debug("Driver logs: " + str(log_list))
        for item_dict in log_list:
          if self.logger is not None:
            self.logger.debug("Driver message: `%s`", str(item_dict.get("message")))
          if "message" in item_dict and ((url + ' ') in item_dict["message"] or
                                         (url + '/ ') in item_dict["message"]):
            error_msg += item_dict["message"] + " | "
      else:
        if self.logger is not None:
          self.logger.error("No driver logs!")

      entrances = [
        (r"(.*)net::ERR_NAME_NOT_RESOLVED(.*)", self.ERROR_NAME_NOT_RESOLVED),
        (r"(.*)net::ERR_TOO_MANY_REDIRECTS(.*)", self.ERROR_TOO_MANY_REDIRECTS),
        (r"(.*)ERR_PROXY_CONNECTION_FAILED(.*)", self.ERROR_PROXY_CONNECTION_FAILED),
        (r"(.*)net::ERR_CONNECTION_TIMED_OUT(.*)", self.ERROR_CONNECTION_TIMED_OUT),
        (r"(.*)net::ERR_TUNNEL_CONNECTION_FAILED(.*)", self.ERROR_TUNNEL_CONNECTION_FAILED),
        (r"(.*)net::ERR_CONNECTION_RESET(.*)", self.ERROR_TUNNEL_CONNECTION_FAILED),
        (r"(.*)net::ERR_INVALID_URL(.*)", self.ERROR_TUNNEL_CONNECTION_FAILED),
        (r"(.*)net::ERR_EMPTY_RESPONSE(.*)", self.ERROR_EMPTY_RESPONSE),
        (r"(.*)" + self.LOG_MESSAGE_RENDERRER_TIMEOUT + r"(.*)", self.ERROR_CONNECTION_TIMED_OUT),
        (r"(.*)" + self.LOG_MESSAGE_SERVER_RESPONSE_503 + r"(.*)", self.ERROR_SERVICE_UNAVAILABLE),
        (r"(.*)" + self.LOG_MESSAGE_SERVER_RESPONSE_409 + r"(.*)", self.ERROR_CONFLICT),
        (r"(.*)403 \(Forbidden\)(.*)", 403),
        (r"(.*)404 \(Not Found\)(.*)", 404),
        (r"(.*)500 \(Internal Server Error\)(.*)", 500),
        (r"(.*)net::(.*)", 520)]
      for item in entrances:
        regex = re.compile(item[0])
        r = regex.search(error_msg)
        # ...
      if self.logger is not None:
        self.logger.debug("Page error: " + error_msg)

      if error_code not in fatalErrors and inlineMacro != '':
        if self.logger is not None:
          self.logger.debug("Execute inline macro: %s", str(inlineMacro))
        macroResults, errorCode, errorMsg = self.execMacroSimple([inlineMacro])
      if error_code not in fatalErrors and macro is not None:
        if self.logger is not None:
          self.logger.debug("Execute macro: %s", str(macro))
        if isinstance(macro, list):
          macroResults, errorCode, errorMsg = self.execMacroSimple(macro)
        else:
          macroResults, errorCode, errorMsg, content_type_macro, result_type_macro = self.execMacroExtended(macro)
        # ...
          error_code_macro |= APP_CONSTS.ERROR_MACRO
        if len(macroResults) > 0:
          if result_type_macro == self.MACRO_RESULT_TYPE_CONTENT:
            page_source_macro = macroResults
          else:
            page_source_macro = json.dumps(macroResults, ensure_ascii=False)

    except Exception as err:
      error_msg = 'Driver error: ' + str(err) + '; logs: ' + self.getAllLogsAsString()
      error_code = self.ERROR_FATAL
    except:
      error_msg = "General driver usage error!"
      error_code = self.ERROR_GENERAL

    # ...
      if self.logger is not None:
        self.logger.debug("Wait on damping timeout to load all dynamic parts of the page: %s sec", str(timeout))
      # ...
    elif error_code in fatalErrors:
      if self.logger is not None:
        self.logger.debug("Fatal error, code: %s, msg: %s", str(error_code), error_msg)
      if error_code == self.ERROR_NAME_NOT_RESOLVED:
        code = APP_CONSTS.ERROR_FETCH_INVALID_URL
      elif error_code == self.ERROR_TOO_MANY_REDIRECTS:
        code = APP_CONSTS.ERROR_FETCH_TOO_MANY_REDIRECTS
      elif error_code == self.ERROR_PROXY_CONNECTION_FAILED:
        code = APP_CONSTS.ERROR_FETCH_CONNECTION_ERROR
      elif error_code == self.ERROR_CONNECTION_TIMED_OUT:
        code = APP_CONSTS.ERROR_FETCH_CONNECTION_TIMEOUT
      elif error_code == self.ERROR_TUNNEL_CONNECTION_FAILED:
        code = APP_CONSTS.ERROR_FETCH_FORBIDDEN
      elif error_code == self.ERROR_EMPTY_RESPONSE:
        code = APP_CONSTS.ERROR_EMPTY_RESPONSE
      elif error_code == self.ERROR_SERVICE_UNAVAILABLE:
        code = APP_CONSTS.ERROR_FETCH_FORBIDDEN
      elif error_code == self.ERROR_CONFLICT:
        code = APP_CONSTS.ERROR_FETCH_HTTP_ERROR
      else:
        code = APP_CONSTS.ERROR_FETCHER_INTERNAL
      raise SeleniumFetcherException(error_msg, code)

    # ...
    try:
      page_source = self.driver.page_source
      cookies = self.driver.get_cookies()
    except Exception as err:
      # ...
      error_code = self.ERROR_CONTENT_OR_COOKIE
    except:
      error_msg = "Content and cookies get error!"
      error_code = self.ERROR_CONTENT_OR_COOKIE

    # ...
    try:
      attr = self.driver.find_element_by_xpath(
        ".//meta[translate(@http-equiv,'ABCDEFGHIJKLMNOPQRSTUVWXYZ','abcdefghijklmnopqrstuvwxyz')='content-type']"
      ).get_attribute("content")
      regex = re.compile(r"(.*); charset=(.*)", re.IGNORECASE)
      items = regex.search(attr)
      if items is not None:
        items = items.groups()
        # ...
        content_type = items[0]
    except Exception as err:
      # ...

    if content_type is None:
      try:
        attr = self.driver.find_element_by_xpath('//html')
        content_type = self.CONTENT_TYPE_HTML
      except Exception as err:
        # ...
    if content_type is not None and charset is None:
      try:
        charset = self.driver.find_element_by_xpath('//meta[@charset]').get_attribute("charset")
      except Exception as err:
        # ...
        try:
          charset = self.driver.execute_script("return document.characterSet;")
        except Exception as err:
          if self.logger is not None:
            self.logger.debug("Charset detection error: %s", str(err))

    try:
      current_url = self.driver.current_url
    except Exception as err:
      # ...
      if self.logger is not None:
        self.logger.debug("Get 'current_url' error: %s, input url assumed: %s", str(err), str(url))

    # ...
    try:
      # ...
      res.url = current_url
      if error_code > 100 or error_code == self.ERROR_FATAL:
        res.status_code = error_code
      else:
        res.status_code = 200
      if page_source_macro is None:
        res.unicode_content = page_source
      else:
        res.unicode_content = page_source_macro
      res.str_content = res.unicode_content
      res.rendered_unicode_content = res.unicode_content
      res.content_size = len(res.unicode_content)
      res.encoding = charset
      res.headers = {'content-length': res.content_size}
      if page_source_macro is not None:
        if content_type_macro is not None:
          content_type = content_type_macro
        else:
          content_type = self.CONTENT_TYPE_JSON
      if content_type is not None:
        res.headers['content-type'] = content_type
      if current_url != url:
        res.headers['location'] = current_url
      res.meta_res = res.unicode_content
      res.cookies = cookies
      res.dynamic_fetcher_type = driver_name
      res.dynamic_fetcher_result_type = result_type_macro
      if error_code_macro != APP_CONSTS.ERROR_OK:
        res.error_mask |= error_code_macro
      res.time = time.time() - startTime
      res.request = {'headers': headers}
      res.error_msg = error_msg

    except Exception as err:
      msg = 'Response fill error: ' + str(err)
      if self.logger is not None:
        self.logger.error(msg)
      raise SeleniumFetcherException(msg)
    if self.logger is not None and error_msg != "":
      self.logger.debug("Dynamic fetcher non-fatal error: " + error_msg)
    # ...
  except Exception as err:
    msg = 'Unrecognized dynamic fetcher error: ' + str(err)
    if self.logger is not None:
      self.logger.error(msg)
    raise SeleniumFetcherException(msg)
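The error classification in `openT` works in two steps: browser-log messages that mention the requested URL are concatenated into `error_msg`, and that string is then scanned against the ordered `entrances` table of `(pattern, code)` pairs. The sketch below isolates those two steps under stated assumptions. The constants `ERROR_*`, the names `ENTRANCES`, `COMPILED`, `collect_url_errors` and `classify` are hypothetical stand-ins. The table is a subset of the one in the listing. "First match wins" is assumed, because the lines that handle a match are not shown. The `(.*)` wrappers are dropped since `re.search` already matches anywhere in the string. The log entry is illustrative, not a verbatim Chrome message.

```python
import re

# Hypothetical stand-ins for the fetcher's class constants
# (self.ERROR_NAME_NOT_RESOLVED etc.); the real values are not shown.
ERROR_OK = 0
ERROR_NAME_NOT_RESOLVED = 1
ERROR_TOO_MANY_REDIRECTS = 2
ERROR_CONNECTION_TIMED_OUT = 3
ERROR_EMPTY_RESPONSE = 4

# Ordered (pattern, code) table, checked top to bottom. The generic
# "net::" entry must stay last, or it would shadow the specific ones.
ENTRANCES = [
    (r"net::ERR_NAME_NOT_RESOLVED", ERROR_NAME_NOT_RESOLVED),
    (r"net::ERR_TOO_MANY_REDIRECTS", ERROR_TOO_MANY_REDIRECTS),
    (r"net::ERR_CONNECTION_TIMED_OUT", ERROR_CONNECTION_TIMED_OUT),
    (r"net::ERR_EMPTY_RESPONSE", ERROR_EMPTY_RESPONSE),
    (r"403 \(Forbidden\)", 403),
    (r"404 \(Not Found\)", 404),
    (r"500 \(Internal Server Error\)", 500),
    (r"net::", 520),
]
COMPILED = [(re.compile(pattern), code) for pattern, code in ENTRANCES]


def collect_url_errors(log_entries, url):
    """Concatenate log messages that mention `url`, separated by ' | '."""
    error_msg = ""
    for entry in log_entries:
        message = entry.get("message", "")
        # The URL must be followed by a space, or by "/ " when the logged
        # URL carries a trailing slash.
        if (url + " ") in message or (url + "/ ") in message:
            error_msg += message + " | "
    return error_msg


def classify(error_msg):
    """Return the code of the first matching pattern, or ERROR_OK."""
    for regex, code in COMPILED:
        if regex.search(error_msg):
            return code
    return ERROR_OK


# Illustrative entries shaped like the dicts from driver.get_log('browser').
logs = [
    {"level": "SEVERE",
     "message": "http://example.com/ - Failed to load resource: net::ERR_NAME_NOT_RESOLVED"},
    {"level": "INFO", "message": "unrelated console output"},
]
code = classify(collect_url_errors(logs, "http://example.com"))
```

Keeping the table as data makes precedence explicit: a message that matches several patterns gets the code of the earliest entry, so reordering the list changes the result.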

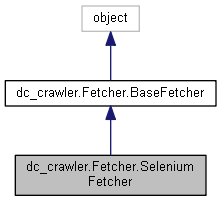

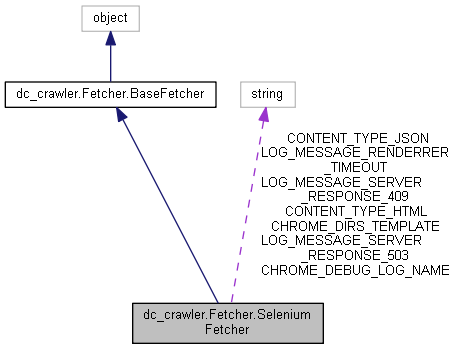
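Content-type detection in `openT` reads the `content` attribute of a `<meta http-equiv="content-type">` element. The element is matched case-insensitively through XPath 1.0 `translate()`, because XPath 1.0 has no `lower-case()`. The value is then split with the regex `(.*); charset=(.*)`. The sketch below isolates only that parsing step. The function name `parse_meta_content_type` is hypothetical. Returning the charset as well is an assumption, since the listing only shows `items[0]` being used.

```python
import re

# Same pattern as in the listing: "<type>; charset=<charset>", case-insensitive.
CONTENT_TYPE_RE = re.compile(r"(.*); charset=(.*)", re.IGNORECASE)


def parse_meta_content_type(content):
    """Split a meta http-equiv content value into (content_type, charset).

    Returns (None, None) when the value has no "; charset=" part. The
    pattern requires exactly one space after the semicolon.
    """
    match = CONTENT_TYPE_RE.search(content)
    if match is None:
        return None, None
    content_type, charset = match.groups()
    return content_type, charset


print(parse_meta_content_type("text/html; charset=UTF-8"))  # ('text/html', 'UTF-8')
```

Values written without the space, such as `text/html;charset=utf-8`, do not match this pattern. For such pages the charset would presumably come from the later fallbacks in the listing: `//meta[@charset]`, then `document.characterSet`.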

Public Member Functions inherited from dc_crawler.Fetcher.BaseFetcher

Public Attributes inherited from dc_crawler.Fetcher.BaseFetcher

Static Public Member Functions inherited from dc_crawler.Fetcher.BaseFetcher