Classes

| class | Meta |

Public Member Functions

| def | __init__ (self, usageModel=APP_CONSTS.APP_USAGE_MODEL_PROCESS, configFile=None, logger=None, inputData=None) |

| def | setup (self) |

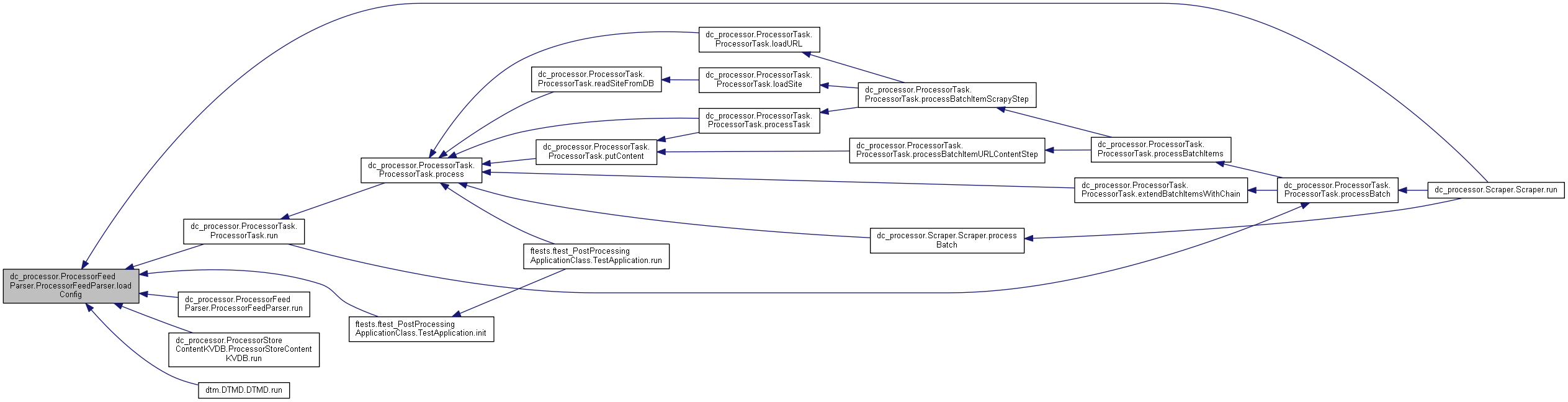

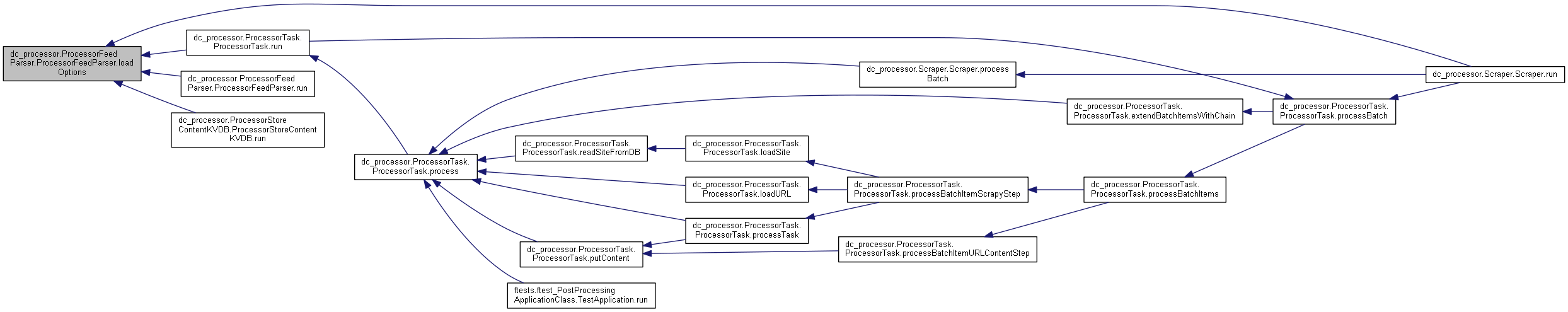

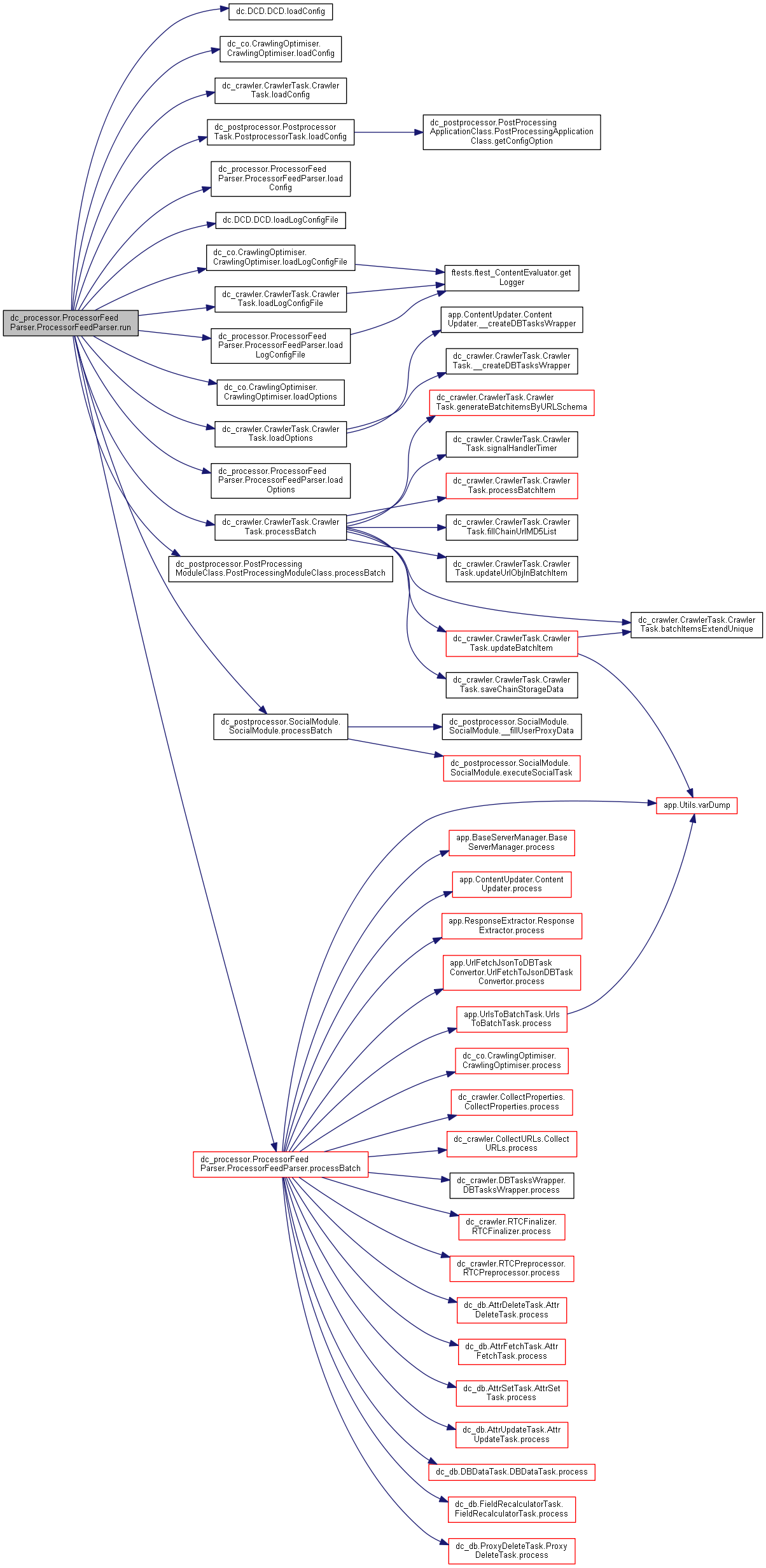

| def | run (self) |

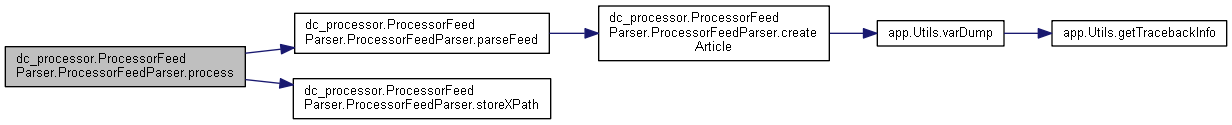

| def | createArticle (self) |

| def | parseFeed (self) |

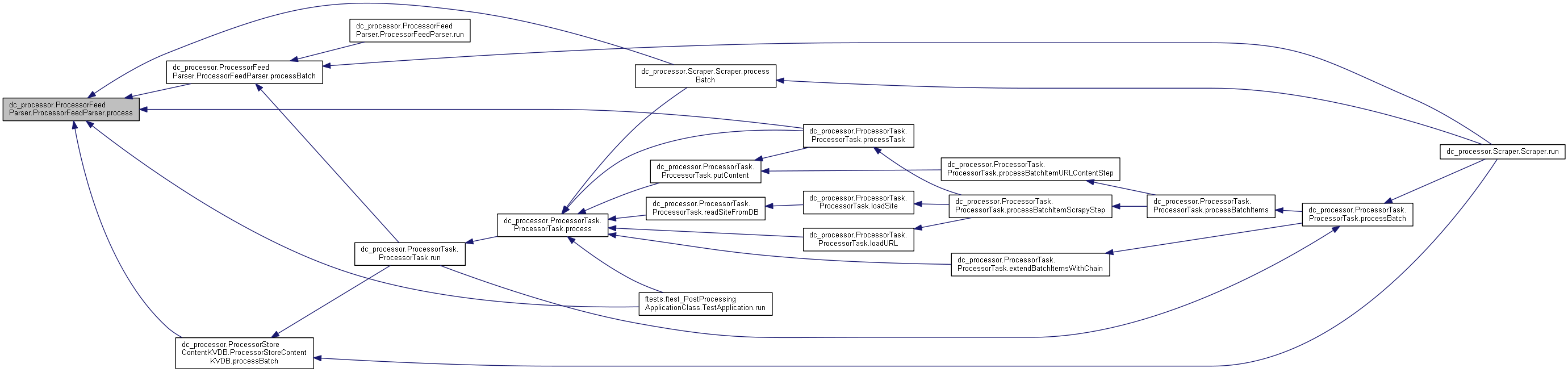

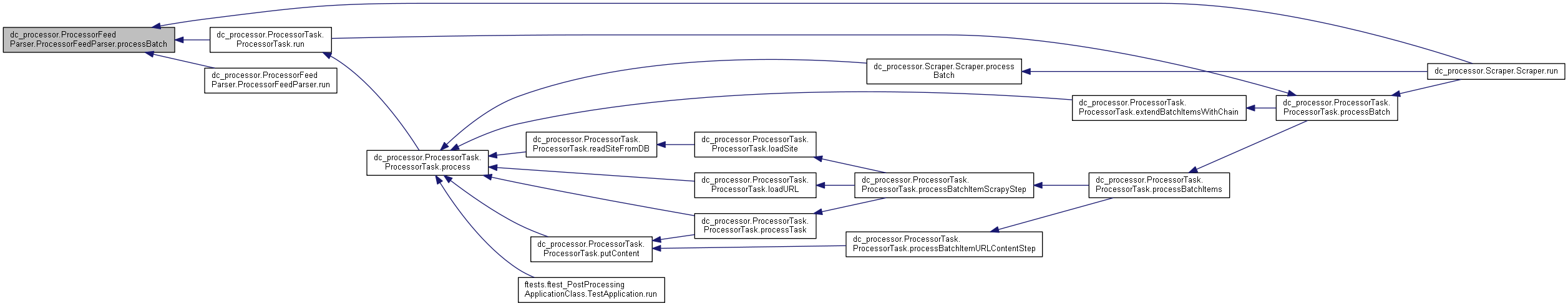

| def | process (self) |

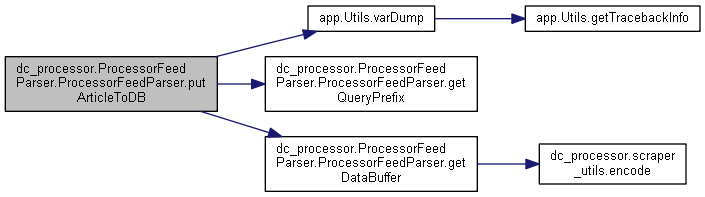

| def | getQueryPrefix (self) |

| For. | |

| def | getDataBuffer (self, data) |

| Prepares the data buffer. | |

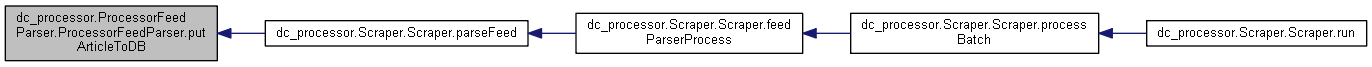

| def | putArticleToDB (self, result) |

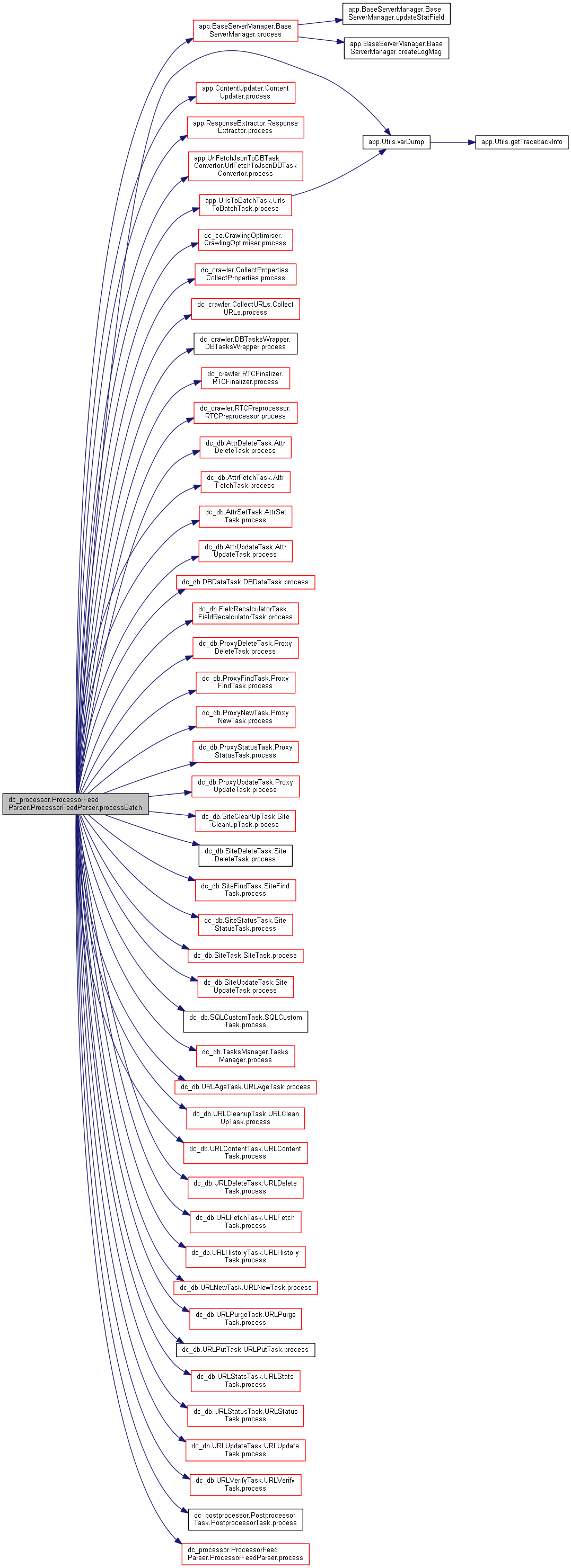

| def | processBatch (self) |

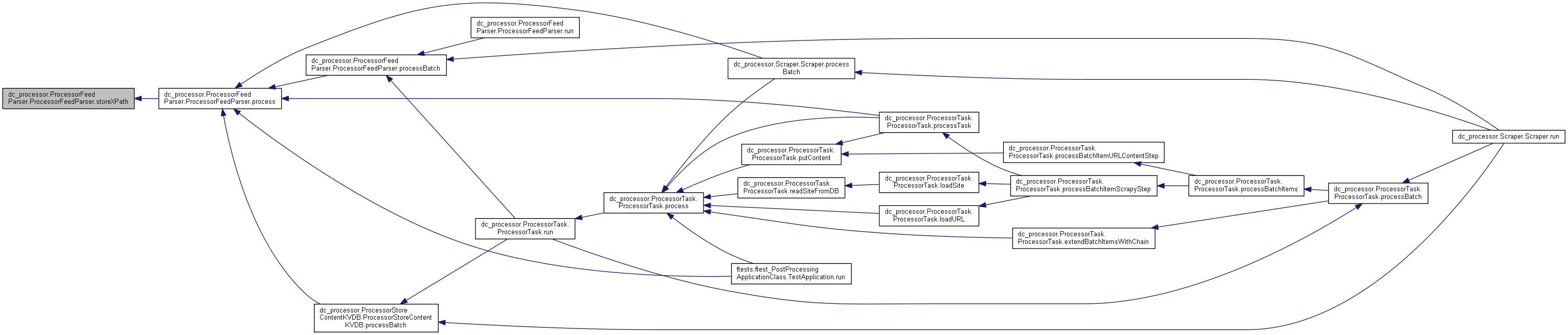

| def | storeXPath (self) |

| storeXPath | |

| def | loadConfig (self) |

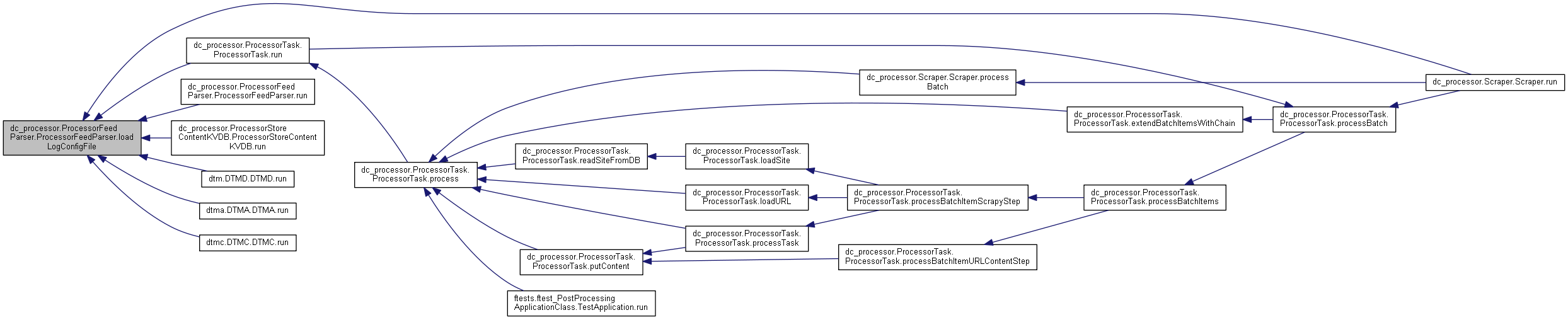

| def | loadLogConfigFile (self) |

| def | loadOptions (self) |

| def | getExitCode (self) |

Detailed Description

Definition at line 31 of file ProcessorFeedParser.py.

Constructor & Destructor Documentation

◆ __init__()

| def dc_processor.ProcessorFeedParser.ProcessorFeedParser.__init__ | ( | self, usageModel = APP_CONSTS.APP_USAGE_MODEL_PROCESS, configFile = None, logger = None, inputData = None | ) |

Definition at line 43 of file ProcessorFeedParser.py.

Member Function Documentation

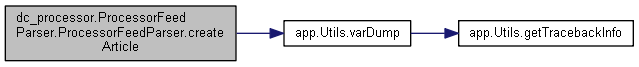

◆ createArticle()

| def dc_processor.ProcessorFeedParser.ProcessorFeedParser.createArticle | ( | self | ) |

Definition at line 113 of file ProcessorFeedParser.py.

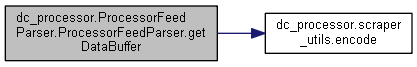

◆ getDataBuffer()

| def dc_processor.ProcessorFeedParser.ProcessorFeedParser.getDataBuffer | ( | self, data | ) |

Prepares the data buffer.

Definition at line 253 of file ProcessorFeedParser.py.

◆ getExitCode()

| def dc_processor.ProcessorFeedParser.ProcessorFeedParser.getExitCode | ( | self | ) |

Definition at line 417 of file ProcessorFeedParser.py.

◆ getQueryPrefix()

| def dc_processor.ProcessorFeedParser.ProcessorFeedParser.getQueryPrefix | ( | self | ) |

For.

Definition at line 240 of file ProcessorFeedParser.py.

◆ loadConfig()

| def dc_processor.ProcessorFeedParser.ProcessorFeedParser.loadConfig | ( | self | ) |

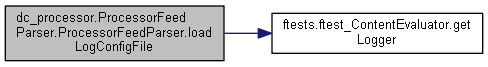

◆ loadLogConfigFile()

| def dc_processor.ProcessorFeedParser.ProcessorFeedParser.loadLogConfigFile | ( | self | ) |

Definition at line 379 of file ProcessorFeedParser.py.

◆ loadOptions()

| def dc_processor.ProcessorFeedParser.ProcessorFeedParser.loadOptions | ( | self | ) |

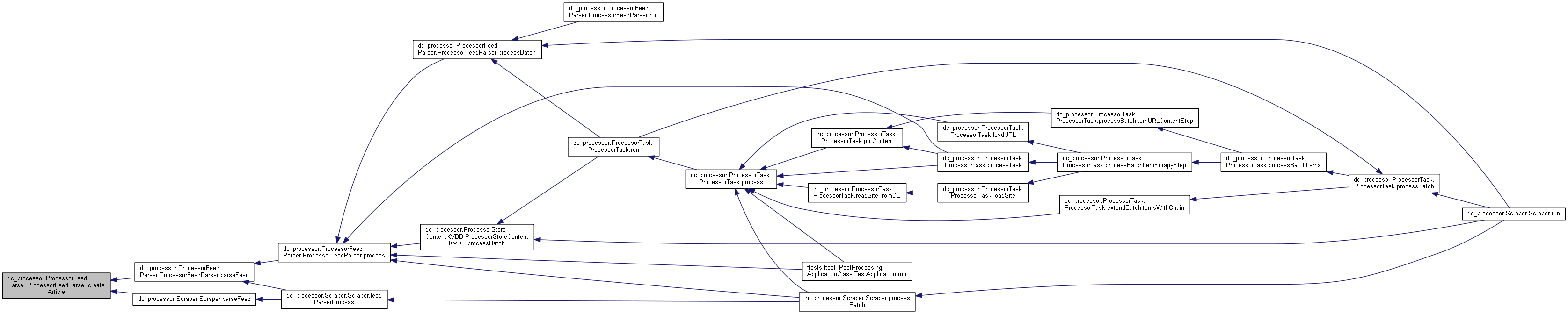

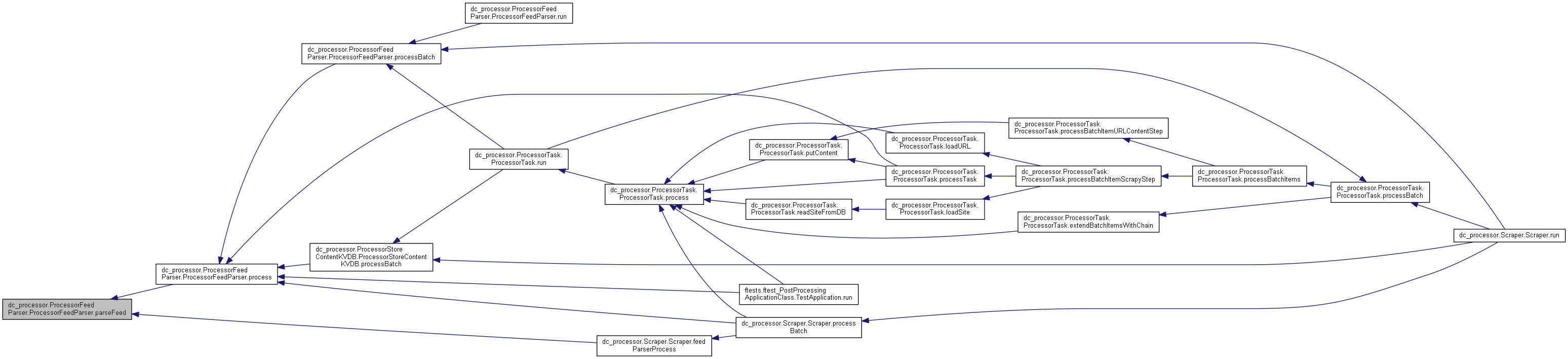

◆ parseFeed()

| def dc_processor.ProcessorFeedParser.ProcessorFeedParser.parseFeed | ( | self | ) |

Definition at line 197 of file ProcessorFeedParser.py.

◆ process()

| def dc_processor.ProcessorFeedParser.ProcessorFeedParser.process | ( | self | ) |

Definition at line 213 of file ProcessorFeedParser.py.

◆ processBatch()

| def dc_processor.ProcessorFeedParser.ProcessorFeedParser.processBatch | ( | self | ) |

Definition at line 304 of file ProcessorFeedParser.py.

◆ putArticleToDB()

| def dc_processor.ProcessorFeedParser.ProcessorFeedParser.putArticleToDB | ( | self, result | ) |

Definition at line 264 of file ProcessorFeedParser.py.

◆ run()

| def dc_processor.ProcessorFeedParser.ProcessorFeedParser.run | ( | self | ) |

◆ setup()

| def dc_processor.ProcessorFeedParser.ProcessorFeedParser.setup | ( | self | ) |

Definition at line 73 of file ProcessorFeedParser.py.

◆ storeXPath()

| def dc_processor.ProcessorFeedParser.ProcessorFeedParser.storeXPath | ( | self | ) |

storeXpath

Definition at line 338 of file ProcessorFeedParser.py.

Member Data Documentation

◆ article

| dc_processor.ProcessorFeedParser.ProcessorFeedParser.article |

Definition at line 59 of file ProcessorFeedParser.py.

◆ articles_tbl

| dc_processor.ProcessorFeedParser.ProcessorFeedParser.articles_tbl |

Definition at line 61 of file ProcessorFeedParser.py.

◆ config

| dc_processor.ProcessorFeedParser.ProcessorFeedParser.config |

Definition at line 364 of file ProcessorFeedParser.py.

◆ config_db_dir

| dc_processor.ProcessorFeedParser.ProcessorFeedParser.config_db_dir |

Definition at line 50 of file ProcessorFeedParser.py.

◆ configFile

| dc_processor.ProcessorFeedParser.ProcessorFeedParser.configFile |

Definition at line 66 of file ProcessorFeedParser.py.

◆ db_engine

| dc_processor.ProcessorFeedParser.ProcessorFeedParser.db_engine |

Definition at line 58 of file ProcessorFeedParser.py.

◆ entry

| dc_processor.ProcessorFeedParser.ProcessorFeedParser.entry |

Definition at line 52 of file ProcessorFeedParser.py.

◆ exit_code

| dc_processor.ProcessorFeedParser.ProcessorFeedParser.exit_code |

Definition at line 48 of file ProcessorFeedParser.py.

◆ input_data

| dc_processor.ProcessorFeedParser.ProcessorFeedParser.input_data |

Definition at line 60 of file ProcessorFeedParser.py.

◆ logger

| dc_processor.ProcessorFeedParser.ProcessorFeedParser.logger |

Definition at line 49 of file ProcessorFeedParser.py.

◆ output_data

| dc_processor.ProcessorFeedParser.ProcessorFeedParser.output_data |

Definition at line 67 of file ProcessorFeedParser.py.

◆ PRAGMA_journal_mode

| dc_processor.ProcessorFeedParser.ProcessorFeedParser.PRAGMA_journal_mode |

Definition at line 63 of file ProcessorFeedParser.py.

◆ PRAGMA_synchronous

| dc_processor.ProcessorFeedParser.ProcessorFeedParser.PRAGMA_synchronous |

Definition at line 62 of file ProcessorFeedParser.py.

◆ PRAGMA_temp_store

| dc_processor.ProcessorFeedParser.ProcessorFeedParser.PRAGMA_temp_store |

Definition at line 64 of file ProcessorFeedParser.py.

◆ processedContent

| dc_processor.ProcessorFeedParser.ProcessorFeedParser.processedContent |

Definition at line 56 of file ProcessorFeedParser.py.

◆ pubdate

| dc_processor.ProcessorFeedParser.ProcessorFeedParser.pubdate |

Definition at line 55 of file ProcessorFeedParser.py.

◆ sqliteTimeout

| dc_processor.ProcessorFeedParser.ProcessorFeedParser.sqliteTimeout |

Definition at line 51 of file ProcessorFeedParser.py.

◆ store_xpath

| dc_processor.ProcessorFeedParser.ProcessorFeedParser.store_xpath |

Definition at line 57 of file ProcessorFeedParser.py.

◆ tagsCount

| dc_processor.ProcessorFeedParser.ProcessorFeedParser.tagsCount |

Definition at line 53 of file ProcessorFeedParser.py.

◆ tagsMask

| dc_processor.ProcessorFeedParser.ProcessorFeedParser.tagsMask |

Definition at line 54 of file ProcessorFeedParser.py.

◆ usageModel

| dc_processor.ProcessorFeedParser.ProcessorFeedParser.usageModel |

Definition at line 65 of file ProcessorFeedParser.py.
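
The member names PRAGMA_synchronous, PRAGMA_journal_mode, PRAGMA_temp_store and sqliteTimeout suggest that the class tunes an SQLite connection, although this reference does not show their values or where they are applied. The following is a minimal sketch of how settings of that kind are commonly applied with Python's standard sqlite3 module. The function name open_articles_db, the constant names and all values are assumptions made for illustration and are not taken from ProcessorFeedParser.py.

```python
import sqlite3

# Hypothetical values: the real defaults are not shown in this reference.
SQLITE_TIMEOUT = 30  # seconds to wait on a locked database (stands in for sqliteTimeout)
PRAGMAS = {
    "synchronous": "OFF",      # stands in for PRAGMA_synchronous
    "journal_mode": "MEMORY",  # stands in for PRAGMA_journal_mode
    "temp_store": "MEMORY",    # stands in for PRAGMA_temp_store
}


def open_articles_db(path=":memory:"):
    """Open an SQLite database and apply the pragma settings above."""
    conn = sqlite3.connect(path, timeout=SQLITE_TIMEOUT)
    for name, value in PRAGMAS.items():
        # PRAGMA statements do not accept bound parameters, so the
        # trusted constants are formatted into the statement text.
        conn.execute("PRAGMA {0} = {1}".format(name, value))
    return conn


conn = open_articles_db()
# Read the settings back to confirm what SQLite actually applied.
applied = {name: conn.execute("PRAGMA " + name).fetchone()[0] for name in PRAGMAS}
conn.close()
```

SQLite reports synchronous and temp_store as integers and journal_mode as a lowercase string, so reading the pragmas back is a simple way to check that a setting took effect.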

The documentation for this class was generated from the following file:

- sources/hce/dc_processor/ProcessorFeedParser.py