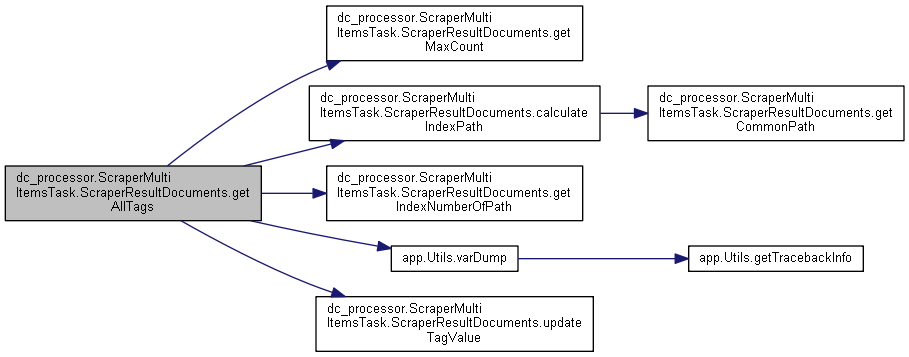

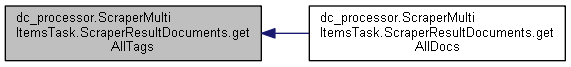

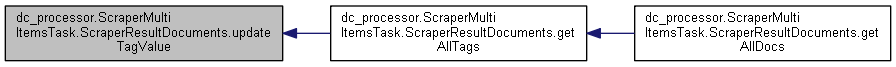

    def getAllTags(self, mandatoryTags, logger=None):
        count = self.getMaxCount(self.docs)

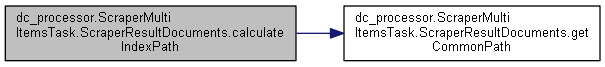

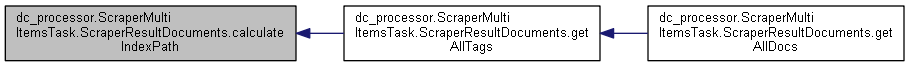

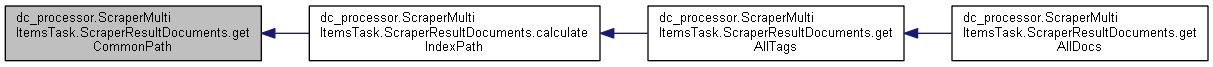

        indexPath = self.calculateIndexPath(self.etree, logger)
        if logger is not None:
            logger.info('Calculated indexPath: ' + str(indexPath))

        if logger is not None:
            for key in self.etree:
                logger.debug('len(self.etree.get(' + str(key) + ') = ' + str(len(self.etree.get(key))))
            for key in self.docs:
                logger.debug('len(self.docs.get(' + str(key) + ') = ' + str(len(self.docs.get(key))))

        for index in range(self.getMaxCount(self.etree)):
            localRes = Result(None, self.urlId)
            resultList.append(localRes)

        if logger is not None:
            logger.debug('count = ' + str(count))
            logger.debug('len(resultList) = ' + str(len(resultList)))

        for key in self.docs.keys():
            for index in range(len(self.docs.get(key))):
                if logger is not None:
                    logger.debug('==== key: ' + str(key) + ' index: ' + str(index) + ' ====')

                if len(self.etree.get(key)) > index:

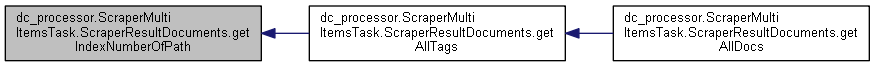

                    number = int(self.getIndexNumberOfPath(indexPath, self.etree.get(key)[index], logger))
                    if logger is not None:
                        logger.debug('number = ' + str(number) + ' self.docs.get(' + str(key) + ')[' + str(index) + '].tags: ' +
                                     varDump(self.docs.get(key)[index].tags))

                    if int(number) > 0 and int(number) <= len(self.docs.get(key)):
                        if resultList[int(number) - 1].tags.has_key(key):
                            result = self.updateTagValue(resultList[int(number) - 1], self.docs.get(key)[index].tags, key)
                            resultList[int(number) - 1].tags.update(result.tags)
                        else:  # else inferred: the listing omits this line
                            resultList[int(number) - 1].tags.update({key: self.docs.get(key)[index].tags[key]})

                            if logger is not None:
                                logger.debug("resultList[" + str(int(number) - 1) + "].tags.update({" + str(key) + ":self.docs.get(" +
                                             str(key) + ")[" + str(index) + "].tags[" + str(key) + "]})")

        for index in range(0, len(resultList)):
            for key in self.docs.keys():
                if not resultList[index].tags.has_key(key) and bool(mandatoryTags[key]) is True:
                    # ... (body omitted in the listing)
                if resultList[index].tags.has_key(key):
                    countSelected = countSelected + 1

            if countSelected == 0:
                # ... (body omitted in the listing)
            resTags.append(resultList[index])

        if len(resTags) == 0:
            resTags.append(Result(None, self.urlId))
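
The listing relies on `dict.has_key`, which exists only in Python 2; Python 3 removed it in favor of the `in` operator. Below is a minimal sketch of the same update-or-set branch on plain dictionaries. It assumes a hypothetical `merge_values` helper in place of `updateTagValue`, whose actual behavior the listing does not show.

```python
def merge_values(old, new):
    """Hypothetical stand-in for updateTagValue: collect both values in a list."""
    merged = old if isinstance(old, list) else [old]
    return merged + [new]


def set_or_merge(tags, key, value):
    """Mirror the has_key branch using the `in` operator (valid in Python 2 and 3)."""
    if key in tags:
        # Key already present: combine the stored value with the new one.
        tags[key] = merge_values(tags[key], value)
    else:
        # Key absent: store the new value directly.
        tags[key] = value
    return tags


tags = {}
set_or_merge(tags, "title", "first")
set_or_merge(tags, "title", "second")
print(tags)
```

The `in` test works on any mapping, so the same branch runs unchanged if the code is later ported to Python 3.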

def varDump(obj, stringify=True, strTypeMaxLen=256, strTypeCutSuffix='...', stringifyType=1, ignoreErrors=False, objectsHash=None, depth=0, indent=2, ensure_ascii=False, maxDepth=10)
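
The `getAllTags` listing repeats an `if logger is not None:` guard before every log call and builds each message by string concatenation. One possible alternative, sketched here under the assumption that `logger` is a standard `logging.Logger`, is to substitute a silent fallback logger once and pass the values as `%`-style arguments. The names `get_logger`, `log_sizes`, and the fallback logger name are hypothetical and do not come from the listing.

```python
import logging


def get_logger(logger=None):
    """Return the given logger, or a silent fallback so callers need no None checks."""
    if logger is not None:
        return logger
    fallback = logging.getLogger("getAllTags.null")  # hypothetical logger name
    if not fallback.handlers:
        fallback.addHandler(logging.NullHandler())
    fallback.propagate = False  # keep fallback records away from the root logger
    return fallback


def log_sizes(docs, logger=None):
    """Log the length of each entry in docs and return the number of keys."""
    log = get_logger(logger)
    for key in docs:
        # Arguments are merged into the message only when the record is emitted.
        log.debug("len(docs.get(%s)) = %d", key, len(docs.get(key)))
    return len(docs)


log_sizes({"title": [1, 2], "body": [3]})
```

With this shape, the call sites stay the same whether or not a logger is supplied, and disabled debug messages skip the string formatting cost.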