self.__checkInputData(self.input_data)

self.logger.info('Start processing on BatchItem from Batch: ' + str(self.input_data.batchId))

self.__fillProfilerMessageList(self.input_data)
self.logger.debug("self.inputData:\n%s", varDump(self.input_data))

self.outputFormat = self.__getOutputFormat(self.input_data)

altTagsMask = self.__getAltTagsMask(self.input_data)

properties = self.__getPropertiesFromInputData(self.input_data)
if properties is not None:
  self.properties = properties

algorithmName = self.properties[CONSTS.ALGORITHM_KEY][CONSTS.ALGORITHM_NAME_KEY]

self.logger.debug("Algorithm : %s" % algorithmName)
if self.usageModel == APP_CONSTS.APP_USAGE_MODEL_PROCESS:
  Utils.storePickleOnDisk(self.input_data, self.ENV_SCRAPER_STORE_PATH, "scraper.in." + \
                          str(self.input_data.urlId))

sys.stdout = open("/dev/null", "wb")

urlHost = self.calcUrlDomainCrc(self.input_data.url)
self.logger.info('urlHost: ' + str(urlHost))

self.extractors = self.__loadExtractors(algorithmName, config, urlHost)

self.logger.info("input_data url: %s, urlId: %s, siteId: %s", str(self.input_data.url), str(self.input_data.urlId),
                 str(self.input_data.siteId))

self.itr = iter(sorted(self.extractors, key=lambda extractor: extractor.rank, reverse=True))
self.logger.debug("Extractors: %s" % varDump(self.itr))

responses = self.templateExtraction(config, urlHost)

if CONSTS.MEDIA_LIMITS_NAME in self.input_data.batch_item.properties:
  self.logger.debug("Found property '%s'", str(CONSTS.MEDIA_LIMITS_NAME))
  self.mediaLimitsHandler = MediaLimitsHandler(self.input_data.batch_item.properties[CONSTS.MEDIA_LIMITS_NAME])

scraperResponseList = []
for response in responses:
  if response is not None:
    response.stripResult()

    if algorithmName != CONSTS.PROCESS_ALGORITHM_REGULAR:
      self.adjustTitle(response)
      self.adjustLinkURL(response)
      self.adjustPartialReferences(response)
    self.logger.debug("PDate: %s" % str(self.input_data.batch_item.urlObj.pDate))
    self.logger.debug("PDate type: %s" % str(type(self.input_data.batch_item.urlObj.pDate)))

    self.preparseResponse(response)

    self.logger.debug('>>>>> self.properties = ' + varDump(self.properties))

    pdateSourceMask = APP_CONSTS.PDATE_SOURCES_MASK_BIT_DEFAULT
    pdateSourceMaskOverwrite = APP_CONSTS.PDATE_SOURCES_MASK_OVERWRITE_DEFAULT

    if APP_CONSTS.PDATE_SOURCES_MASK_PROP_NAME in self.input_data.batch_item.properties:
      pdateSourceMask = int(self.input_data.batch_item.properties[APP_CONSTS.PDATE_SOURCES_MASK_PROP_NAME])

    if APP_CONSTS.PDATE_SOURCES_MASK_OVERWRITE_PROP_NAME in self.input_data.batch_item.properties:
      pdateSourceMaskOverwrite = \
        int(self.input_data.batch_item.properties[APP_CONSTS.PDATE_SOURCES_MASK_OVERWRITE_PROP_NAME])

    self.logger.debug('pdateSourceMask = %s, pdateSourceMaskOverwrite = %s',
                      str(pdateSourceMask), str(pdateSourceMaskOverwrite))

    self.logger.debug("!!! self.input_data.batch_item.urlObj.pDate = " + str(self.input_data.batch_item.urlObj.pDate))

    if pdateSourceMask & APP_CONSTS.PDATE_SOURCES_MASK_RSS_FEED:
      # As written, this test is always true: it has the form (X) or not X.
      if (pdateSourceMaskOverwrite & APP_CONSTS.PDATE_SOURCES_MASK_RSS_FEED) or \
          not pdateSourceMaskOverwrite & APP_CONSTS.PDATE_SOURCES_MASK_RSS_FEED:
        self.pubdate, timezone = self.extractPubdateRssFeed(self.input_data.siteId, self.input_data.url)

    if CONSTS.TAG_DC_DATE in response.tags and pdateSourceMask & APP_CONSTS.PDATE_SOURCES_MASK_DC_DATE:
      if (pdateSourceMaskOverwrite & APP_CONSTS.PDATE_SOURCES_MASK_DC_DATE and self.pubdate is None) or \
          not pdateSourceMaskOverwrite & APP_CONSTS.PDATE_SOURCES_MASK_DC_DATE:
        if CONSTS.TAG_PUB_DATE not in response.tags or \
            (isinstance(response.tags[CONSTS.TAG_PUB_DATE]["data"], basestring) and \
             response.tags[CONSTS.TAG_PUB_DATE]["data"].strip() == ""):
          response.tags[CONSTS.TAG_PUB_DATE] = copy.deepcopy(response.tags[CONSTS.TAG_DC_DATE])
          response.tags[CONSTS.TAG_PUB_DATE]["name"] = CONSTS.TAG_PUB_DATE
          if len(response.tags[CONSTS.TAG_PUB_DATE]) > 0 and response.tags[CONSTS.TAG_PUB_DATE][0]:
            self.pubdate = response.tags[CONSTS.TAG_PUB_DATE][0]
            self.logger.debug("Pubdate from 'dc_date': " + str(self.pubdate))

    if pdateSourceMask & APP_CONSTS.PDATE_SOURCES_MASK_PUBDATE:
      if (pdateSourceMaskOverwrite & APP_CONSTS.PDATE_SOURCES_MASK_PUBDATE and self.pubdate is None) or \
          not pdateSourceMaskOverwrite & APP_CONSTS.PDATE_SOURCES_MASK_PUBDATE:
        pubdate, timezone = self.normalizeDatetime(response, algorithmName)
        if pubdate is not None:
          self.pubdate = pubdate
          self.logger.debug("Pubdate from 'pubdate': " + str(self.pubdate))

    if pdateSourceMask & APP_CONSTS.PDATE_SOURCES_MASK_NOW:
      if (pdateSourceMaskOverwrite & APP_CONSTS.PDATE_SOURCES_MASK_NOW and self.pubdate is None) or \
          not pdateSourceMaskOverwrite & APP_CONSTS.PDATE_SOURCES_MASK_NOW:
        self.pubdate = SQLExpression("NOW()")
        self.logger.debug("Pubdate from 'SQL NOW()': " + str(self.pubdate))

    if pdateSourceMask & APP_CONSTS.PDATE_SOURCES_MASK_SQL_EXPRESSION and \
        APP_CONSTS.PDATE_SOURCES_EXPRESSION_PROP_NAME in self.properties:
      if (pdateSourceMaskOverwrite & APP_CONSTS.PDATE_SOURCES_MASK_SQL_EXPRESSION and self.pubdate is None) or \
          not pdateSourceMaskOverwrite & APP_CONSTS.PDATE_SOURCES_MASK_SQL_EXPRESSION:
        self.pubdate = SQLExpression(str(self.properties[APP_CONSTS.PDATE_SOURCES_EXPRESSION_PROP_NAME]))
        self.logger.debug("Pubdate from 'sql expression': " + str(self.pubdate))

    self.pubdate = self.pubdateMonthOrder(self.pubdate, self.input_data.batch_item.properties, self.input_data.url)

    self.input_data.batch_item.urlObj.pDate = self.pubdate
    self.pubdate = FieldsSQLExpressionEvaluator.evaluatePDateTime(self.input_data.batch_item.properties,
                                                                  # (source line 1606 is not shown in this listing)
                                                                  self.input_data.batch_item.urlObj,
                                                                  # (source lines 1608-1611, including the end of this call, are not shown in this listing)

    self.pubdate, timezone = self.pubdateTransform(self.pubdate,
                                                   # (source line 1613 is not shown in this listing)
                                                   self.input_data.batch_item.properties,
                                                   self.input_data.url)

    self.addCustomTag(result=response, tag_name=CONSTS.TAG_PUBDATE_TZ, tag_value=[timezone])

    if "pubdate" in response.tags and "data" in response.tags["pubdate"] and \
        len(response.tags["pubdate"]["data"]) > 0:
      response.tags["pubdate"]["data"][0] = self.pubdate

    if self.outputFormat is None:
      self.logger.debug(">>> Warning, can't extract output format")

    self.formatOutputData(response, self.outputFormat)

    response.recalcTagMaskCount(None, altTagsMask)

    self.logger.debug("response.tagsCount: " + str(response.tagsCount) + \
                      " response.tagsMasks: " + str(response.tagsMask) + \
                      "\n>>> Resp: " + varDump(response))

if len(responses) > 0:
  startTime = responses[0].start

finishTime = time.time()

for response in responses:
  response.start = startTime
  response.finish = finishTime
  response.data["time"] = "%s" % str(finishTime - startTime)

  response = self.applyHTTPRedirectLink(self.input_data.batch_item.siteId, self.input_data.batch_item.urlObj.url,
                                        self.input_data.batch_item.properties, response)

  processedContent = self.getProcessedContent(response)
  scraperResponseList.append(ScraperResponse(response.tagsCount, response.tagsMask, self.pubdate, \
                                             processedContent, self.errorMask))

self.logger.debug('len(scraperResponseList): ' + varDump(len(scraperResponseList)))
self.logger.debug('maxURLsFromPage: ' + str(self.input_data.batch_item.urlObj.maxURLsFromPage))

if self.input_data.batch_item.urlObj.maxURLsFromPage is not None and \
    int(self.input_data.batch_item.urlObj.maxURLsFromPage) > 0 and \
    int(self.input_data.batch_item.urlObj.maxURLsFromPage) < len(scraperResponseList):
  self.logger.debug('>>> scraperResponseList 1')
  scraperResponseList = scraperResponseList[0: int(self.input_data.batch_item.urlObj.maxURLsFromPage)]
  self.logger.debug('>>> scraperResponseList 2')
  scraperResponseList[-1].errorMask |= APP_CONSTS.ERROR_MAX_URLS_FROM_PAGE
  self.logger.debug("Truncated scraper responces list because over limit 'maxURLsFromPage' = " + \
                    str(self.input_data.batch_item.urlObj.maxURLsFromPage) + " set errorMask = " + \
                    str(APP_CONSTS.ERROR_MAX_URLS_FROM_PAGE))

if self.usageModel == APP_CONSTS.APP_USAGE_MODEL_PROCESS:
  output_pickled_object = pickle.dumps(scraperResponseList)
  Utils.storePickleOnDisk(output_pickled_object, self.ENV_SCRAPER_STORE_PATH,
                          "scraper.out." + str(self.input_data.urlId))
  print output_pickled_object

self.output_data = scraperResponseList
self.logger.debug('self.output_data: ' + str(varDump(self.output_data)))
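
The publication-date selection in the listing above applies the same rule to each source: a bit in `pdateSourceMask` enables the source, and the matching bit in `pdateSourceMaskOverwrite` decides whether the source may replace a date that an earlier source already set. When the overwrite bit is set, the source only fills an empty date; when it is clear, the source always assigns. The following is a minimal standalone sketch of that rule, not the project's code. The bit values and the helper names `may_assign` and `pick_pubdate` are hypothetical (the real `APP_CONSTS` values are not shown in the listing), and each source is simplified to a precomputed value.

```python
# Hypothetical bit values; the real APP_CONSTS.PDATE_SOURCES_MASK_* values are not shown in the listing.
MASK_RSS_FEED = 1
MASK_DC_DATE = 2
MASK_PUBDATE = 4
MASK_NOW = 8


def may_assign(source_bit, source_mask, overwrite_mask, current):
    """Return True if the source is enabled and allowed to set the date."""
    if not source_mask & source_bit:
        return False  # source is disabled in the source mask
    if overwrite_mask & source_bit:
        return current is None  # bit set: only fill a date that is still empty
    return True  # bit clear: always assign, replacing any earlier value


def pick_pubdate(candidates, source_mask, overwrite_mask):
    """Walk (source_bit, value) pairs in order and return the selected date.

    A value of None models a source that produced nothing.
    """
    pubdate = None
    for source_bit, value in candidates:
        if value is None:
            continue
        if may_assign(source_bit, source_mask, overwrite_mask, pubdate):
            pubdate = value
    return pubdate


candidates = [
    (MASK_DC_DATE, "2020-01-01"),
    (MASK_PUBDATE, "2021-05-05"),
    (MASK_NOW, "NOW()"),
]
all_sources = MASK_RSS_FEED | MASK_DC_DATE | MASK_PUBDATE | MASK_NOW
last_wins = pick_pubdate(candidates, all_sources, 0)
now_as_fallback = pick_pubdate(candidates, all_sources, MASK_NOW)
```

With every source enabled and an overwrite mask of 0, the last source that yields a value wins. Setting a source's bit in the overwrite mask turns that source into a fallback that is used only when no earlier source produced a date.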

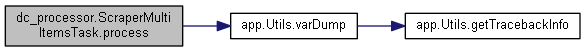

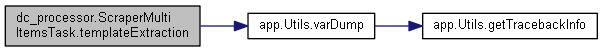

def process(self, config)

def varDump(obj, stringify=True, strTypeMaxLen=256, strTypeCutSuffix='...', stringifyType=1, ignoreErrors=False, objectsHash=None, depth=0, indent=2, ensure_ascii=False, maxDepth=10)
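
The `maxURLsFromPage` step in `process` truncates the result list and then flags the last surviving item through its `errorMask`. A small sketch of that pattern follows, under stated assumptions: the `Item` class, the helper name `truncate_with_flag`, and the bit value of the error flag are hypothetical stand-ins, since `ScraperResponse` and `APP_CONSTS.ERROR_MAX_URLS_FROM_PAGE` are not defined in this listing.

```python
# Hypothetical flag value; the real APP_CONSTS.ERROR_MAX_URLS_FROM_PAGE is not shown in the listing.
ERROR_MAX_URLS_FROM_PAGE = 1 << 4


class Item(object):
    """Hypothetical stand-in for ScraperResponse; only errorMask matters here."""

    def __init__(self, name):
        self.name = name
        self.errorMask = 0


def truncate_with_flag(items, max_items, error_bit):
    """Cut items down to max_items and mark the last kept item with error_bit.

    The list is returned unchanged when max_items is None, not positive,
    or not smaller than the current length.
    """
    if max_items is None:
        return items
    limit = int(max_items)  # the limit may arrive as a string
    if 0 < limit < len(items):
        items = items[:limit]
        items[-1].errorMask |= error_bit
    return items


items = [Item("r%d" % i) for i in range(5)]
kept = truncate_with_flag(items, "3", ERROR_MAX_URLS_FROM_PAGE)
```

Because the flag is OR-ed into the mask, any error bits already set on the last kept item are preserved.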