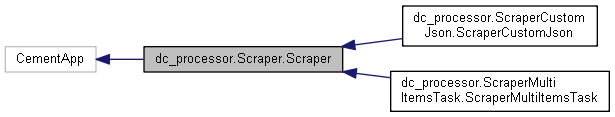

Classes | |

| class | Meta |

Public Member Functions | |

| def | __init__ (self, usageModel=APP_CONSTS.APP_USAGE_MODEL_PROCESS, configFile=None, logger=None, inputData=None) |

| def | setup (self) |

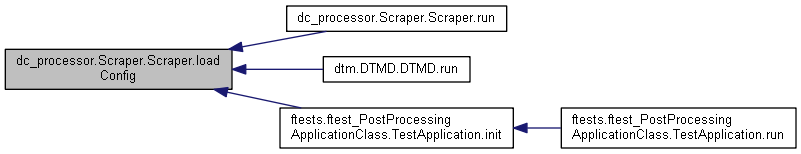

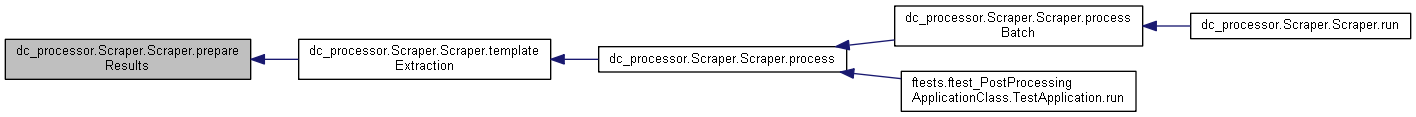

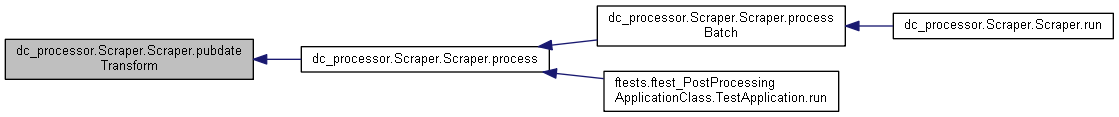

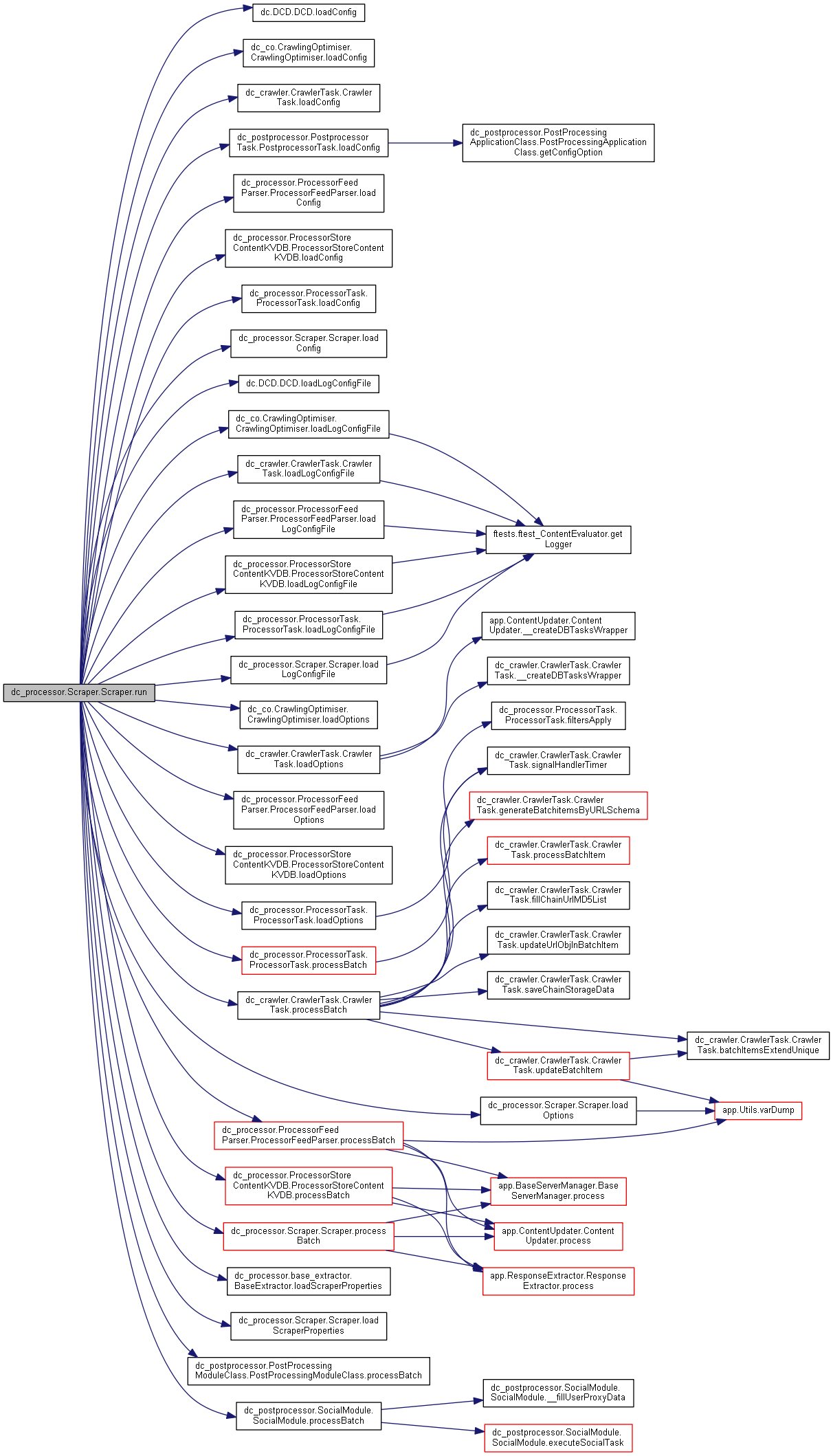

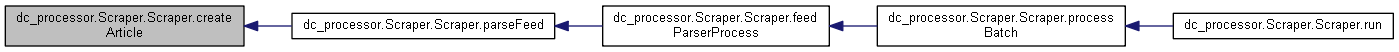

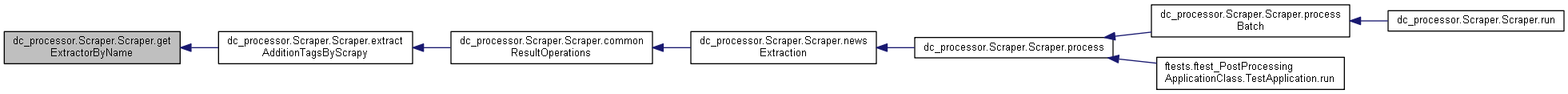

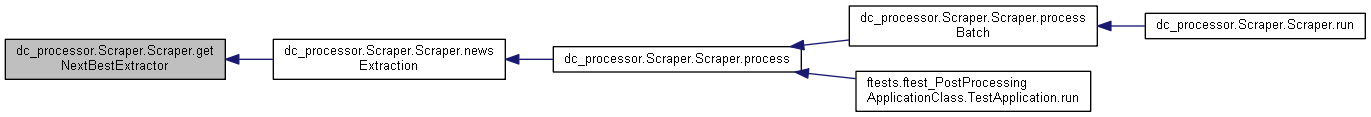

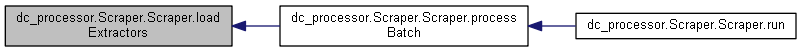

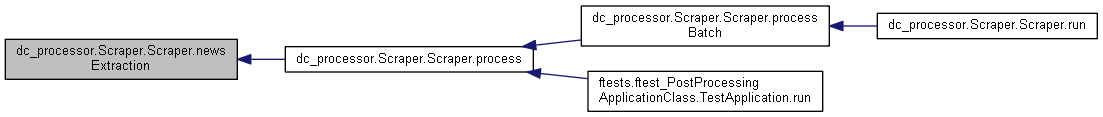

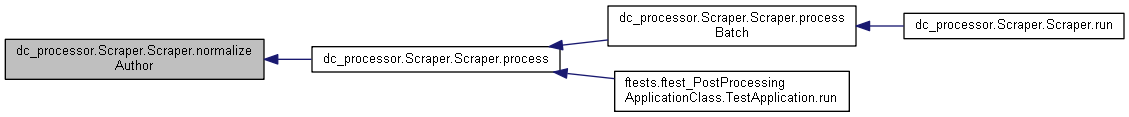

| def | run (self) |

| def | checkDOMElement (self, elem) |

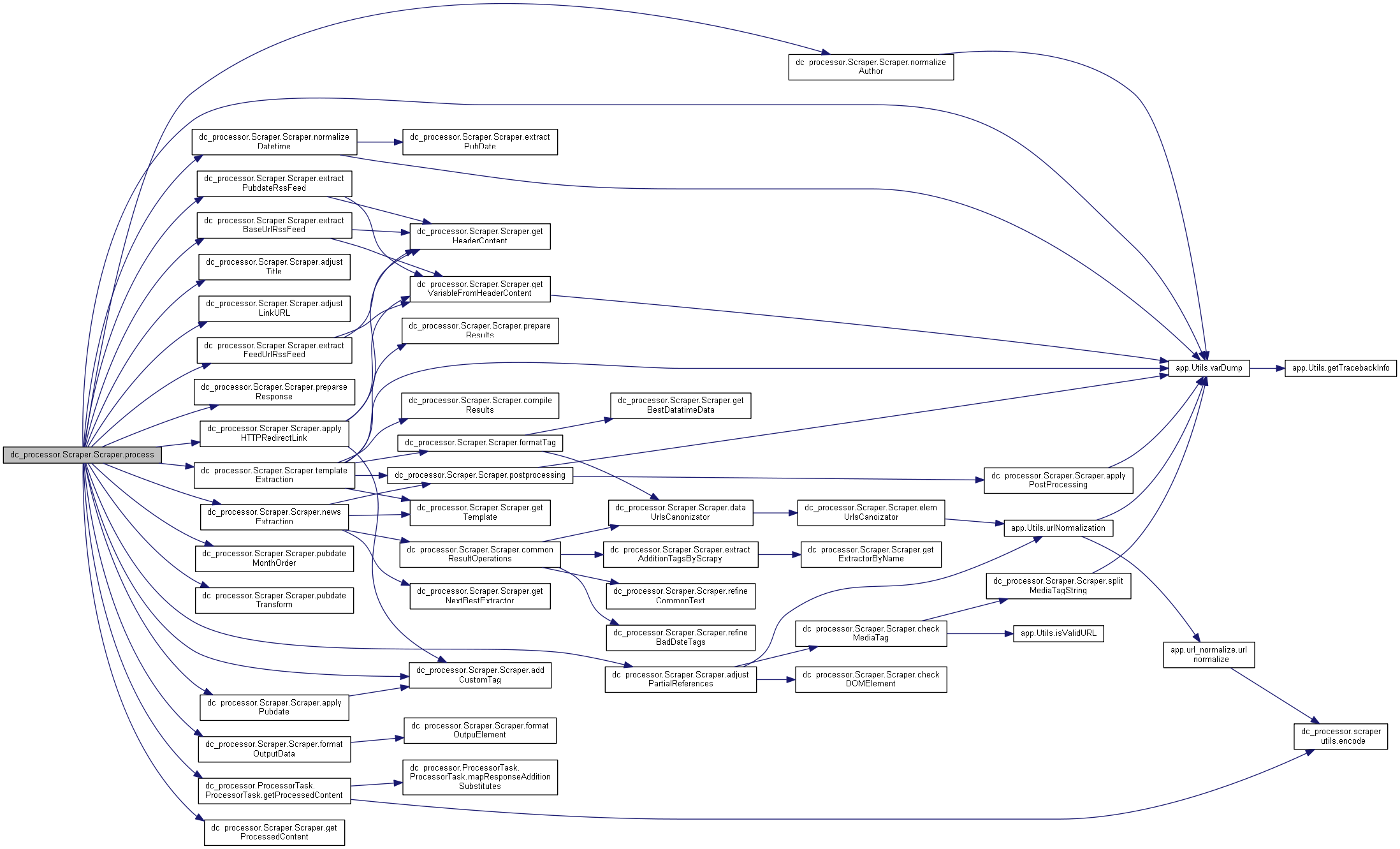

| def | adjustPartialReferences (self, response) |

| def | adjustTitle (self, response) |

| def | adjustLinkURL (self, response) |

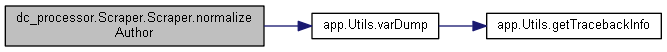

| def | normalizeAuthor (self, confProp, procProp, response) |

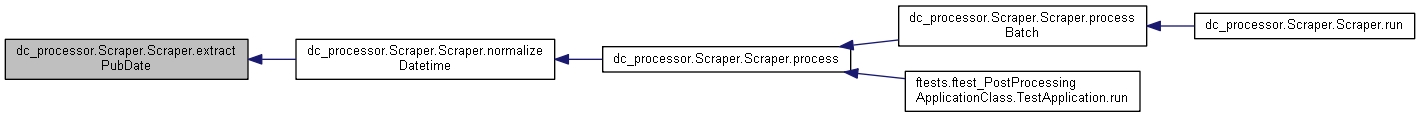

| def | normalizeDatetime (self, response, algorithmName) |

| def | extractPubDate (self, response, dataTagName) |

| def | pubdateTransform (self, rawPubdate, rawTimezone, properties, urlString) |

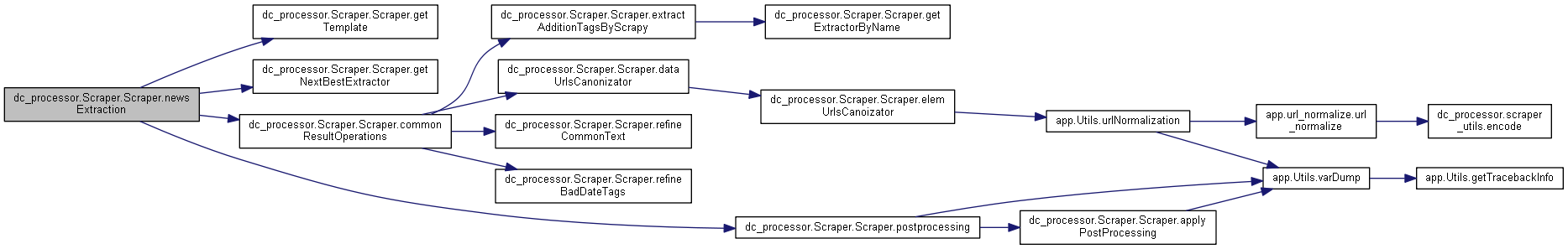

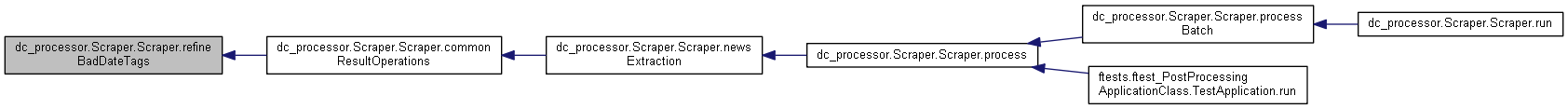

| def | refineBadDateTags (self, response) |

| def | calcUrlDomainCrc (self, url) |

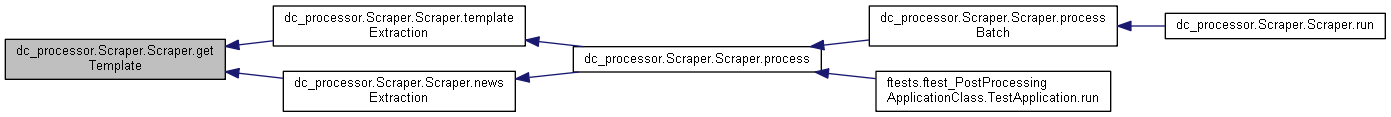

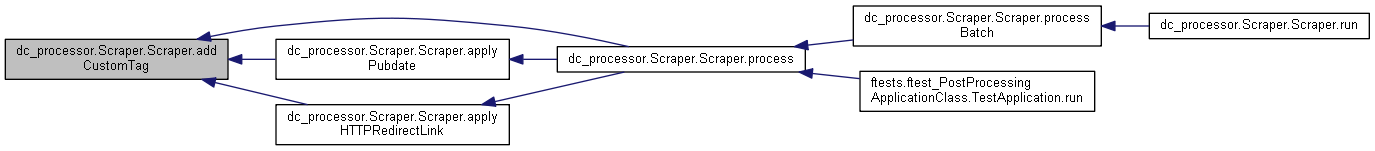

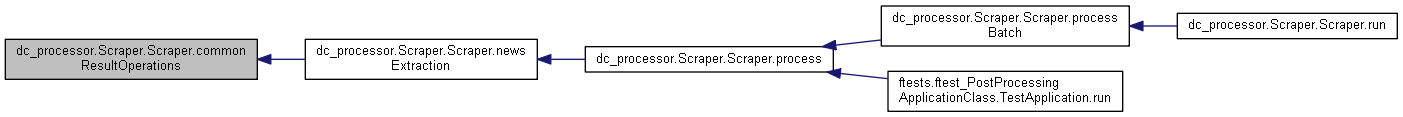

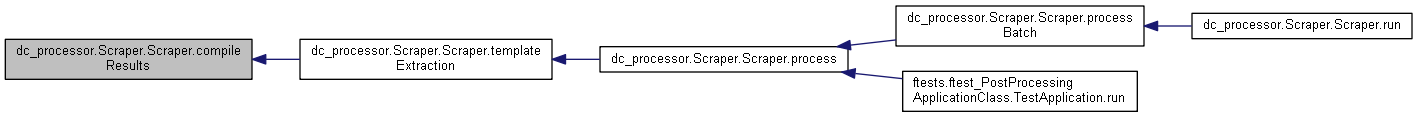

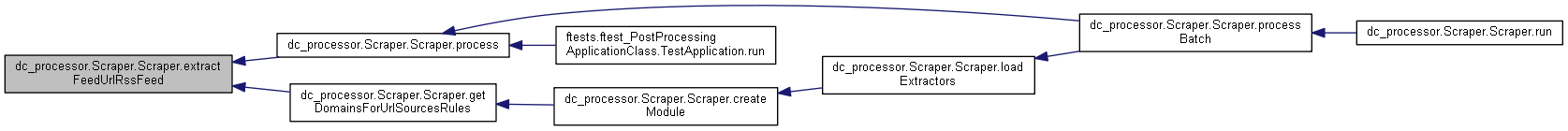

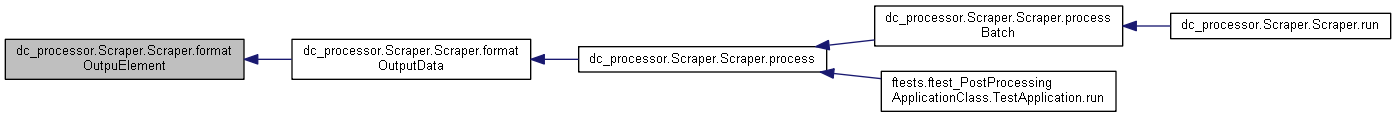

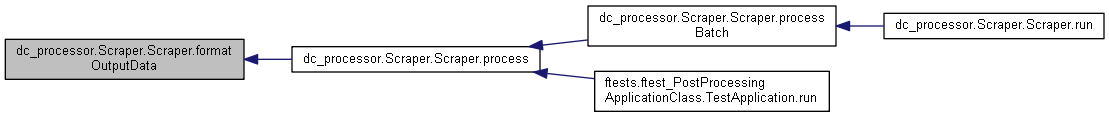

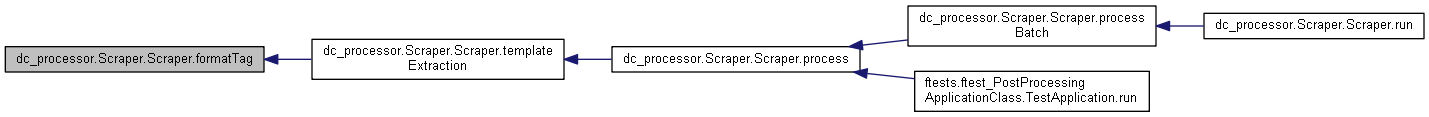

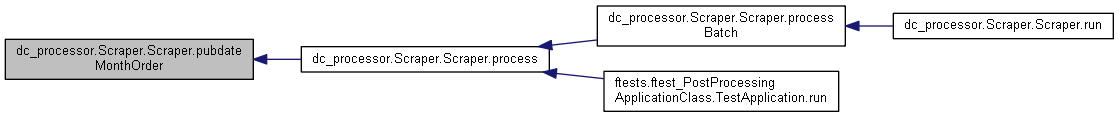

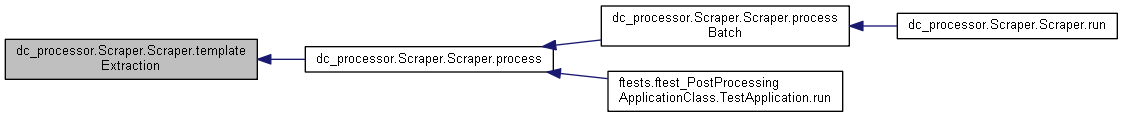

| def | process (self, config) |

| def | applyPubdate (self, response, pubdate) |

| def | preparseResponse (self, response) |

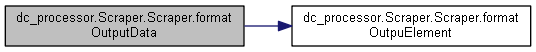

| def | formatOutpuElement (self, elem, localOutputFormat) |

| def | formatOutputData (self, response, localOutputFormat) |

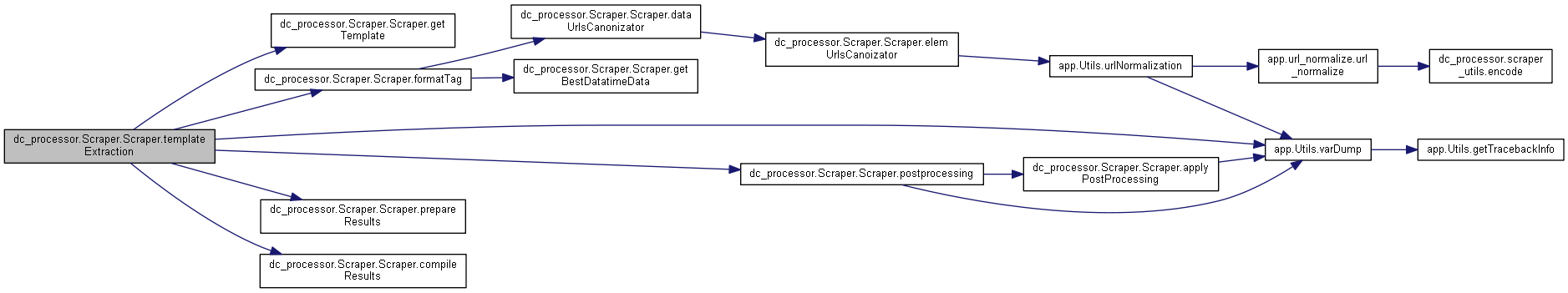

| def | getTemplate (self, explicit=True) |

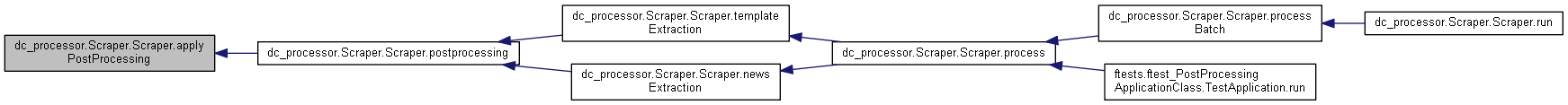

| def | postprocessing (self, result, rule, tag) |

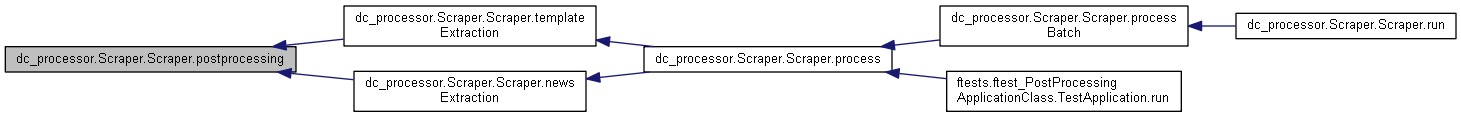

| def | templateExtraction (self, config, urlHost) |

| def | addCustomTag (self, result, tag_name, tag_value) |

| def | compileResults (self, result, resultsList, key, xPathPreparing=None) |

| def | prepareResults (self, resultsList) |

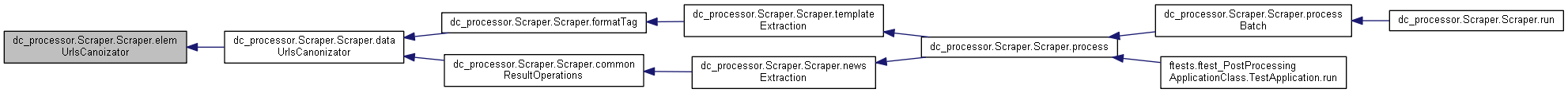

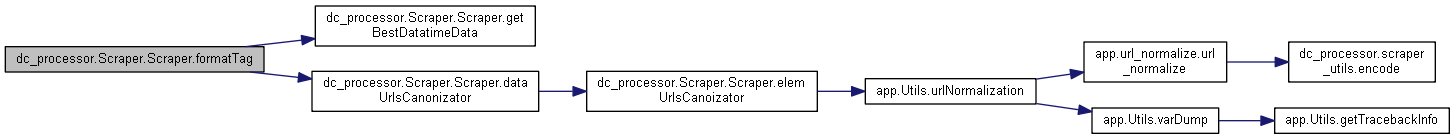

| def | elemUrlsCanoizator (self, data, baseUrl=None, firstDelim=' ', secondDelim=', useAdditionEncoding=False) |

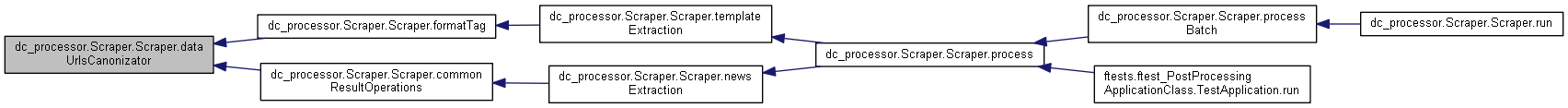

| def | dataUrlsCanonizator (self, data, baseUrl=None, useAdditionEncoding=False) |

| def | formatTag (self, result, path, key, pathDict, isExtract) |

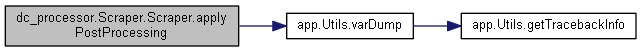

| def | applyPostProcessing (self, result, key, postProcessingRE) |

| def | processingHTMLData (self, htmlBuf, bufFormat) |

| def | getBestDatatimeData (self, data) |

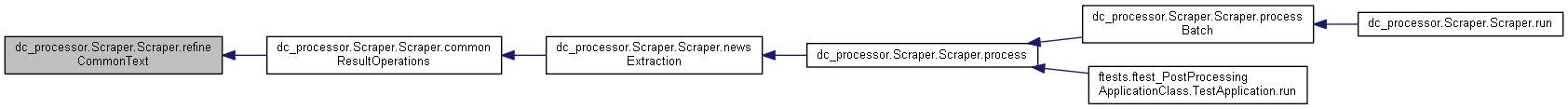

| def | newsExtraction (self) |

| def | commonResultOperations (self, result) |

| def | replaceLoopValue (self, buf, replaceFrom, replaceTo) |

| def | refineCommonText (self, tagName, result) |

| def | extractAdditionTagsByScrapy (self, localResult, key, tagsXpaths) |

| def | getNextBestExtractor (self) |

| def | getProcessedContent (self, result) |

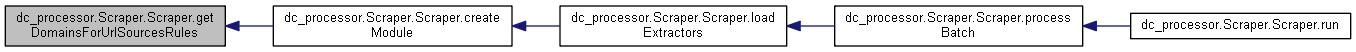

| def | loadExtractors (self) |

| def | processBatch (self) |

| def | loadConfig (self) |

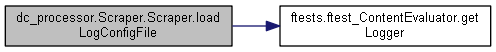

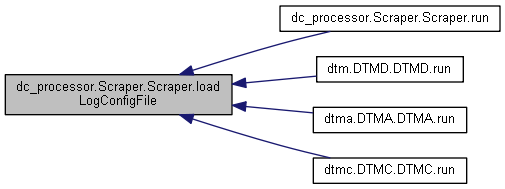

| def | loadLogConfigFile (self) |

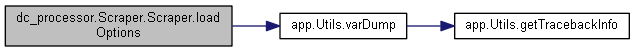

| def | loadOptions (self) |

| def | loadScraperProperties (self) |

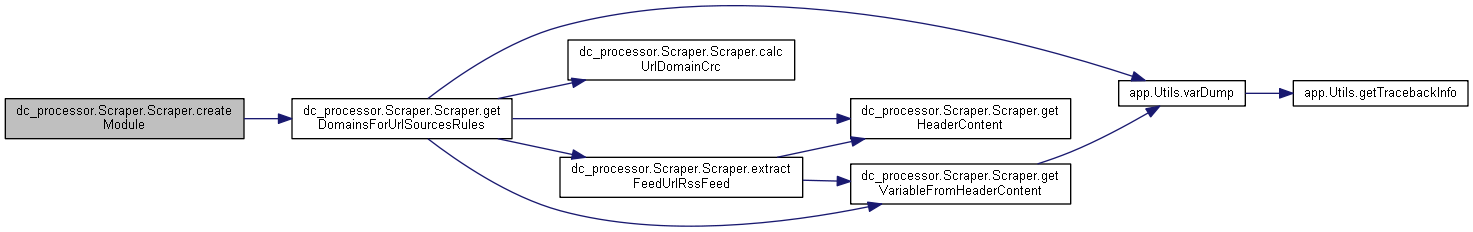

| def | createModule (self, module_name) |

| def | getExtractorByName (self, extractorName) |

| def | getExitCode (self) |

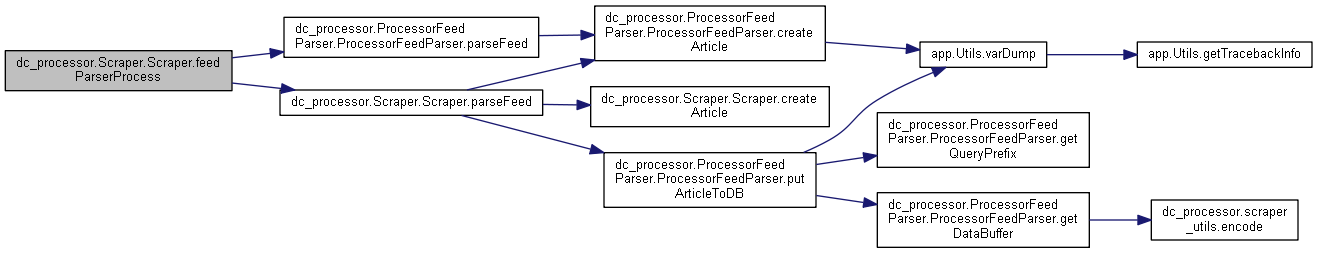

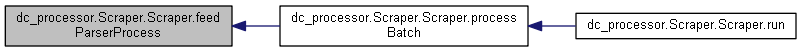

| def | feedParserProcess (self) |

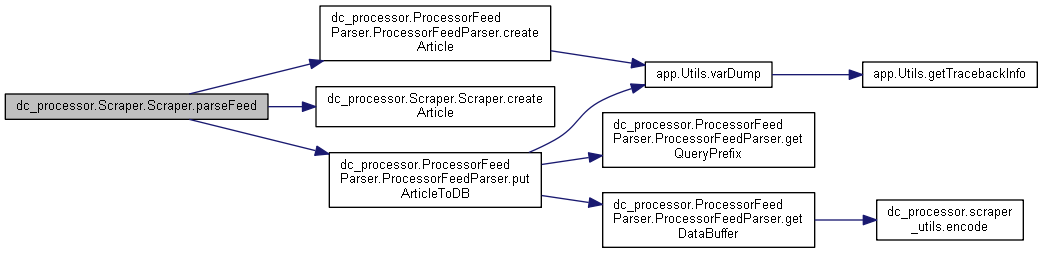

| def | createArticle (self) |

| def | parseFeed (self) |

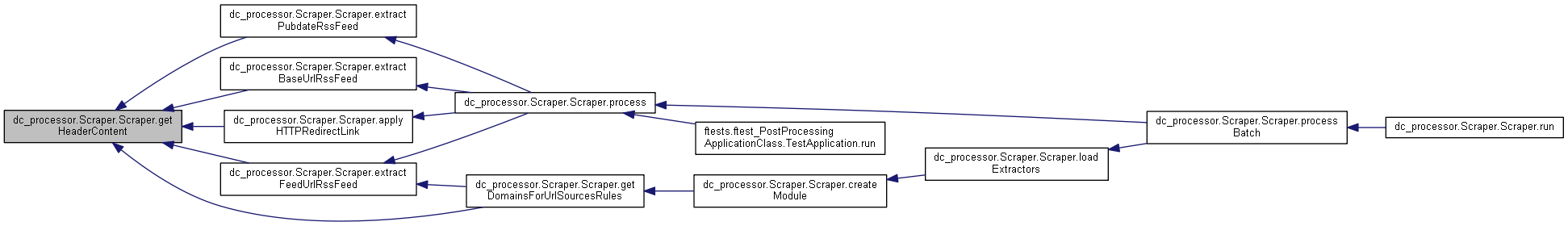

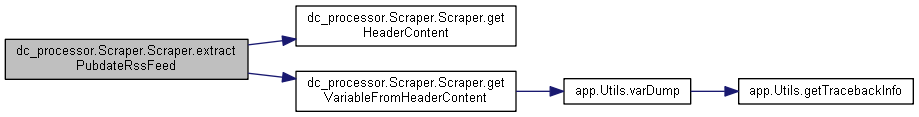

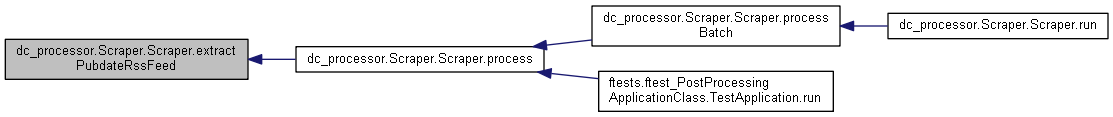

| def | extractPubdateRssFeed (self, siteId, url) |

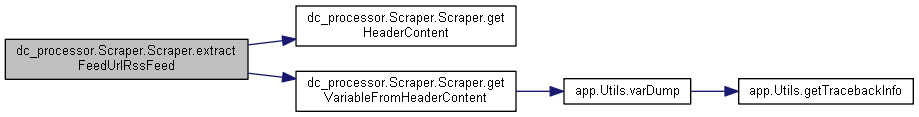

| def | extractFeedUrlRssFeed (self, siteId, url) |

| def | extractBaseUrlRssFeed (self, siteId, url) |

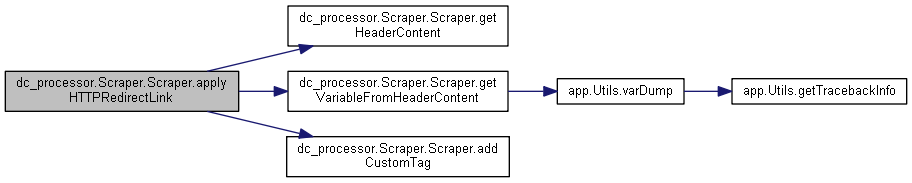

| def | getHeaderContent (self, siteId, url) |

| def | getVariableFromHeaderContent (self, headerContent, name, makeDecode=True) |

| def | pubdateMonthOrder (self, rawPubdate, properties, urlString) |

| def | checkMediaTag (self, urlStringMedia) |

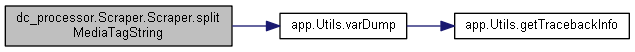

| def | splitMediaTagString (self, urlStringMedia) |

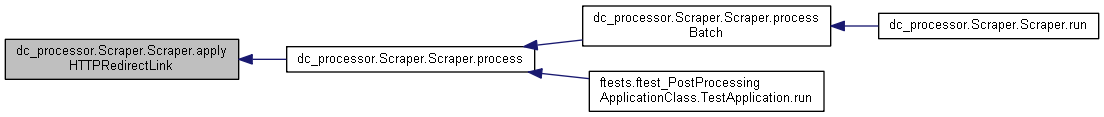

| def | applyHTTPRedirectLink (self, siteId, url, properties, response) |

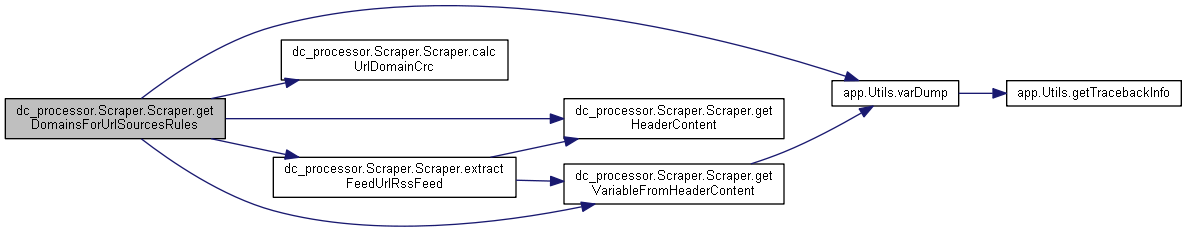

| def | getDomainsForUrlSourcesRules (self, urlSourcesRules) |

Public Attributes | |

| exitCode | |

| itr | |

| extractor | |

| extractors | |

| input_data | |

| logger | |

| sqliteTimeout | |

| scraperPropFileName | |

| properties | |

| algorithm_name | |

| pubdate | |

| response.tagsLangDetecting(self.properties[CONSTS.LANG_PROP_NAME]) More... | |

| message_queue | |

| entry | |

| article | |

| outputFormat | |

| errorMask | |

| metrics | |

| altTagsMask | |

| tagsCount | |

| tagsMask | |

| processedContent | |

| usageModel | |

| configFile | |

| output_data | |

| urlHost | |

| xpathSplitString | |

| useCurrentYear | |

| datetimeNewsNames | |

| datetimeTemplateTypes | |

| tagsTypes | |

| attrConditions | |

| dbWrapper | |

| mediaLimitsHandler | |

| urlSourcesRules | |

| tagReduceMask | |

| baseUrl | |

| config | |

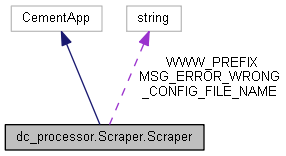

Static Public Attributes | |

| string | MSG_ERROR_WRONG_CONFIG_FILE_NAME = "Config file name is wrong" |

| string | WWW_PREFIX = "www." |

Detailed Description

Definition at line 106 of file Scraper.py.

Constructor & Destructor Documentation

◆ __init__()

| def dc_processor.Scraper.Scraper.__init__ | ( | self, | |

usageModel = APP_CONSTS.APP_USAGE_MODEL_PROCESS, |

|||

configFile = None, |

|||

logger = None, |

|||

inputData = None |

|||

| ) |

Definition at line 121 of file Scraper.py.

Member Function Documentation

◆ addCustomTag()

| def dc_processor.Scraper.Scraper.addCustomTag | ( | self, | |

| result, | |||

| tag_name, | |||

| tag_value | |||

| ) |

Definition at line 1006 of file Scraper.py.

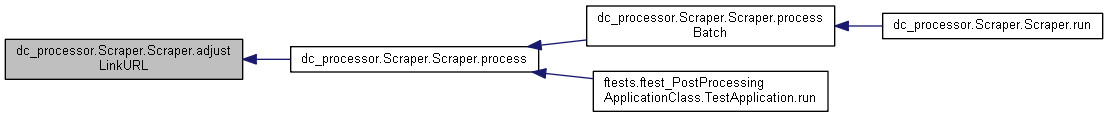

◆ adjustLinkURL()

| def dc_processor.Scraper.Scraper.adjustLinkURL | ( | self, | |

| response | |||

| ) |

Definition at line 329 of file Scraper.py.

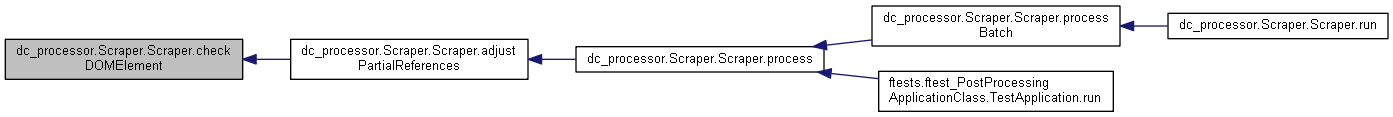

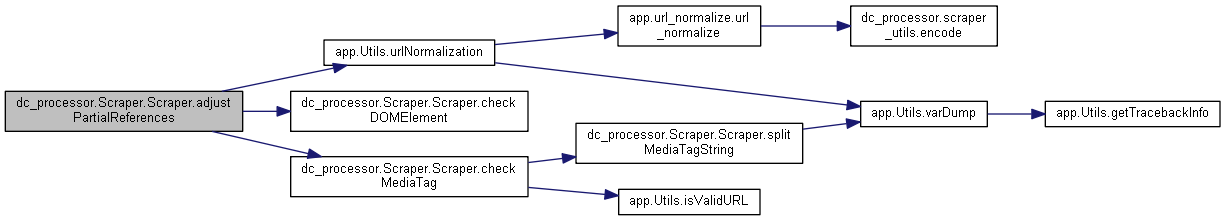

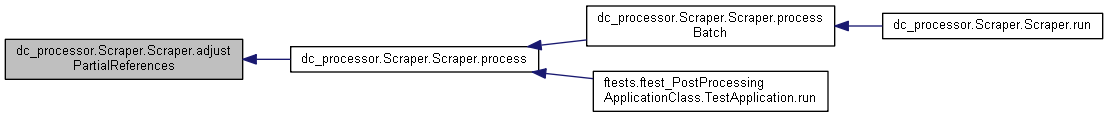

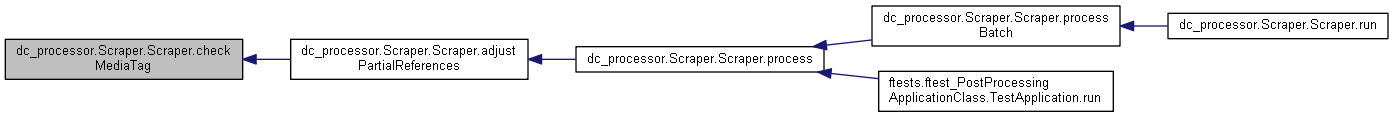

◆ adjustPartialReferences()

| def dc_processor.Scraper.Scraper.adjustPartialReferences | ( | self, | |

| response | |||

| ) |

Definition at line 214 of file Scraper.py.

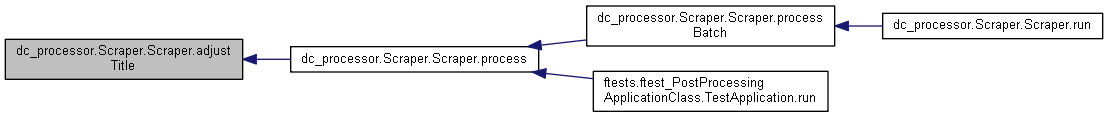

◆ adjustTitle()

| def dc_processor.Scraper.Scraper.adjustTitle | ( | self, | |

| response | |||

| ) |

Definition at line 303 of file Scraper.py.

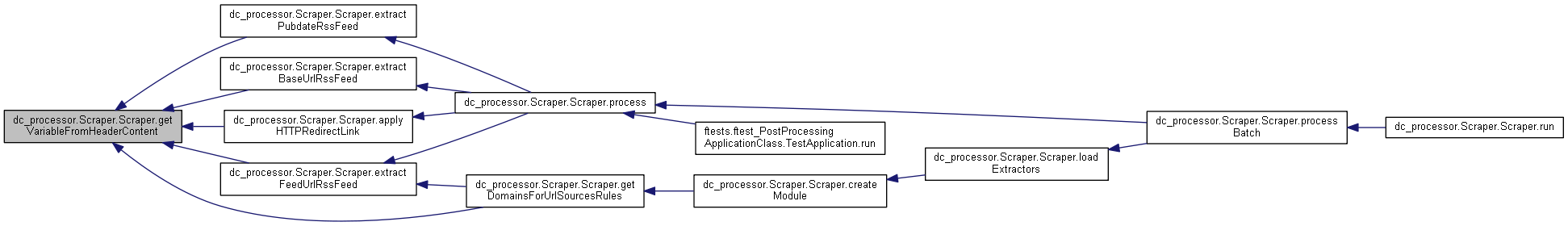

◆ applyHTTPRedirectLink()

| def dc_processor.Scraper.Scraper.applyHTTPRedirectLink | ( | self, | |

| siteId, | |||

| url, | |||

| properties, | |||

| response | |||

| ) |

Definition at line 2160 of file Scraper.py.

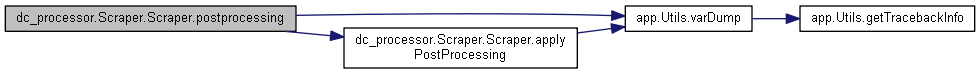

◆ applyPostProcessing()

| def dc_processor.Scraper.Scraper.applyPostProcessing | ( | self, | |

| result, | |||

| key, | |||

| postProcessingRE | |||

| ) |

Definition at line 1267 of file Scraper.py.

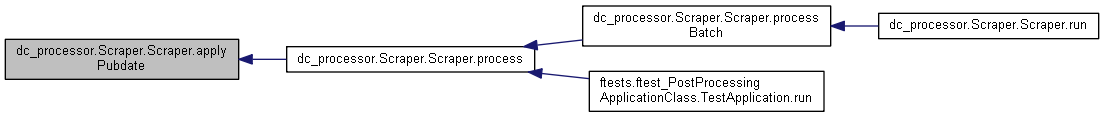

◆ applyPubdate()

| def dc_processor.Scraper.Scraper.applyPubdate | ( | self, | |

| response, | |||

| pubdate | |||

| ) |

Definition at line 818 of file Scraper.py.

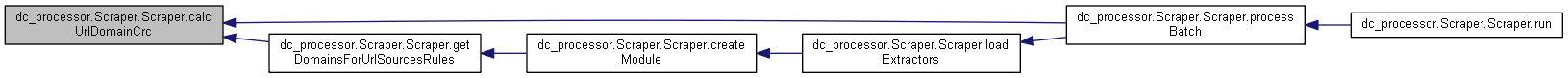

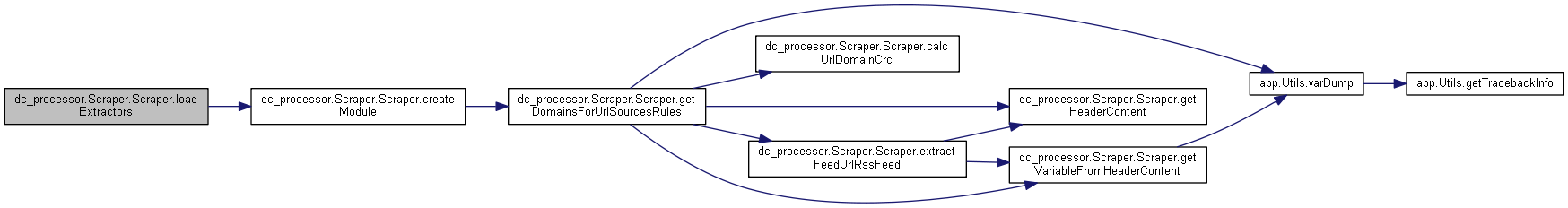

◆ calcUrlDomainCrc()

| def dc_processor.Scraper.Scraper.calcUrlDomainCrc | ( | self, | |

| url | |||

| ) |

◆ checkDOMElement()

| def dc_processor.Scraper.Scraper.checkDOMElement | ( | self, | |

| elem | |||

| ) |

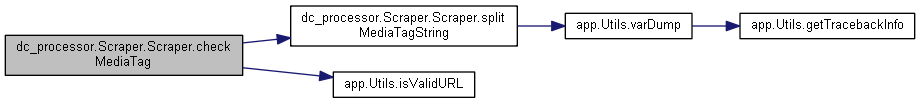

◆ checkMediaTag()

| def dc_processor.Scraper.Scraper.checkMediaTag | ( | self, | |

| urlStringMedia | |||

| ) |

Definition at line 2090 of file Scraper.py.

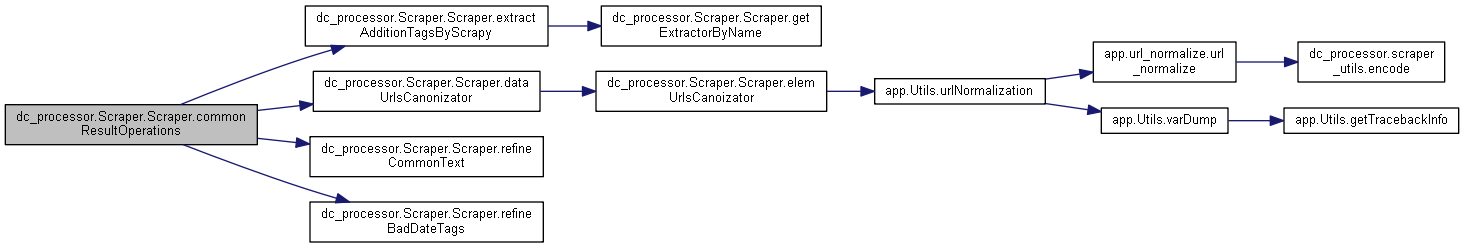

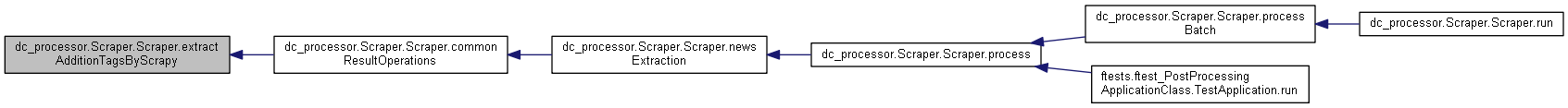

◆ commonResultOperations()

| def dc_processor.Scraper.Scraper.commonResultOperations | ( | self, | |

| result | |||

| ) |

Definition at line 1423 of file Scraper.py.

◆ compileResults()

| def dc_processor.Scraper.Scraper.compileResults | ( | self, | |

| result, | |||

| resultsList, | |||

| key, | |||

xPathPreparing = None |

|||

| ) |

Definition at line 1035 of file Scraper.py.

◆ createArticle()

| def dc_processor.Scraper.Scraper.createArticle | ( | self | ) |

Definition at line 1854 of file Scraper.py.

◆ createModule()

| def dc_processor.Scraper.Scraper.createModule | ( | self, | |

| module_name | |||

| ) |

Definition at line 1794 of file Scraper.py.

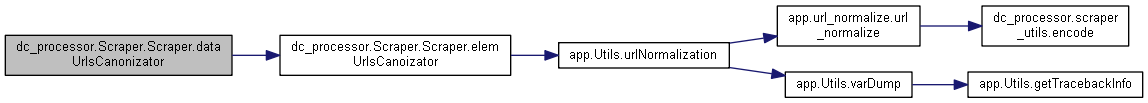

◆ dataUrlsCanonizator()

| def dc_processor.Scraper.Scraper.dataUrlsCanonizator | ( | self, | |

| data, | |||

baseUrl = None, |

|||

useAdditionEncoding = False |

|||

| ) |

Definition at line 1129 of file Scraper.py.

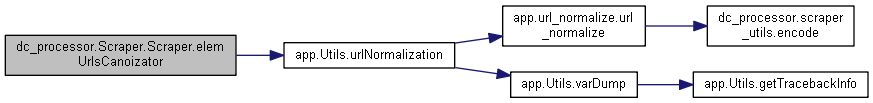

◆ elemUrlsCanoizator()

| def dc_processor.Scraper.Scraper.elemUrlsCanoizator | ( | self, | |

| data, | |||

baseUrl = None, |

|||

firstDelim = ' ', |

|||

secondDelim = ', |

|||

useAdditionEncoding = False |

|||

| ) |

Definition at line 1101 of file Scraper.py.

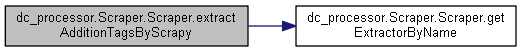

◆ extractAdditionTagsByScrapy()

| def dc_processor.Scraper.Scraper.extractAdditionTagsByScrapy | ( | self, | |

| localResult, | |||

| key, | |||

| tagsXpaths | |||

| ) |

Definition at line 1493 of file Scraper.py.

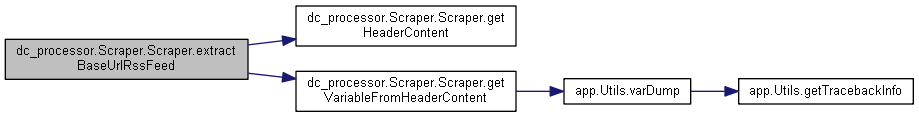

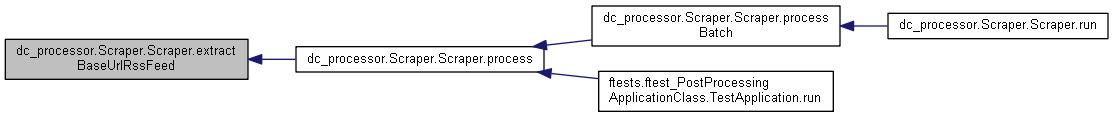

◆ extractBaseUrlRssFeed()

| def dc_processor.Scraper.Scraper.extractBaseUrlRssFeed | ( | self, | |

| siteId, | |||

| url | |||

| ) |

Definition at line 1965 of file Scraper.py.

◆ extractFeedUrlRssFeed()

| def dc_processor.Scraper.Scraper.extractFeedUrlRssFeed | ( | self, | |

| siteId, | |||

| url | |||

| ) |

Definition at line 1946 of file Scraper.py.

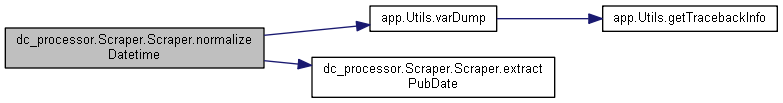

◆ extractPubDate()

| def dc_processor.Scraper.Scraper.extractPubDate | ( | self, | |

| response, | |||

| dataTagName | |||

| ) |

Definition at line 468 of file Scraper.py.

◆ extractPubdateRssFeed()

| def dc_processor.Scraper.Scraper.extractPubdateRssFeed | ( | self, | |

| siteId, | |||

| url | |||

| ) |

Definition at line 1916 of file Scraper.py.

◆ feedParserProcess()

| def dc_processor.Scraper.Scraper.feedParserProcess | ( | self | ) |

Definition at line 1832 of file Scraper.py.

◆ formatOutpuElement()

| def dc_processor.Scraper.Scraper.formatOutpuElement | ( | self, | |

| elem, | |||

| localOutputFormat | |||

| ) |

Definition at line 851 of file Scraper.py.

◆ formatOutputData()

| def dc_processor.Scraper.Scraper.formatOutputData | ( | self, | |

| response, | |||

| localOutputFormat | |||

| ) |

Definition at line 872 of file Scraper.py.

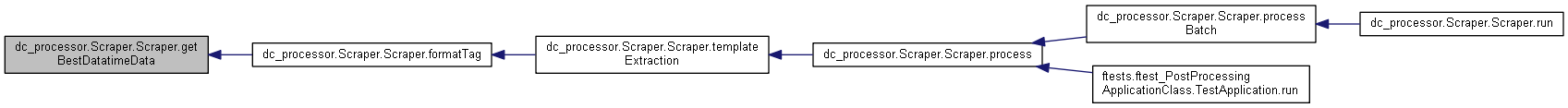

◆ formatTag()

| def dc_processor.Scraper.Scraper.formatTag | ( | self, | |

| result, | |||

| path, | |||

| key, | |||

| pathDict, | |||

| isExtract | |||

| ) |

Definition at line 1144 of file Scraper.py.

◆ getBestDatatimeData()

| def dc_processor.Scraper.Scraper.getBestDatatimeData | ( | self, | |

| data | |||

| ) |

◆ getDomainsForUrlSourcesRules()

| def dc_processor.Scraper.Scraper.getDomainsForUrlSourcesRules | ( | self, | |

| urlSourcesRules | |||

| ) |

Definition at line 2207 of file Scraper.py.

◆ getExitCode()

| def dc_processor.Scraper.Scraper.getExitCode | ( | self | ) |

Definition at line 1823 of file Scraper.py.

◆ getExtractorByName()

| def dc_processor.Scraper.Scraper.getExtractorByName | ( | self, | |

| extractorName | |||

| ) |

Definition at line 1814 of file Scraper.py.

◆ getHeaderContent()

| def dc_processor.Scraper.Scraper.getHeaderContent | ( | self, | |

| siteId, | |||

| url | |||

| ) |

◆ getNextBestExtractor()

| def dc_processor.Scraper.Scraper.getNextBestExtractor | ( | self | ) |

Definition at line 1511 of file Scraper.py.

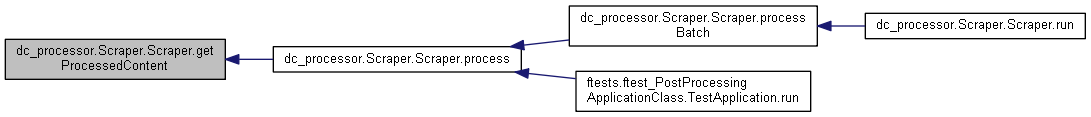

◆ getProcessedContent()

| def dc_processor.Scraper.Scraper.getProcessedContent | ( | self, | |

| result | |||

| ) |

Definition at line 1522 of file Scraper.py.

◆ getTemplate()

| def dc_processor.Scraper.Scraper.getTemplate | ( | self, | |

explicit = True |

|||

| ) |

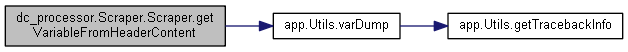

◆ getVariableFromHeaderContent()

| def dc_processor.Scraper.Scraper.getVariableFromHeaderContent | ( | self, | |

| headerContent, | |||

| name, | |||

makeDecode = True |

|||

| ) |

Definition at line 2008 of file Scraper.py.

◆ loadConfig()

| def dc_processor.Scraper.Scraper.loadConfig | ( | self | ) |

◆ loadExtractors()

| def dc_processor.Scraper.Scraper.loadExtractors | ( | self | ) |

Definition at line 1550 of file Scraper.py.

◆ loadLogConfigFile()

| def dc_processor.Scraper.Scraper.loadLogConfigFile | ( | self | ) |

Definition at line 1718 of file Scraper.py.

◆ loadOptions()

| def dc_processor.Scraper.Scraper.loadOptions | ( | self | ) |

Definition at line 1732 of file Scraper.py.

◆ loadScraperProperties()

| def dc_processor.Scraper.Scraper.loadScraperProperties | ( | self | ) |

Definition at line 1779 of file Scraper.py.

◆ newsExtraction()

| def dc_processor.Scraper.Scraper.newsExtraction | ( | self | ) |

Definition at line 1366 of file Scraper.py.

◆ normalizeAuthor()

| def dc_processor.Scraper.Scraper.normalizeAuthor | ( | self, | |

| confProp, | |||

| procProp, | |||

| response | |||

| ) |

Definition at line 366 of file Scraper.py.

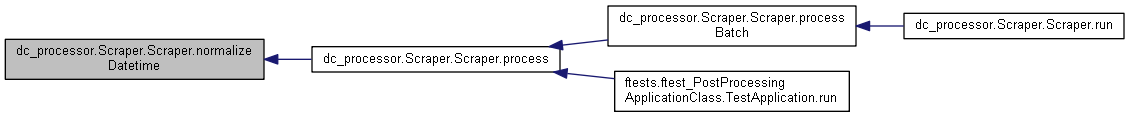

◆ normalizeDatetime()

| def dc_processor.Scraper.Scraper.normalizeDatetime | ( | self, | |

| response, | |||

| algorithmName | |||

| ) |

Definition at line 411 of file Scraper.py.

◆ parseFeed()

| def dc_processor.Scraper.Scraper.parseFeed | ( | self | ) |

Definition at line 1897 of file Scraper.py.

◆ postprocessing()

| def dc_processor.Scraper.Scraper.postprocessing | ( | self, | |

| result, | |||

| rule, | |||

| tag | |||

| ) |

Definition at line 899 of file Scraper.py.

◆ prepareResults()

| def dc_processor.Scraper.Scraper.prepareResults | ( | self, | |

| resultsList | |||

| ) |

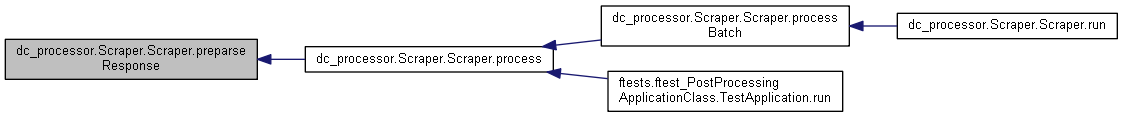

◆ preparseResponse()

| def dc_processor.Scraper.Scraper.preparseResponse | ( | self, | |

| response | |||

| ) |

Definition at line 842 of file Scraper.py.

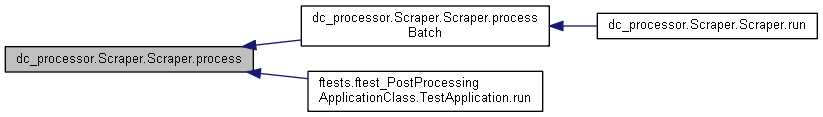

◆ process()

| def dc_processor.Scraper.Scraper.process | ( | self, | |

| config | |||

| ) |

Definition at line 599 of file Scraper.py.

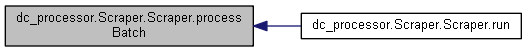

◆ processBatch()

| def dc_processor.Scraper.Scraper.processBatch | ( | self | ) |

Definition at line 1583 of file Scraper.py.

◆ processingHTMLData()

| def dc_processor.Scraper.Scraper.processingHTMLData | ( | self, | |

| htmlBuf, | |||

| bufFormat | |||

| ) |

Definition at line 1331 of file Scraper.py.

◆ pubdateMonthOrder()

| def dc_processor.Scraper.Scraper.pubdateMonthOrder | ( | self, | |

| rawPubdate, | |||

| properties, | |||

| urlString | |||

| ) |

Definition at line 2039 of file Scraper.py.

◆ pubdateTransform()

| def dc_processor.Scraper.Scraper.pubdateTransform | ( | self, | |

| rawPubdate, | |||

| rawTimezone, | |||

| properties, | |||

| urlString | |||

| ) |

◆ refineBadDateTags()

| def dc_processor.Scraper.Scraper.refineBadDateTags | ( | self, | |

| response | |||

| ) |

Definition at line 553 of file Scraper.py.

◆ refineCommonText()

| def dc_processor.Scraper.Scraper.refineCommonText | ( | self, | |

| tagName, | |||

| result | |||

| ) |

Definition at line 1448 of file Scraper.py.

◆ replaceLoopValue()

| def dc_processor.Scraper.Scraper.replaceLoopValue | ( | self, | |

| buf, | |||

| replaceFrom, | |||

| replaceTo | |||

| ) |

Definition at line 1439 of file Scraper.py.

◆ run()

| def dc_processor.Scraper.Scraper.run | ( | self | ) |

◆ setup()

| def dc_processor.Scraper.Scraper.setup | ( | self | ) |

Definition at line 166 of file Scraper.py.

◆ splitMediaTagString()

| def dc_processor.Scraper.Scraper.splitMediaTagString | ( | self, | |

| urlStringMedia | |||

| ) |

Definition at line 2132 of file Scraper.py.

◆ templateExtraction()

| def dc_processor.Scraper.Scraper.templateExtraction | ( | self, | |

| config, | |||

| urlHost | |||

| ) |

Definition at line 914 of file Scraper.py.

Member Data Documentation

◆ algorithm_name

| dc_processor.Scraper.Scraper.algorithm_name |

Definition at line 135 of file Scraper.py.

◆ altTagsMask

| dc_processor.Scraper.Scraper.altTagsMask |

Definition at line 143 of file Scraper.py.

◆ article

| dc_processor.Scraper.Scraper.article |

Definition at line 139 of file Scraper.py.

◆ attrConditions

| dc_processor.Scraper.Scraper.attrConditions |

Definition at line 156 of file Scraper.py.

◆ baseUrl

| dc_processor.Scraper.Scraper.baseUrl |

Definition at line 161 of file Scraper.py.

◆ config

| dc_processor.Scraper.Scraper.config |

Definition at line 1701 of file Scraper.py.

◆ configFile

| dc_processor.Scraper.Scraper.configFile |

Definition at line 148 of file Scraper.py.

◆ datetimeNewsNames

| dc_processor.Scraper.Scraper.datetimeNewsNames |

Definition at line 153 of file Scraper.py.

◆ datetimeTemplateTypes

| dc_processor.Scraper.Scraper.datetimeTemplateTypes |

Definition at line 154 of file Scraper.py.

◆ dbWrapper

| dc_processor.Scraper.Scraper.dbWrapper |

Definition at line 157 of file Scraper.py.

◆ entry

| dc_processor.Scraper.Scraper.entry |

Definition at line 138 of file Scraper.py.

◆ errorMask

| dc_processor.Scraper.Scraper.errorMask |

Definition at line 141 of file Scraper.py.

◆ exitCode

| dc_processor.Scraper.Scraper.exitCode |

Definition at line 126 of file Scraper.py.

◆ extractor

| dc_processor.Scraper.Scraper.extractor |

Definition at line 128 of file Scraper.py.

◆ extractors

| dc_processor.Scraper.Scraper.extractors |

Definition at line 129 of file Scraper.py.

◆ input_data

| dc_processor.Scraper.Scraper.input_data |

Definition at line 130 of file Scraper.py.

◆ itr

| dc_processor.Scraper.Scraper.itr |

Definition at line 127 of file Scraper.py.

◆ logger

| dc_processor.Scraper.Scraper.logger |

Definition at line 131 of file Scraper.py.

◆ mediaLimitsHandler

| dc_processor.Scraper.Scraper.mediaLimitsHandler |

Definition at line 158 of file Scraper.py.

◆ message_queue

| dc_processor.Scraper.Scraper.message_queue |

Definition at line 137 of file Scraper.py.

◆ metrics

| dc_processor.Scraper.Scraper.metrics |

Definition at line 142 of file Scraper.py.

◆ MSG_ERROR_WRONG_CONFIG_FILE_NAME

|

static |

Definition at line 108 of file Scraper.py.

◆ output_data

| dc_processor.Scraper.Scraper.output_data |

Definition at line 149 of file Scraper.py.

◆ outputFormat

| dc_processor.Scraper.Scraper.outputFormat |

Definition at line 140 of file Scraper.py.

◆ processedContent

| dc_processor.Scraper.Scraper.processedContent |

Definition at line 146 of file Scraper.py.

◆ properties

| dc_processor.Scraper.Scraper.properties |

Definition at line 134 of file Scraper.py.

◆ pubdate

| dc_processor.Scraper.Scraper.pubdate |

response.tagsLangDetecting(self.properties[CONSTS.LANG_PROP_NAME])

Definition at line 136 of file Scraper.py.

◆ scraperPropFileName

| dc_processor.Scraper.Scraper.scraperPropFileName |

Definition at line 133 of file Scraper.py.

◆ sqliteTimeout

| dc_processor.Scraper.Scraper.sqliteTimeout |

Definition at line 132 of file Scraper.py.

◆ tagReduceMask

| dc_processor.Scraper.Scraper.tagReduceMask |

Definition at line 160 of file Scraper.py.

◆ tagsCount

| dc_processor.Scraper.Scraper.tagsCount |

Definition at line 144 of file Scraper.py.

◆ tagsMask

| dc_processor.Scraper.Scraper.tagsMask |

Definition at line 145 of file Scraper.py.

◆ tagsTypes

| dc_processor.Scraper.Scraper.tagsTypes |

Definition at line 155 of file Scraper.py.

◆ urlHost

| dc_processor.Scraper.Scraper.urlHost |

Definition at line 150 of file Scraper.py.

◆ urlSourcesRules

| dc_processor.Scraper.Scraper.urlSourcesRules |

Definition at line 159 of file Scraper.py.

◆ usageModel

| dc_processor.Scraper.Scraper.usageModel |

Definition at line 147 of file Scraper.py.

◆ useCurrentYear

| dc_processor.Scraper.Scraper.useCurrentYear |

Definition at line 152 of file Scraper.py.

◆ WWW_PREFIX

|

static |

Definition at line 110 of file Scraper.py.

◆ xpathSplitString

| dc_processor.Scraper.Scraper.xpathSplitString |

Definition at line 151 of file Scraper.py.

The documentation for this class was generated from the following file:

- sources/hce/dc_processor/Scraper.py